Answering my own question^: yes, my intuition was right. If you have an 8 KB cache and you always access memory in strides of 8 KB, you'll absolutely trigger this problem. :)

@mziv Yes! This is a common problem known as thrashing: the conflicting lines keep evicting each other, so almost every access misses.
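To make the 8 KB example concrete, here is a minimal sketch of a direct-mapped cache. The 8 KB capacity matches the example above, but the 64-byte line size and the direct-mapped organization are assumptions chosen for illustration, and `simulate_direct_mapped` is a hypothetical helper. Because a line's slot is `(addr // LINE_SIZE) % NUM_LINES`, two addresses exactly 8 KB apart land in the same slot and keep evicting each other.

```python
# Minimal direct-mapped cache model (assumed: 8 KB cache, 64 B lines).
CACHE_SIZE = 8 * 1024
LINE_SIZE = 64
NUM_LINES = CACHE_SIZE // LINE_SIZE  # 128 slots, one line each


def simulate_direct_mapped(addresses):
    """Return the number of misses for a sequence of byte addresses."""
    slots = [None] * NUM_LINES  # each slot holds the tag of the line it caches
    misses = 0
    for addr in addresses:
        block = addr // LINE_SIZE      # which memory line this byte belongs to
        index = block % NUM_LINES      # the only slot this line may occupy
        tag = block // NUM_LINES       # distinguishes lines that share a slot
        if slots[index] != tag:
            misses += 1
            slots[index] = tag         # evict whatever was there before
    return misses


# Alternate between two addresses, 100 times each.
far_apart = [0, CACHE_SIZE] * 100      # 8 KB apart: same slot, they evict each other
close = [0, LINE_SIZE] * 100           # adjacent lines: different slots

print(simulate_direct_mapped(far_apart))  # 200: every access misses
print(simulate_direct_mapped(close))      # 2: only the two cold misses
```

A fully associative cache of the same size would hold both lines in the first case too and miss only twice, which is why these count as conflict misses rather than capacity misses.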

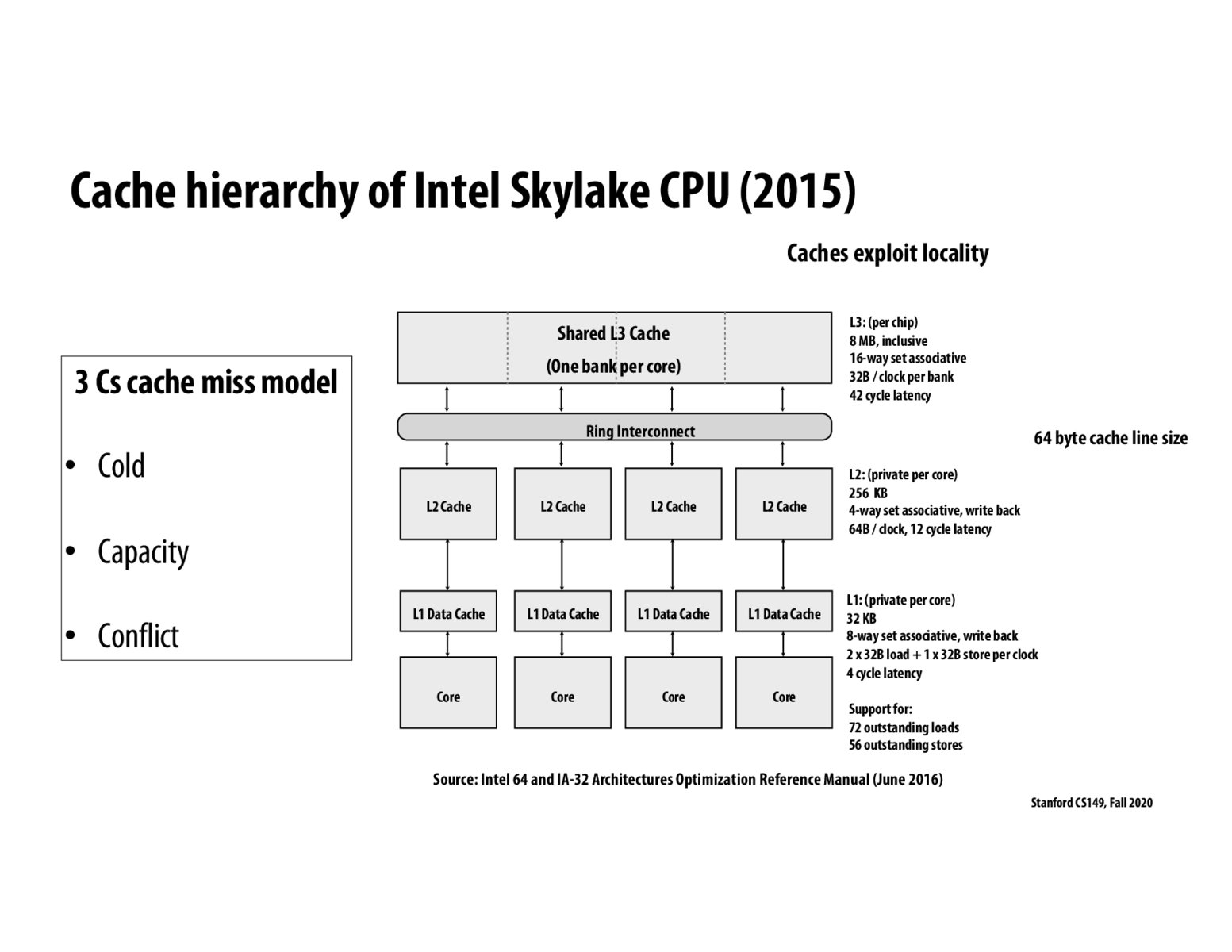

Why is the L2 cache in this case 4-way set associative, which is lower than the L1 cache's 8-way set associativity? I was imagining that as we go higher in the hierarchy, we'd want more capability.

^ This is a really good question. I've been doing a bit of reading about it, and it looks like the associativity of the L1 and L2 caches doesn't have to differ much (before Skylake, both the L1 and L2 caches on Intel chips were 8-way set associative). Even WikiChip doesn't treat the L2 cache becoming 4-way set associative on this chip as a big deal. One response I read pointed to a tradeoff between parallelism and power consumption: lower associativity means fewer tag comparisons run in parallel on each lookup, which saves power. The writer suggested that on the L1 cache the performance-power tradeoff of higher associativity might be worth it, while on the L2 cache it might not be if the ratio is less favorable. Not sure if this is 100% on the money, but it seemed pretty compelling.

WikiChip: https://en.wikichip.org/wiki/intel/microarchitectures/skylake_(client)

If my understanding is correct, in an N-way set-associative cache the address selects a single set, so you only have to check the N lines in that set for the desired tag. Maybe the fewer lines you have to check, the faster the operation is? I don't quite understand how the tag query operation is implemented.
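A rough sketch of how the tag query works, assuming 64-byte lines and a power-of-two number of sets (the 32 KB, 8-way parameters are illustrative, and `split_address` and `lookup` are hypothetical helpers): the set-index bits of the address pick exactly one set, and only the N tags in that set are compared. Real hardware does those N comparisons in parallel, so raising N means more comparators active on every access.

```python
# Illustrative parameters (assumed): 32 KB, 8-way, 64-byte lines.
LINE_SIZE = 64
CACHE_SIZE = 32 * 1024
WAYS = 8
NUM_SETS = CACHE_SIZE // (LINE_SIZE * WAYS)   # 64 sets

OFFSET_BITS = LINE_SIZE.bit_length() - 1      # 6 bits select the byte within a line
INDEX_BITS = NUM_SETS.bit_length() - 1        # 6 bits select the set


def split_address(addr):
    """Split a byte address into (tag, set index, line offset)."""
    offset = addr & (LINE_SIZE - 1)
    index = (addr >> OFFSET_BITS) & (NUM_SETS - 1)
    tag = addr >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


def lookup(sets, addr):
    """Return (hit, tags_compared). Only the one indexed set is searched."""
    tag, index, _ = split_address(addr)
    ways = sets[index]                 # WAYS slots; None marks an invalid way
    return tag in ways, len(ways)      # hardware compares all WAYS tags at once


sets = [[None] * WAYS for _ in range(NUM_SETS)]
tag, index, _ = split_address(0x12345)
sets[index][0] = tag                   # pretend this line was filled earlier

print(split_address(0x12345))                         # (18, 13, 5)
print(lookup(sets, 0x12345))                          # (True, 8)
print(lookup(sets, 0x12345 + NUM_SETS * LINE_SIZE))   # (False, 8): same set, other tag
print(f"{WAYS} of {NUM_SETS * WAYS} lines checked per lookup")  # 8 of 512
```

In this model the fraction of lines examined per lookup is N / (total lines), i.e. 1 / (number of sets). For a fixed capacity, higher N means fewer, larger sets and more tag comparisons per access, which is consistent with the power tradeoff discussed in the thread.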

The following page explains the types of cache misses well: https://www.geeksforgeeks.org/types-of-cache-misses/

From my understanding of the lecture, a cache miss means the requested data is not in the cache, so it has to be fetched from main memory (or the next cache level). A cold miss happens the first time data is requested, because nothing for it is stored in the cache yet. A capacity miss happens when the requested data is no longer in the cache because the cache is too small to hold everything being used. A conflict miss happens because each memory block can only be placed in a restricted group (set) of cache locations. So even when there is free space elsewhere in the cache, a block's set can fill up and evict data that will be needed again.

Going to try to define these types of cache misses:

- Cold miss: the first access to a particular memory line. These misses occur even with an infinitely large cache.
- Capacity miss: occurs because the cache has finite size; the working set doesn't fit, so lines get evicted before they are reused. These would happen even in a fully associative cache of the same size.
- Conflict miss: in a cache that is not fully associative, when too many memory lines map to the same set, you have to evict blocks from that set even if other parts of the cache are unoccupied. In a fully associative cache, conflict misses cannot occur by definition, because any line can be stored anywhere in the cache.
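One common way to turn these definitions into a procedure, sketched here under assumptions (LRU replacement, a direct-mapped cache as the "real" cache, and a hypothetical helper `classify_misses`): a miss is cold if the line was never accessed before; otherwise it is a capacity miss if a fully associative LRU cache of the same total size would also miss, and a conflict miss if that fully associative cache would have hit.

```python
from collections import OrderedDict


def classify_misses(blocks, num_lines):
    """Classify the misses of a direct-mapped cache with num_lines lines.

    blocks: sequence of memory line (block) numbers.
    Returns counts of 'hit', 'cold', 'capacity', and 'conflict'.
    """
    direct = [None] * num_lines   # real cache: block b may only live in slot b % num_lines
    full = OrderedDict()          # reference: fully associative LRU cache, same capacity
    seen = set()                  # every block accessed so far
    counts = {"hit": 0, "cold": 0, "capacity": 0, "conflict": 0}

    for b in blocks:
        # Simulate the fully associative reference cache (oldest entry first).
        full_hit = b in full
        if full_hit:
            full.move_to_end(b)
        else:
            if len(full) == num_lines:
                full.popitem(last=False)   # evict the least recently used line
            full[b] = True

        slot = b % num_lines
        if direct[slot] == b:
            counts["hit"] += 1
        elif b not in seen:
            counts["cold"] += 1            # first touch: misses in any cache
        elif full_hit:
            counts["conflict"] += 1        # only the restricted placement caused this
        else:
            counts["capacity"] += 1        # even a fully associative cache misses
        direct[slot] = b
        seen.add(b)
    return counts


print(classify_misses([0, 4, 0, 4], num_lines=4))
# {'hit': 0, 'cold': 2, 'capacity': 0, 'conflict': 2}
print(classify_misses([0, 1, 2, 3, 4, 0], num_lines=4))
# {'hit': 0, 'cold': 5, 'capacity': 1, 'conflict': 0}
```

In the first trace, blocks 0 and 4 share slot 0 even though the cache has room for both, so their repeat accesses are conflict misses; in the second, five distinct blocks cycle through a four-line cache, so the reuse of block 0 is a capacity miss.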

I just want to make sure I understand the concept of set associativity in regards to conflict misses. So, this set associativity (let's say it is n) basically allocates n slots for the cache lines whose addresses map to a given set (i.e., share the same set-index bits), and you can keep at most n such lines in that set at once. So, does a conflict miss occur when you pull in the (n+1)th cache line that maps to that set and you have to evict one of the set's entries?

@viklassic. Correct. A conflict miss occurs when the working set is smaller than the size of the cache, but a miss still occurs when accessing data that had been previously read. Had the cache been "fully associative" (any line can go anywhere), there would not have been a miss. A fully associative cache will not suffer from conflict misses, only cold and capacity misses.
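Here is a small sketch of that scenario, assuming LRU replacement and a hypothetical `SetAssocCache` class (4 sets, 2 ways, 64-byte lines, chosen only for illustration). Cycling through n+1 = 3 lines that all map to set 0 makes every access miss, even though the working set is only 3 lines in an 8-line cache. Setting `num_sets=1` turns the same class into a fully associative cache, which misses only on the first touch of each line.

```python
from collections import OrderedDict


class SetAssocCache:
    """N-way set-associative cache with LRU replacement within each set."""

    def __init__(self, num_sets, ways, line_size):
        self.num_sets = num_sets
        self.ways = ways
        self.line_size = line_size
        # One OrderedDict per set; keys are tags, ordered least to most recently used.
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, addr):
        """Return True on a hit, False on a miss (the line is filled on a miss)."""
        block = addr // self.line_size
        index = block % self.num_sets
        tag = block // self.num_sets
        s = self.sets[index]
        if tag in s:
            s.move_to_end(tag)         # mark as most recently used
            return True
        if len(s) == self.ways:
            s.popitem(last=False)      # evict the LRU line of this set only
        s[tag] = True
        return False


cache = SetAssocCache(num_sets=4, ways=2, line_size=64)
stride = cache.num_sets * cache.line_size      # 256 B apart: same set, different tag
conflicting = [i * stride for i in range(3)]   # n + 1 = 3 lines, all in set 0
results = [cache.access(a) for _ in range(10) for a in conflicting]
print(results.count(False))                    # 30: every access misses

full = SetAssocCache(num_sets=1, ways=8, line_size=64)   # fully associative, 8 lines
print(sum(not full.access(a) for _ in range(10) for a in conflicting))  # 3
```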

I think in lecture Professor Kunle mentioned that higher-associativity caches are slower and lower-associativity caches are faster. That's why L1 and L2 have lower associativity than L3. As for misses increasing as we go from higher to lower associativity, the idea was that from L3 to L1 we have adequate bandwidth, so we can tolerate that degradation, since the main bottleneck is between main memory and L3.

Found this link to be very helpful: https://www.youtube.com/watch?v=r5vY6OFSB04 It's hard to summarize this video; the lecturer walks through a set of examples of the three Cs model of cache misses. If you are having trouble understanding the three Cs (especially conflict misses), this video might be a good resource.


Are certain memory access patterns more likely to cause conflict misses? Like if you make a lot of accesses spread across memory whose addresses are equal modulo the cache size, or something?
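Roughly, yes: in a simple modulo-indexed cache, strides that are a multiple of (number of sets × line size) send every access to the same set. A sketch, assuming a 32 KB, 8-way cache with 64-byte lines (so 64 sets and a 4 KB "set stride"); `sets_touched` is a hypothetical helper that counts how many distinct sets 64 accesses at a given stride land in. Real hardware may index some cache levels differently, so treat this as a model rather than a prediction for a specific chip.

```python
# Assumed cache: 32 KB / (8 ways * 64 B lines) = 64 sets.
LINE_SIZE = 64
NUM_SETS = 64
WAYS = 8


def sets_touched(stride, n_accesses=64):
    """Number of distinct sets hit by addresses 0, stride, 2*stride, ..."""
    return len({(i * stride // LINE_SIZE) % NUM_SETS for i in range(n_accesses)})


for stride in (64, 128, 4096, 4096 + 64):
    print(stride, sets_touched(stride))
# 64 64
# 128 32
# 4096 1
# 4160 64
```

With a 4 KB stride, 64 distinct lines compete for the 8 ways of a single set, so most of the cache goes unused and the lines keep evicting each other; offsetting the stride by one line (4160 B) spreads the same number of accesses over all 64 sets.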