Yes Spark can recover from crashes, but do we ever need to adjust our approach to reduce the possibility of crashes rather just re-running the computations? For instance, if a job is too taxing on one node and causes it to die mid-computing, would it be fair to assume the next node that job is re-run on would also die? Or do we attribute the crashes to demarcation of hardware that may vary from node to node?

@jgrace That's an interesting point - I haven't thought about in that way. In my mind, whenever node failure was mentioned, I've imagined the power going out in the node's rack or some hardware failure on the node, but perhaps it is also possible for software to exhaust a node's capabilities. If so, I think this software issue would be a problem for not just Spark but any other distributed computing service.

@jgrace It seems that if the workload for one node failed, the scheduler could re-partition the workload and distribute to other free nodes, as described in WA3 Practice 1C soln. This would effectively alleviate the next node crash.

Please log in to leave a comment.

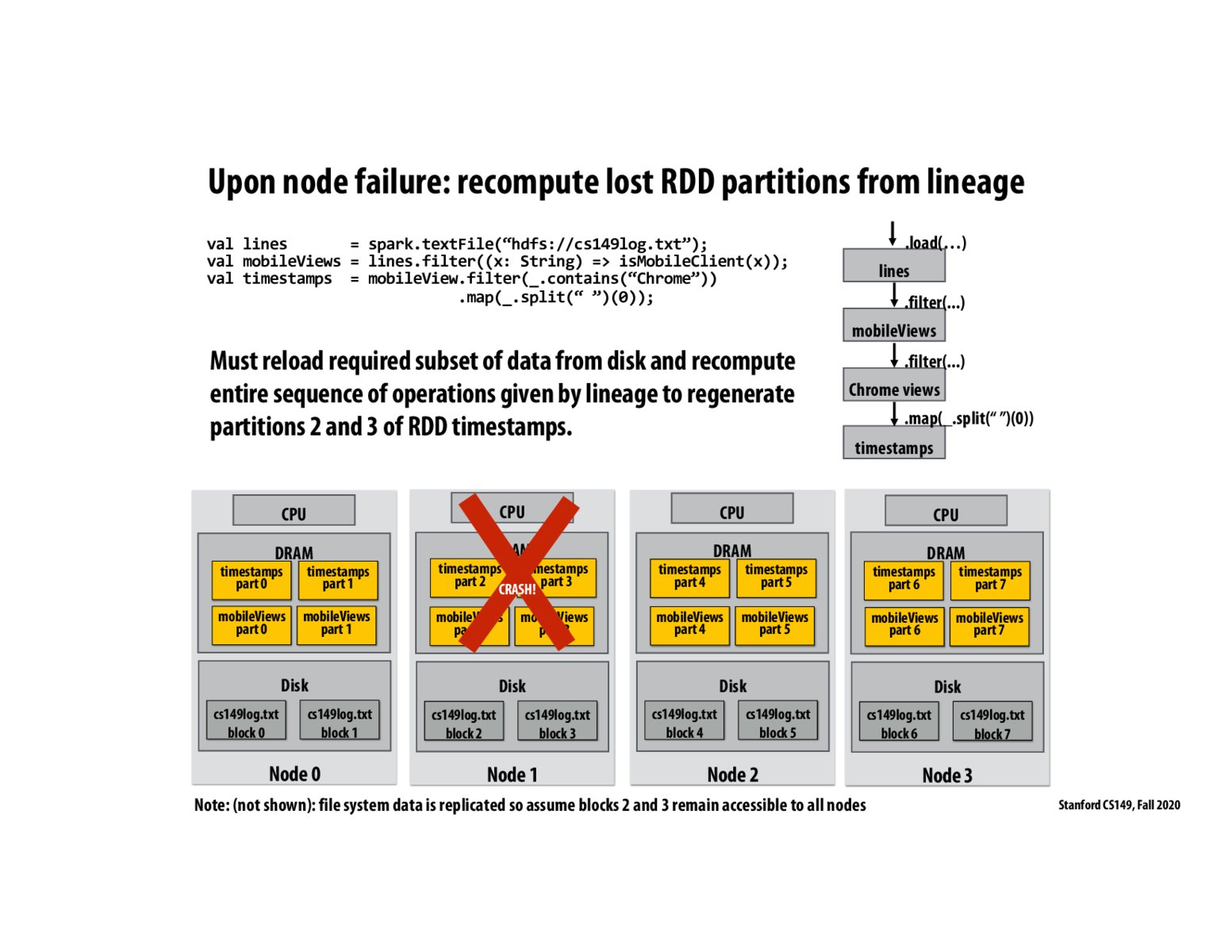

As Professor Kunle explained, Spark keeps a "lineage", or list of the transformations to form the RDD partitions, so that if there is a crash (usually hardware-induced), the lineage can tell Spark how to redo the computations starting from ground 0 (data that starts in disk) and regenerate partitions of the RDD.