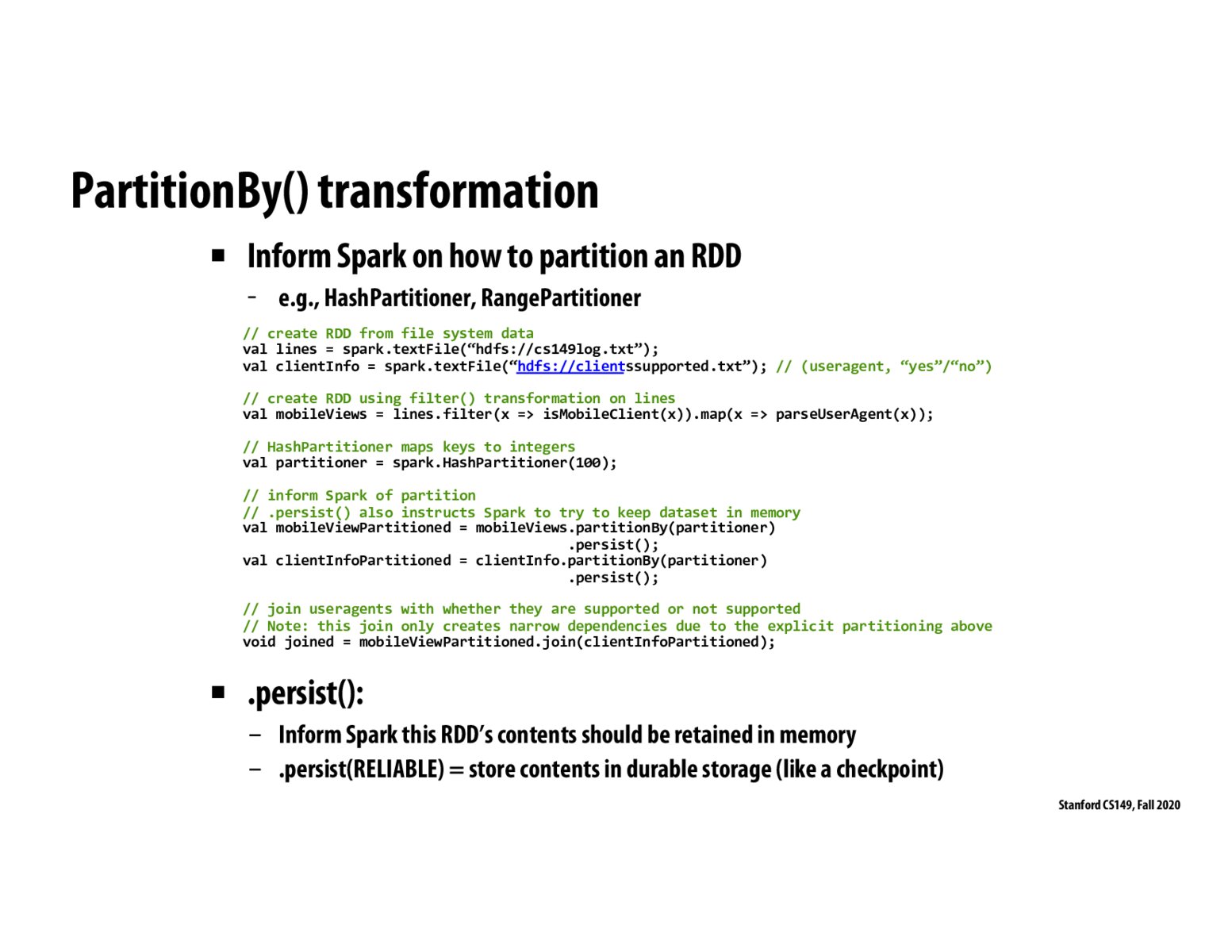

@Nian I think a similar question came up in the chat during the lecture and Kayvon made the point that the baseline Spark system does indeed partition/aggregate data across many machines automatically. However, as we saw in previous slides, this can sometimes lead to really "wide" dependencies when it comes time to do operations like sort and groupByKey, which introduces global computation on top of many locally partitioned blocks of data. However, by giving the programmer the ability to manually partition their data using a custom hash function, we can enable much better performance than could be achieved automatically. This is because if the programmer specifies a hash function that requires "similar" data to live on the same machine, the groupByKey and sort operations now have much narrower dependencies, reducing inter-computer communication and improving performance. So to summarize, the Spark system can do all this automatically (and will as a default) but giving the programmer hooks to manually control these instructions can result in much more performant code.

I am still a bit confused about .persist(). What is the difference between persist in memory vs. persist in disk since I believe memory is not persistant storage?

@thread17 I think the persist in memory is more like a design to tell that some intermediate results will be reused. A link that helps me understand: https://sparkbyexamples.com/spark/spark-difference-between-cache-and-persist/

@thread17 I think persist() doesn't refer to "persistent storage" in the way you're describing, and I agree that it's a bit confusing. As @orz said, it just a way to indicate that the node will be re-using the intermediate value, so it should "persist" and stick around in memory. If a fault occured, you're totally right that the value would not "persist" if it was stored in memory. However, the benefit of the deterministic lineage transformations is that we can recompute this RSS and re-persist() it to continue with the computation.

It appears that persist(RELIABLE) has a similar effect to saving intermediate results in a distributed file system. When would we want to call persist(RELIABLE) while using Spark but not want to use a distributed file system?

Once we call persist, how long are these values kept in memory? Is there a call that flushes the values, once you are done using them? Is a call like this necessary?

Here's a link for anyone who is curious about range partitioning in Spark. https://stackoverflow.com/questions/41534392/how-does-range-partitioner-work-in-spark

PartitionBy takes a supplied hash and returns a new RDD with the specified number of partitions. So if we run a groupby for example and everything ends up in 1 partition, we can use PartitionBy to break up the large partition into smaller ones.

Please log in to leave a comment.

For join, why cannot the spark system automatically decide to first partition then join? I think some sql server does join exactly in this way, right?