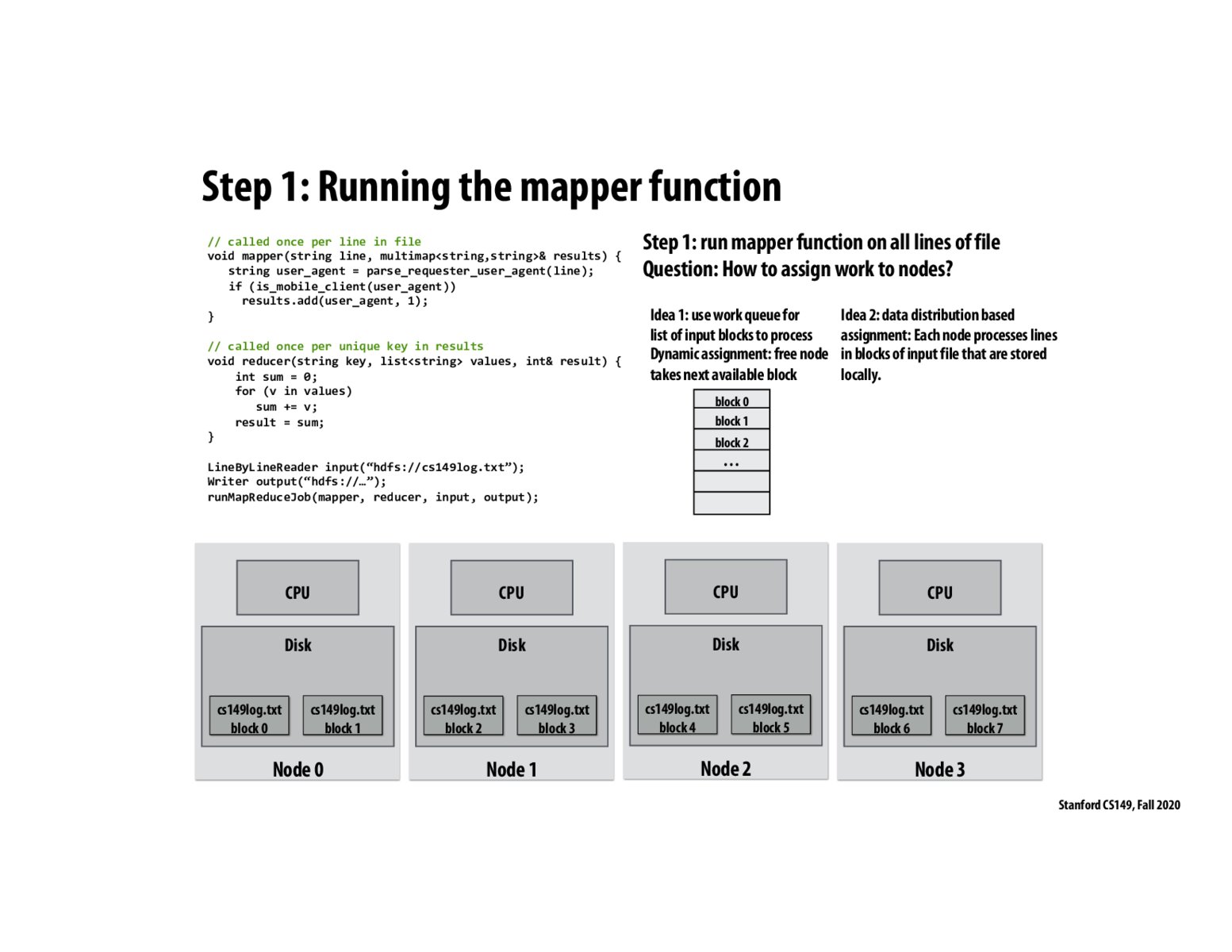

As nickbowman said, Idea 1 has a potential issue related to communication. Even though it might lead to balanced workloads, it could add communication overhead, and the whole process might become slower as a result.

Can we keep local storage evenly distributed when storing all the file blocks on the nodes? If that is possible, I think we could combine the two methods and achieve a balanced workload while still doing the computation locally.

I think you can definitely achieve "evenly distributed" local storage, in the sense that every machine would store the same amount of block data. However, I don't think that implies a balanced workload, because different inputs might take unequal amounts of time to run to completion (maybe some inputs need more computation, or some of the machines have weaker CPUs, etc.).
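A tiny simulation makes this concrete. All numbers below are made up: eight equal-size blocks are placed round-robin across four nodes, so every node stores exactly two blocks, but two of the blocks are assumed to need 4x the compute, and one node is assumed to have a CPU running at half speed. `place_round_robin` and `finish_times` are hypothetical helpers, not part of any framework.

```python
# Illustrative numbers only: 8 equal-size blocks, 4 nodes.
NUM_NODES = 4
block_cost_s = [4.0, 1.0, 1.0, 1.0, 4.0, 1.0, 1.0, 1.0]  # assumed compute time per block
node_speed = [1.0, 1.0, 1.0, 0.5]  # assumption: node 3's CPU runs at half speed


def place_round_robin(num_blocks, num_nodes):
    """Hypothetical placement: block i goes to node i % num_nodes,
    so every node stores the same number of (equal-size) blocks."""
    return [i % num_nodes for i in range(num_blocks)]


def finish_times(placement, costs, speeds):
    """Each node processes only its locally stored blocks; return per-node busy time."""
    busy = [0.0] * len(speeds)
    for block, node in enumerate(placement):
        busy[node] += costs[block] / speeds[node]
    return busy


placement = place_round_robin(len(block_cost_s), NUM_NODES)
blocks_per_node = [placement.count(n) for n in range(NUM_NODES)]
busy = finish_times(placement, block_cost_s, node_speed)

print(blocks_per_node)  # [2, 2, 2, 2]  -> storage is perfectly even
print(busy)             # [8.0, 2.0, 2.0, 4.0]  -> work is not
```

Storage is perfectly even, yet node 0 is busy for 8 s while nodes 1 and 2 sit idle after 2 s.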

To actually achieve workload balance with this sort of static assignment (assigning input blocks to the nodes that have those blocks stored locally), we would need some prior knowledge of how much work each block entails.
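Here is one way that prior knowledge could be used, as a sketch only. Suppose per-block costs were known up front and each block were replicated on two nodes (an assumption, roughly in the spirit of how distributed file systems keep multiple copies). A greedy rule of "most expensive block first, give it to the least-loaded node that already holds a copy" then keeps every read local while evening out the load. This is the classic longest-processing-time-first heuristic restricted to replica holders, not something specified in lecture; the costs and replica layout are hypothetical.

```python
from typing import List, Tuple


def assign_with_known_costs(
    costs: List[float], replicas: List[List[int]], num_nodes: int
) -> Tuple[List[int], List[float]]:
    """Greedy static assignment: visit blocks from most to least expensive and give
    each one to the least-loaded node that already stores a copy (so reads stay local)."""
    load = [0.0] * num_nodes
    assignment = [-1] * len(costs)
    # sorted(..., reverse=True) is stable, so equal-cost blocks keep their index order
    for block in sorted(range(len(costs)), key=lambda b: costs[b], reverse=True):
        node = min(replicas[block], key=lambda n: load[n])
        assignment[block] = node
        load[node] += costs[block]
    return assignment, load


# Hypothetical inputs: known per-block costs, and block i replicated on nodes i and i+1 (mod 4).
costs = [4.0, 1.0, 1.0, 1.0, 4.0, 1.0, 1.0, 1.0]
replicas = [[i % 4, (i + 1) % 4] for i in range(len(costs))]

assignment, load = assign_with_known_costs(costs, replicas, num_nodes=4)
print(assignment)  # [0, 2, 3, 3, 1, 2, 2, 3]
print(load)        # [4.0, 4.0, 3.0, 3.0]
```

The maximum per-node load is 4.0 here, even though the two expensive blocks share the same replica set. The catch is that this only works because the costs were known in advance.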

Perhaps we could implement a system (like Cilk) that tries to get the best of both worlds: each node has dedicated tasks as per Idea 2, but if a node runs out of work, it can steal work from other nodes over the network. This would be less efficient than properly load balancing ex ante, but it might be more resilient to some of the issues discussed in lecture around heterogeneous node speeds and task complexities.
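A rough discrete-event sketch of that hybrid: each node starts with a deque of its local blocks (Idea 2) and, once its own deque is empty, steals a block from a peer and pays an extra network delay. `STEAL_LATENCY` and the task costs are made-up numbers. As a simplification, the thief picks the peer with the longest queue so the output is deterministic; Cilk's scheduler instead picks victims at random. As in Cilk, the owner takes work from one end of its deque and thieves take from the other.

```python
import heapq
from collections import deque
from typing import List

STEAL_LATENCY = 0.5  # hypothetical cost (seconds) of shipping a stolen block over the network


def simulate(local_tasks: List[List[float]], allow_stealing: bool) -> float:
    """Return the makespan when each node runs its own queue of block costs and,
    if allowed, steals from the peer with the longest queue once its own is empty."""
    queues = [deque(tasks) for tasks in local_tasks]
    events = [(0.0, node) for node in range(len(queues))]  # (time node becomes free, node)
    heapq.heapify(events)
    makespan = 0.0
    while events:
        now, node = heapq.heappop(events)
        if queues[node]:
            cost = queues[node].pop()  # owner works from its own end of the deque
            heapq.heappush(events, (now + cost, node))
        elif allow_stealing and any(queues):
            victim = max(range(len(queues)), key=lambda v: len(queues[v]))
            cost = queues[victim].popleft()  # thief takes from the opposite end
            heapq.heappush(events, (now + STEAL_LATENCY + cost, node))
        else:
            makespan = max(makespan, now)  # nothing this node can run; it retires
    return makespan


# Hypothetical local queues: node 0 happens to store both expensive blocks.
tasks = [[4.0, 4.0], [1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
no_steal = simulate(tasks, allow_stealing=False)
with_steal = simulate(tasks, allow_stealing=True)
print(no_steal)    # 8.0
print(with_steal)  # 6.5
```

Stealing cuts the makespan from 8.0 to 6.5 here. That is better than pure static assignment but worse than the 4.0 an ideal assignment of these blocks could reach, which fits the idea of stealing as a fallback rather than a substitute for good ex ante balancing.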


Idea 2 is the better workload distribution strategy here (assuming computation on each file block takes roughly the same amount of time) because it exploits locality ("brings the compute to the data" by having each CPU process the data stored on its local disk) and reduces the need for communication across the network.
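To put a number on "reduces the need for communication", here is a hypothetical count of remote block reads. It assumes Idea 1 means handing blocks out from a shared queue to whichever node is free (with equal-cost blocks this works out to round-robin), that node n stores a contiguous run of blocks, and a block size of 128 MB. All of these are illustrative assumptions, not details from the slide.

```python
from typing import List

BLOCK_MB = 128  # assumed block size
NUM_BLOCKS, NUM_NODES = 8, 4


def home_nodes(num_blocks: int, num_nodes: int) -> List[int]:
    """Hypothetical storage layout: node n holds a contiguous run of blocks."""
    per_node = num_blocks // num_nodes
    return [b // per_node for b in range(num_blocks)]


def idea1_shared_queue(num_blocks: int, num_nodes: int) -> List[int]:
    """Assumed Idea 1: blocks are handed out in order to whichever node is free.
    With equal-cost blocks and identical nodes this reduces to round-robin."""
    return [b % num_nodes for b in range(num_blocks)]


def idea2_local(home: List[int]) -> List[int]:
    """Idea 2: every block is processed by the node that stores it."""
    return list(home)


def remote_reads(assignment: List[int], home: List[int]) -> int:
    """Count blocks whose worker is not the node that stores them."""
    return sum(1 for worker, owner in zip(assignment, home) if worker != owner)


home = home_nodes(NUM_BLOCKS, NUM_NODES)
idea1 = idea1_shared_queue(NUM_BLOCKS, NUM_NODES)
idea2 = idea2_local(home)

print(remote_reads(idea1, home), remote_reads(idea1, home) * BLOCK_MB)  # 6 768
print(remote_reads(idea2, home), remote_reads(idea2, home) * BLOCK_MB)  # 0 0
```

Under these assumptions, 6 of the 8 blocks (768 MB) cross the network with Idea 1, while none do with Idea 2.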