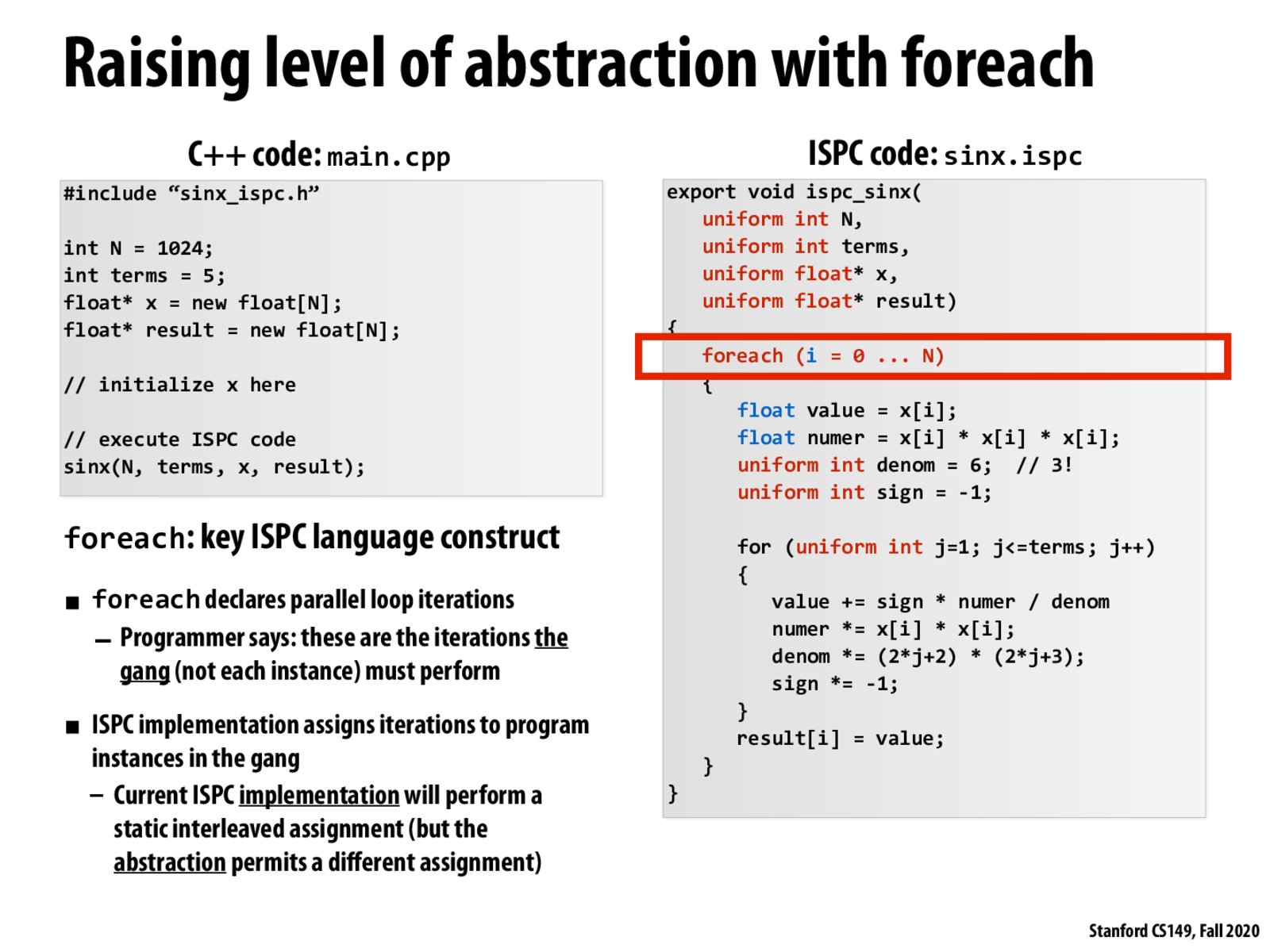

Why doesn't this foreach abstraction exist in other languages (C, C++, maybe even Python, etc.)? Have I just not looked hard enough?

It seems like you could write a library that provides a "foreach" loop, and if the body met certain conditions (like having no conditionals), it wouldn't even be that hard to implement.

Is there a big tradeoff? Would it add too much complexity? Or does it already exist and I just haven't looked hard enough?
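Here is a minimal C++ sketch of the kind of library-level `foreach` described above. `foreach_blocked`, `affine`, and the block width of 8 are hypothetical names and values chosen for illustration, not an existing API. The library only guarantees branch-free, fixed-size inner loops. Whether those loops actually become SIMD instructions is still up to the compiler's auto-vectorizer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical library-level "foreach": visits every index in [0, n) by
// walking fixed-size blocks of Width indices (roughly one ISPC "gang" per
// block), then handles any leftover indices one at a time.
template <std::size_t Width, typename Body>
void foreach_blocked(std::size_t n, Body body) {
    static_assert(Width > 0, "block width must be positive");
    std::size_t i = 0;
    // Full blocks: constant trip count and no early exits, which makes the
    // inner loop a good candidate for vectorization once `body` is inlined.
    // Nothing here forces the compiler to vectorize it, though.
    for (; i + Width <= n; i += Width) {
        for (std::size_t lane = 0; lane < Width; ++lane) {
            body(i + lane);
        }
    }
    // Leftover elements; ISPC instead runs one final masked iteration.
    for (; i < n; ++i) {
        body(i);
    }
}

// Example body with no conditionals: y[i] = 2 * x[i] + 1.
void affine(const std::vector<float>& x, std::vector<float>& y) {
    assert(x.size() == y.size());  // caller must size the output
    foreach_blocked<8>(x.size(), [&](std::size_t i) { y[i] = 2.0f * x[i] + 1.0f; });
}
```

One tradeoff this makes visible: if the body contains data-dependent branches, a plain loop-based library like this can't mask off individual lanes itself the way ISPC does. Vectorization then depends on whether the compiler can turn the branch into branch-free selects.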

@pmp, I think it depends on the language, and some definitely exist! In this case, I think ISPC's foreach abstraction relies on the assumption that the program will run on CPUs with SIMD vector units (e.g., Intel's SSE/AVX), so it's tied closely to the architecture and its specific instructions. However, Rust, a language designed in large part to make concurrency safe and easy, has a crate (library) called Rayon that provides parallel iterators. The programmer writes what looks like sequential code iterating over an array, and Rayon dynamically divides the work into tasks that run on a thread pool. Also, from a quick Google search, Python's `multiprocessing` module has a `Pool` abstraction that similarly lets sequential-looking code run in parallel, across worker processes.

Rayon: https://github.com/rayon-rs/rayon

Python: https://stackoverflow.com/questions/5684992/how-can-i-parallelize-a-pipeline-of-generators-iterators-in-python
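To make the "divide the work into tasks" idea concrete, here is a minimal C++ sketch using `std::thread`. `parallel_for_chunks` and `squares` are hypothetical names. Unlike Rayon, which splits work dynamically and balances it with work stealing, and unlike `Pool`, which uses separate processes, this version gives each thread one fixed, contiguous chunk of the range. It only shows the basic shape of the idea.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Sequential-looking loop body, parallel execution: [0, n) is split
// statically into one contiguous chunk per thread (no work stealing).
template <typename Body>
void parallel_for_chunks(std::size_t n, std::size_t num_threads, Body body) {
    assert(num_threads > 0);
    std::vector<std::thread> workers;
    const std::size_t chunk = (n + num_threads - 1) / num_threads;  // ceiling division
    for (std::size_t t = 0; t < num_threads; ++t) {
        const std::size_t begin = t * chunk;
        const std::size_t end = std::min(n, begin + chunk);
        if (begin >= end) break;  // fewer elements than threads
        workers.emplace_back([=] {
            for (std::size_t i = begin; i < end; ++i) body(i);
        });
    }
    for (auto& w : workers) w.join();  // wait for every chunk to finish
}

// Example: square every index. Each element is written by exactly one
// thread, so no locking is needed.
std::vector<long> squares(std::size_t n, std::size_t num_threads) {
    std::vector<long> out(n);
    parallel_for_chunks(n, num_threads, [&out](std::size_t i) {
        out[i] = static_cast<long>(i) * static_cast<long>(i);
    });
    return out;
}
```

Note that this is thread-level parallelism across cores, which is a different thing from the SIMD lanes ISPC's foreach targets.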

@jchen I have heard that Python is very difficult to parallelize because of the global interpreter lock (GIL) in CPython, which lets only one thread execute Python bytecode at a time. As a result, using threads gives no speedup for many CPU-bound applications. Since Python is such a high-level language, I don't believe it gives me any way to say "I would like these independent instructions to run on vector-capable ALUs." I would love to see more discussion on this, since a language as easy to write as Python and as data-parallelizable as C would be... a holy grail?

@pmp I think most C++ compilers do auto-vectorization now (with the right optimization flags enabled, e.g., -O3 in GCC). If you write a simple for loop, the compiler will try to vectorize it with SIMD instructions for you when it can prove that doing so is safe.
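For example, the loop below is the kind auto-vectorizers handle well: unit-stride accesses, no branches, and no dependence between iterations. The function names are illustrative. `__restrict` is a compiler extension (accepted by GCC, Clang, and MSVC), not standard C++. With GCC you can ask which loops were vectorized using `-O3 -fopt-info-vec-optimized`, and Clang's rough equivalent is `-O2 -Rpass=loop-vectorize`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// y = a * x + y ("saxpy"): unit-stride accesses, no branches, and each
// iteration is independent, so an auto-vectorizer can turn it into SIMD code.
// __restrict (an extension) promises x and y don't overlap, so the compiler
// doesn't need to guard the vector loop with a runtime overlap check.
void saxpy(std::size_t n, float a, const float* __restrict x, float* __restrict y) {
    for (std::size_t i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}

// Convenience wrapper over std::vector; returns the updated y.
std::vector<float> saxpy_vec(float a, const std::vector<float>& x, std::vector<float> y) {
    assert(x.size() == y.size());
    saxpy(x.size(), a, x.data(), y.data());
    return y;
}
```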

Seeing this first reminded me of OpenMP's `#pragma omp parallel for` compiler directive, which is placed above a for loop. But this is very different: OpenMP divides the loop iterations among separate threads instead of interleaving the computation across SIMD lanes.
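Here is a minimal C++ sketch of the two OpenMP styles side by side. The function names are illustrative. `#pragma omp parallel for` splits iterations across threads. `#pragma omp simd` (added in OpenMP 4.0) asks the compiler to vectorize the loop within a single thread, which is closer in spirit to ISPC's foreach. Compile with `-fopenmp` (GCC/Clang) to enable both, or with `-fopenmp-simd` to honor only the `simd` pragma. Without either flag, the pragmas are ignored and both loops run serially with identical results.

```cpp
#include <cassert>
#include <vector>

// Thread-level parallelism: with -fopenmp, the iterations are divided among
// a team of threads. Without OpenMP enabled, the pragma is ignored.
void scale_threads(std::vector<float>& v, float s) {
    float* p = v.data();
    const long n = static_cast<long>(v.size());
    #pragma omp parallel for
    for (long i = 0; i < n; ++i) {
        p[i] *= s;
    }
}

// SIMD-level parallelism (OpenMP 4.0+): one thread, but the compiler is asked
// to execute several iterations at once in vector lanes.
void scale_simd(std::vector<float>& v, float s) {
    float* p = v.data();
    const long n = static_cast<long>(v.size());
    #pragma omp simd
    for (long i = 0; i < n; ++i) {
        p[i] *= s;
    }
}

// Runs both versions on copies of the same input and checks they agree.
std::vector<float> scale_both(std::vector<float> v, float s) {
    std::vector<float> w = v;
    scale_threads(v, s);
    scale_simd(w, s);
    assert(v == w);  // same arithmetic, different kind of parallelism
    return v;
}
```

OpenMP also offers a combined `#pragma omp parallel for simd`, which splits the loop across threads and then vectorizes each thread's share.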