I believe yes it's based on the slowest non-parallelizable fraction of the routine.

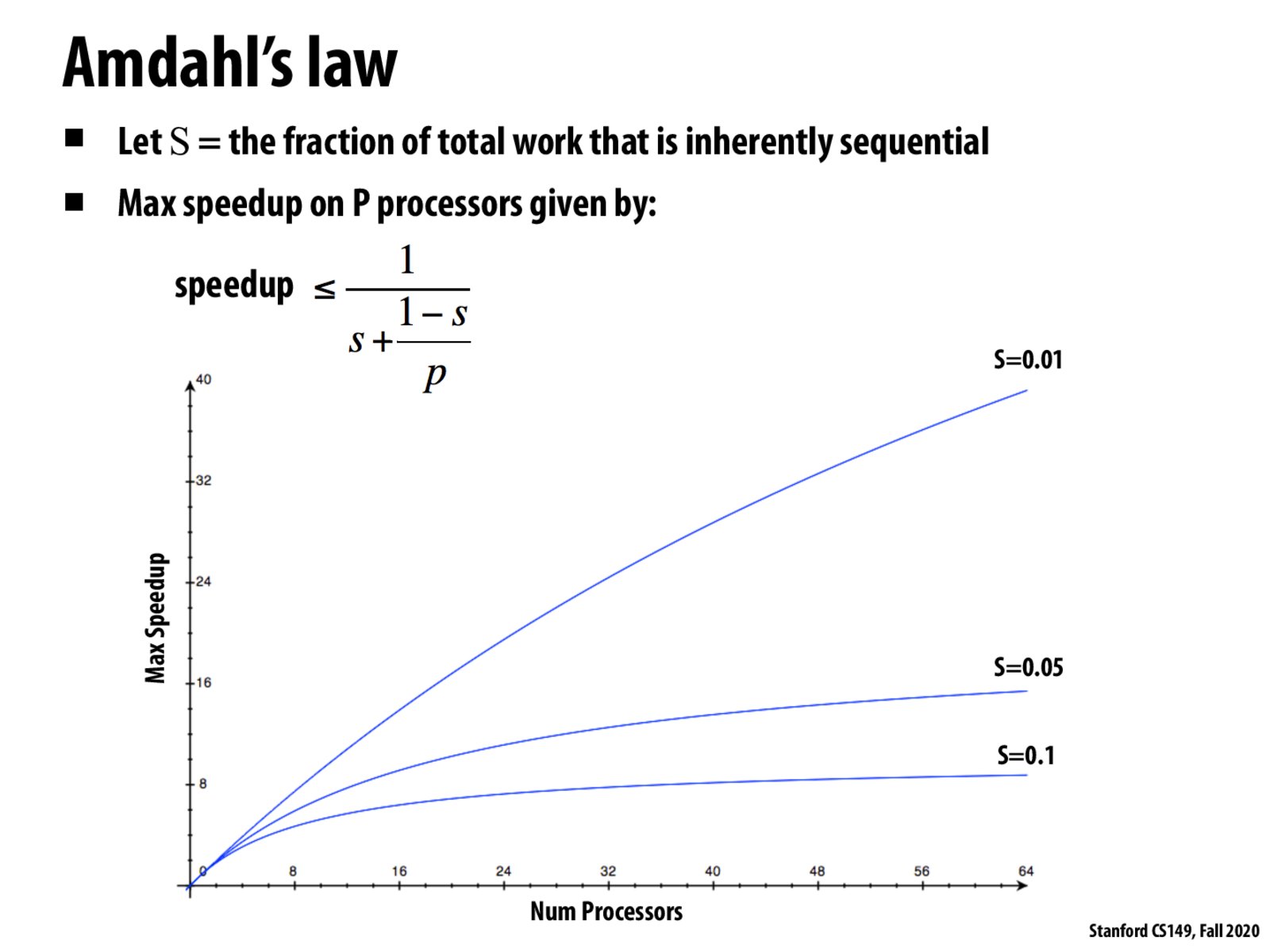

The main idea of Amdahl's law is that no matter how fast your computer is, dependencies will always be one of your most significant limiting factor in preventing speed ups by a factor of S where S is the fraction of total work that is inherently sequential.

If I'm not mistaken this is just another way of trying to understand the graph on: http://cs149.stanford.edu/fall20/lecture/whyparallelism/slide_35, since there it also shows an upper limit on the amount of speedup we can achieve using only ILP.

In my mind looking at both Amdahl's law and Diminishing returns to superscalar execution, it has solidified the importance of learning to write software code that is hardware AWARE! In both cases the limiting factor on the speed up is the fact that some programmatic logic that must be run sequentially. Looking forward to learn the techniques in this class!

In order to ensure optimal performance, dependencies should be minimized as much as possible. Even a small amount of inherently sequential work can drastically reduce the maximum potential speedup from multiple processors.

In another class I am taking the professor brought up an interesting point that some people view as a shortcoming of Amdahl's law: it assumes that the problem size stays fixed as parallelism increases. One of the examples he gave to illustrate this was the problem of rendering some graphical image. In this case, the person doing the rendering can make use of newly added processors by simply decided to render at a higher level of detail, increasing the parallelism in the problem.

@Jonathan: That's interesting. I think the key problem is that S is defined independent of P. If it depends on P, then adding more parallelism would potentially lead to a lower S (according to how the program changes its behavior when it gets more processors). It seems that determining S based both on the program itself and the number of processors to be used would fix the issue? If the program behavior doesn't change with P, then the law would work as it is. If the program behavior does change with P, that change would lead to a different S.

Please log in to leave a comment.

Based on this would you say your program is only as fast as it's slowest fraction?