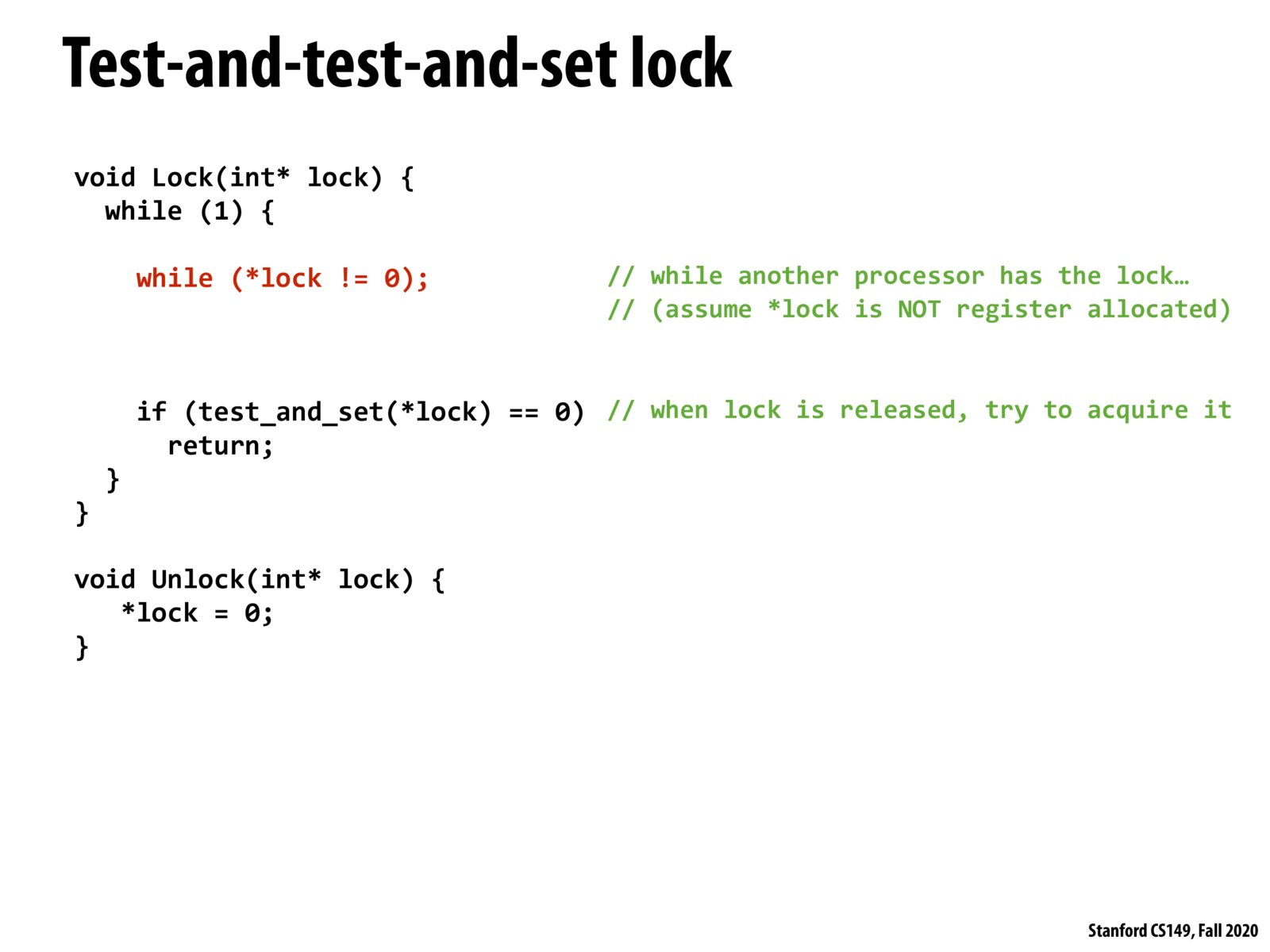

From this example, we see that reads disturb the cache coherence protocol far less than writes do. That lets us trade a few extra reads for fewer superfluous write attempts on the cache coherence bus, which reduces overall communication congestion and improves performance.

One important aspect of this nested while loop is that the inner while loop can exit (meaning the lock was released) while the thread is still unable to acquire the lock, because another thread acquired it first. This seems to be an interesting parallel to condition variables, where in assignment 2 the condition variable wait needed to be wrapped in a loop (or in C++ the wait function handled that loop for you). It's a little different, since condition variables don't use a spin lock, but it's similar in that knowing the lock is available is not the same thing as being able to acquire it, which is why this nested loop is necessary.
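To make the condition variable parallel concrete, here is a minimal C++ sketch. It is not the assignment 2 code; `Mailbox`, `put`, `take`, and `mailbox_demo` are hypothetical names made up for illustration. The point is only that a woken thread re-checks its condition before proceeding, just as a spinning thread must retry after seeing the lock free.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>

// Hypothetical one-way mailbox, used only to illustrate the wait loop.
struct Mailbox {
    std::mutex m;
    std::condition_variable cv;
    std::queue<int> items;

    void put(int v) {
        {
            std::lock_guard<std::mutex> guard(m);
            items.push(v);
        }
        cv.notify_one();
    }

    int take() {
        std::unique_lock<std::mutex> lk(m);
        // Being woken up does not guarantee the condition still holds:
        // another thread may have taken the item first, or the wakeup
        // may be spurious. So the wait is wrapped in a loop.
        while (items.empty()) {
            cv.wait(lk);
        }
        // The predicate overload runs the same loop for you:
        //   cv.wait(lk, [&] { return !items.empty(); });
        int v = items.front();
        items.pop();
        return v;
    }
};

// Small demo: one consumer blocks in take() until the main thread puts a value.
int mailbox_demo() {
    Mailbox box;
    int got = 0;
    std::thread consumer([&] { got = box.take(); });
    box.put(42);
    consumer.join();
    return got;
}
```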

The inner while loop keeps checking to see if the lock has been released. If so, it exits the inner while loop, but then uses another if statement, because the lock could have been taken by another thread in the time between the inner while loop and the if statement.
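For reference, here is a rough C++ sketch of the test-and-test-and-set pattern described above. It assumes `std::atomic<int>` with `exchange` standing in for the slide's test-and-set instruction; `TTASLock` and `run_counter_demo` are hypothetical names, and the slide's actual code may differ.

```cpp
#include <atomic>
#include <thread>
#include <vector>

// Hypothetical lock type: 0 means free, 1 means held.
struct TTASLock {
    std::atomic<int> flag{0};

    void lock() {
        while (true) {
            // "Test": spin using loads only. Readers can all keep the
            // line in the shared state, so this generates no write traffic.
            while (flag.load(std::memory_order_relaxed) != 0) {
            }
            // "Test-and-set": the lock looked free, so attempt the write.
            // exchange returns the previous value; 0 means we won the race.
            // If another thread got there first, go back to spinning.
            if (flag.exchange(1, std::memory_order_acquire) == 0) {
                return;
            }
        }
    }

    void unlock() { flag.store(0, std::memory_order_release); }
};

// Demo: several threads increment a shared counter under the lock.
long run_counter_demo(int num_threads, int iters) {
    TTASLock lock;
    long counter = 0;
    std::vector<std::thread> threads;
    for (int t = 0; t < num_threads; ++t) {
        threads.emplace_back([&] {
            for (int i = 0; i < iters; ++i) {
                lock.lock();
                ++counter;  // protected by the lock
                lock.unlock();
            }
        });
    }
    for (auto& th : threads) {
        th.join();
    }
    return counter;
}
```

If the lock is correct, `run_counter_demo(4, 10000)` should return 40000, since every increment happens while the lock is held.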

This approach is better because the other threads attempt to write to the lock value (in the if statement) only after the lock has been released by the thread that was holding it. This reduces the overall number of writes (as @steliosr and @nickbowman mentioned) and is much better for cache coherence, since the cache line holding the lock value no longer bounces between threads' caches while one thread holds the lock the whole time.


Since the waiting threads are all reading the lock instead of attempting to write it, they can all share the cache line in the S (shared) state and avoid unnecessary cache coherence traffic on the bus.