Yes, reconfiguring and compiling FPGAs can take large amounts of time, and they are generally difficult to program because they require low-level hardware description languages like Verilog. Spatial can give developers more freedom to experiment, as it takes care of details like banking and buffering of memories, generating the exact hardware code, and providing statistics to help debug performance.

I'm still a little confused about why FPGA compilation takes so much time. What does compilation for an FPGA entail? What is the "bottleneck" for the compilation process?

I actually think the compilation time for an FPGA is reasonable: only a few hours. If you want to tape out an actual chip, you might wait months for manufacturing. An FPGA is a great option in between: it offers a not-too-long compilation time while still being a programmable device. @gpu Not exactly sure what is happening at the lower level, but I guess it's trying to implement the synthesized netlist that your Verilog code produced on the actual circuit with the same functionality. This is a really complicated netlist, and I think it makes sense that it takes a long time to map all the circuits onto the hardware.

@gpu I think the problem generally falls under the name Place and Route, or the Bin Packing problem, which is NP-hard.

The same problem exists in other kinds of hardware layout, like PCB design. From past experience, designing a PCB requires first taking a schematic, then placing all the components onto the board. The longest part is then routing all of the wires connecting the components so that no wires collide. Sometimes you need to move components around so the wires fit, and this can take weeks if you have a reasonably complex PCB to lay out by yourself. All of these same steps need to be done by the compiler that maps the HDL to the FPGA.
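To make the routing step a bit more concrete, here is a minimal sketch of a grid-based maze router using breadth-first search. It is only an illustration under simplifying assumptions: a single wire, one routing layer, and a tiny grid where `#` marks cells already occupied. The function name `route` and the grid encoding are made up for this example and are not what any real FPGA or PCB tool uses.

```python
from collections import deque

def route(grid, start, goal):
    """Find a shortest wire path on a grid with breadth-first search.

    grid  : list of equal-length strings; '#' marks an occupied cell (assumed encoding)
    start : (row, col) of the source pin
    goal  : (row, col) of the sink pin
    Returns the list of cells on the path, or None if the wire cannot be routed.
    """
    height, width = len(grid), len(grid[0])
    previous = {start: None}  # remembers how each cell was reached
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk backwards from the goal to recover the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = previous[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < height and 0 <= nc < width
            if inside and grid[nr][nc] != "#" and (nr, nc) not in previous:
                previous[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # no free path: a component would have to move

board = [".#.",
         ".#.",
         "..."]
path = route(board, (0, 0), (0, 2))
```

A real router has to do this for a very large number of wires that compete for the same limited routing resources, which is part of why the step is slow.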

I'm guessing randomized approximation algorithms might speed up layout, but they could come with some probability of issues such as clock skew or other hardware bugs.
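One randomized heuristic commonly used for placement is simulated annealing. Below is a toy sketch of the idea, assuming two-pin nets, a small rectangular grid of slots, and total Manhattan wirelength as the only cost. The function names, the cooling schedule, and the starting temperature are arbitrary choices for illustration, not taken from any particular tool.

```python
import math
import random

def wirelength(slots, nets):
    """Total Manhattan distance over all two-pin nets (block i sits at slots[i])."""
    total = 0
    for a, b in nets:
        (xa, ya), (xb, yb) = slots[a], slots[b]
        total += abs(xa - xb) + abs(ya - yb)
    return total

def anneal_placement(num_blocks, nets, grid_w, grid_h, steps=5000, seed=0):
    """Toy simulated-annealing placer; returns (initial_cost, best_cost, best_placement)."""
    rng = random.Random(seed)
    # Every grid slot appears once; the first num_blocks entries hold the blocks,
    # the remaining entries are empty slots a block may move into.
    slots = [(x, y) for x in range(grid_w) for y in range(grid_h)]
    rng.shuffle(slots)
    cost = wirelength(slots, nets)
    initial_cost = cost
    best_cost, best_slots = cost, list(slots)
    temperature = 10.0  # assumed starting temperature
    for _ in range(steps):
        i = rng.randrange(num_blocks)   # a block to move
        j = rng.randrange(len(slots))   # another block or an empty slot
        if i == j:
            continue
        slots[i], slots[j] = slots[j], slots[i]
        new_cost = wirelength(slots, nets)
        delta = new_cost - cost
        # Always accept improvements; accept worse moves with a probability
        # that shrinks as the temperature drops.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            cost = new_cost
            if cost < best_cost:
                best_cost, best_slots = cost, list(slots)
        else:
            slots[i], slots[j] = slots[j], slots[i]  # undo the move
        temperature = max(0.01, temperature * 0.999)  # assumed cooling schedule
    placement = {block: best_slots[block] for block in range(num_blocks)}
    return initial_cost, best_cost, placement

# A chain of 9 blocks on a 3x3 grid: block k is wired to block k + 1.
chain = [(k, k + 1) for k in range(8)]
initial_cost, best_cost, placement = anneal_placement(9, chain, 3, 3)
```

The result is a good placement rather than a provably optimal one, and a real tool would also have to account for timing and routability, not just wirelength.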

What is a good way to understand the performance improvement brought by FPGA?

When we write and compile code for an FPGA, is it actually modifying the underlying physical hardware structure, or is it still just a software instruction stream that specifies how to perform certain operations?

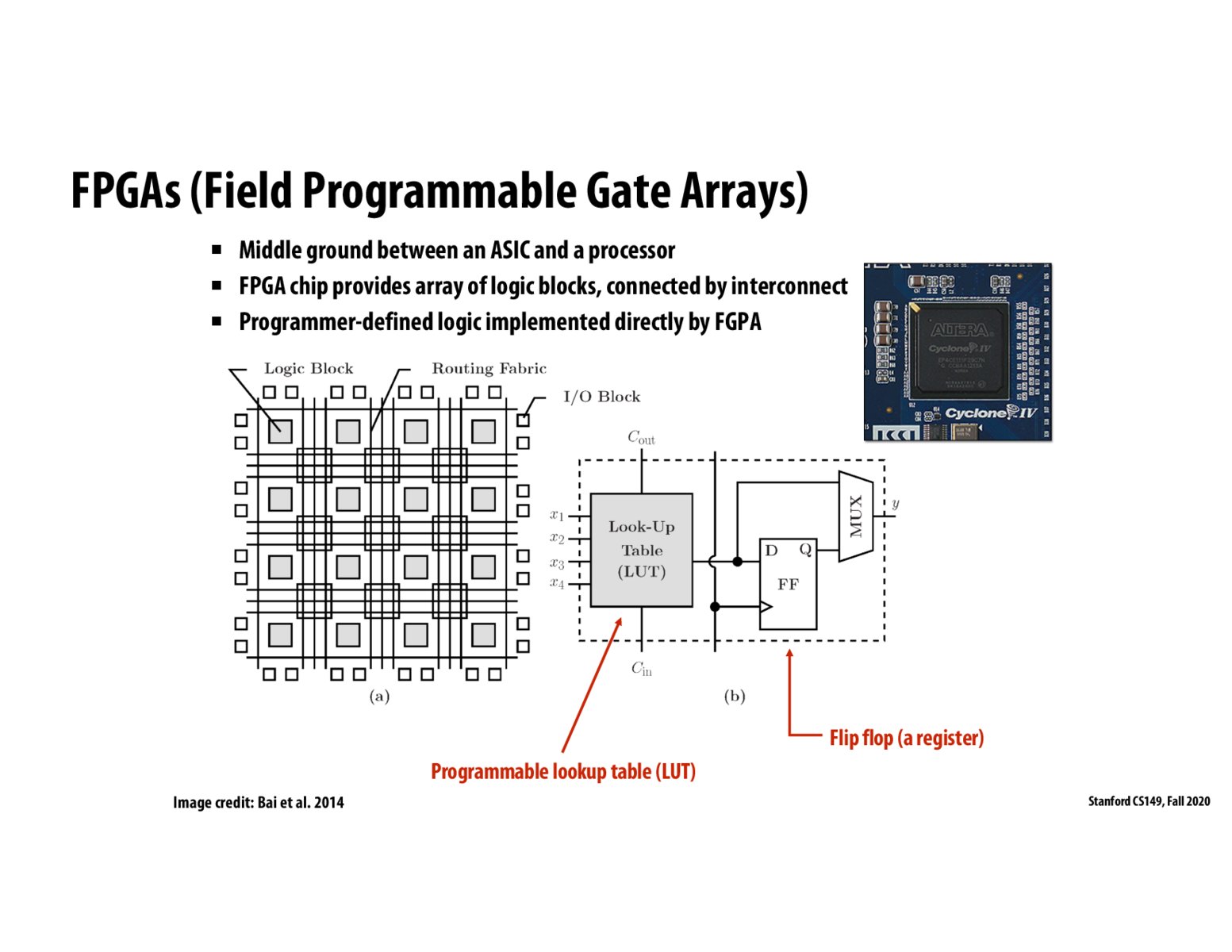

@wanze As @ChrisGabor stated, compiling is a place and route problem where the compiler determines the logic configuration needed to achieve the specified functionality without exceeding the limited number of gates in the FPGA. When we synthesize RTL for an FPGA, it actually modifies the hardware logic by reconfiguring how the gates are connected. It is almost like an ASIC, but not as fast, because we now have communication overhead due to the extra logic that's sitting around in the FPGA.
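One way to picture "reconfiguring the logic" is a lookup table (LUT), the basic logic element in most FPGAs: a few configuration bits decide which Boolean function the element computes. The sketch below models a 2-input LUT in software purely for illustration. Real devices use larger LUTs plus programmable routing between them, and the helper name `make_lut` is made up for this example.

```python
def make_lut(config_bits):
    """Model a 2-input lookup table.

    config_bits is a list of 4 bits: the output for inputs (a, b) = 00, 01, 10, 11.
    Loading different bits changes the function, with no instruction stream involved.
    """
    if len(config_bits) != 4:
        raise ValueError("a 2-input LUT needs exactly 4 configuration bits")

    def lut(a, b):
        # The inputs simply select one of the stored bits.
        return config_bits[(a << 1) | b]

    return lut

# The same "hardware" element, configured two different ways.
and_gate = make_lut([0, 0, 0, 1])
xor_gate = make_lut([0, 1, 1, 0])
```

In this picture, the output of compilation is essentially a large collection of such configuration bits for the logic elements and the connections between them, rather than a sequence of instructions to execute.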

@wanze One way of thinking about why an FPGA (or other specialized hardware) is preferable to something like a CPU is that, in practice, most workloads on a CPU spend an overwhelming amount of time on work that is not inherent to the problem (i.e., things like control flow), which can instead be baked into the hardware. CPUs are great because they can run a large variety of programs, but that generality comes at a cost (performance). This is very similar to the trade-off between DSLs and general-purpose languages.

FPGA compilation can take a considerable amount of time, as it has to deal with heterogeneous hardware resources, wiring, etc.