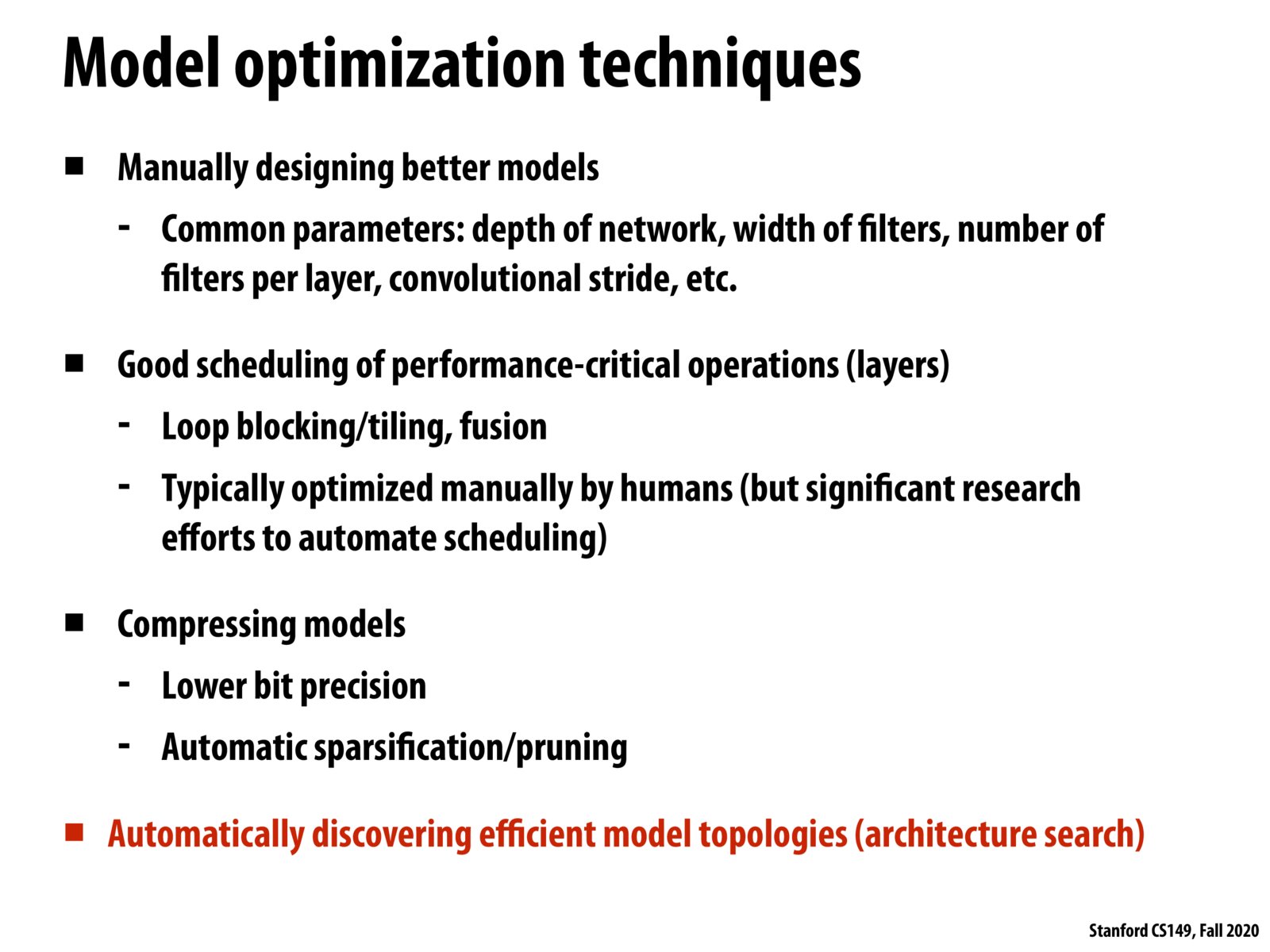

There are multiple dimensions along which DNNs can be optimized. Systems programmers may be interested in hand-optimizing code to evaluate layers more efficiently (e.g. through loop tiling, fusion, and SIMD utilization), as well as compressing data for energy efficiency while maintaining accurate results. ML programmers can design more efficient model architectures, and even automatically tune their hyperparameters to converge to an efficient solution. Finally, the hardware itself can be more tightly specialized to the domain - for example, NVIDIA designs cores (Tensor Cores) that are optimized for 4x4 matrix multiplies.
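To make the loop tiling idea concrete, here is a minimal sketch in plain Python. The function name `matmul_tiled` and the default tile size are assumptions chosen for illustration; a real implementation would be written in C/CUDA and pick the tile size to fit the cache or register file.

```python
def matmul_tiled(a, b, tile=4):
    """Multiply a (n x k) by b (k x m), both lists of lists, tile by tile."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    # The outer three loops walk over tiles; the inner three stay inside
    # one tile, so the same small blocks of a, b, and c are reused while
    # they are still "hot" (in cache, on real hardware).
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                for i in range(i0, min(i0 + tile, n)):
                    for kk in range(k0, min(k0 + tile, k)):
                        a_ik = a[i][kk]
                        for j in range(j0, min(j0 + tile, m)):
                            c[i][j] += a_ik * b[kk][j]
    return c
```

The result is the same as an untiled triple loop; only the order of the work changes, which is what improves locality.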

Various frameworks have been developed for architecture search. AutoML is one example; it provides automatic architecture search and hyperparameter tuning.
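As a rough illustration of what automatic hyperparameter tuning does at its simplest, here is a random search sketch. This is not the AutoML API: `validation_loss` is a hypothetical stand-in for "train a model with this configuration and measure validation loss", and the search space (learning rate and layer width) is an assumption made up for the example.

```python
import math
import random

def validation_loss(lr, width):
    # Hypothetical objective: pretend the best setting is lr=1e-2, width=64.
    # In practice this would train a network and return its validation loss.
    return (math.log10(lr) + 2) ** 2 + ((width - 64) / 64) ** 2

def random_search(trials=50, seed=0):
    """Sample configurations at random and keep the one with the lowest loss."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        config = {
            "lr": 10 ** rng.uniform(-4, 0),           # log-uniform in [1e-4, 1]
            "width": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = validation_loss(**config)
        if best is None or loss < best[0]:
            best = (loss, config)
    return best

best_loss, best_config = random_search()
```

Real systems replace the random sampling with smarter strategies (e.g. Bayesian optimization or a learned controller), but the loop of propose, evaluate, and keep the best is the same.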


Learning to learn is a related effort that tries to automate parts of model design that are usually done by hand. One famous paper (with a nice name as well) is "Learning to learn by gradient descent by gradient descent", which learns the optimizer itself rather than hand-designing it: https://arxiv.org/abs/1606.04474