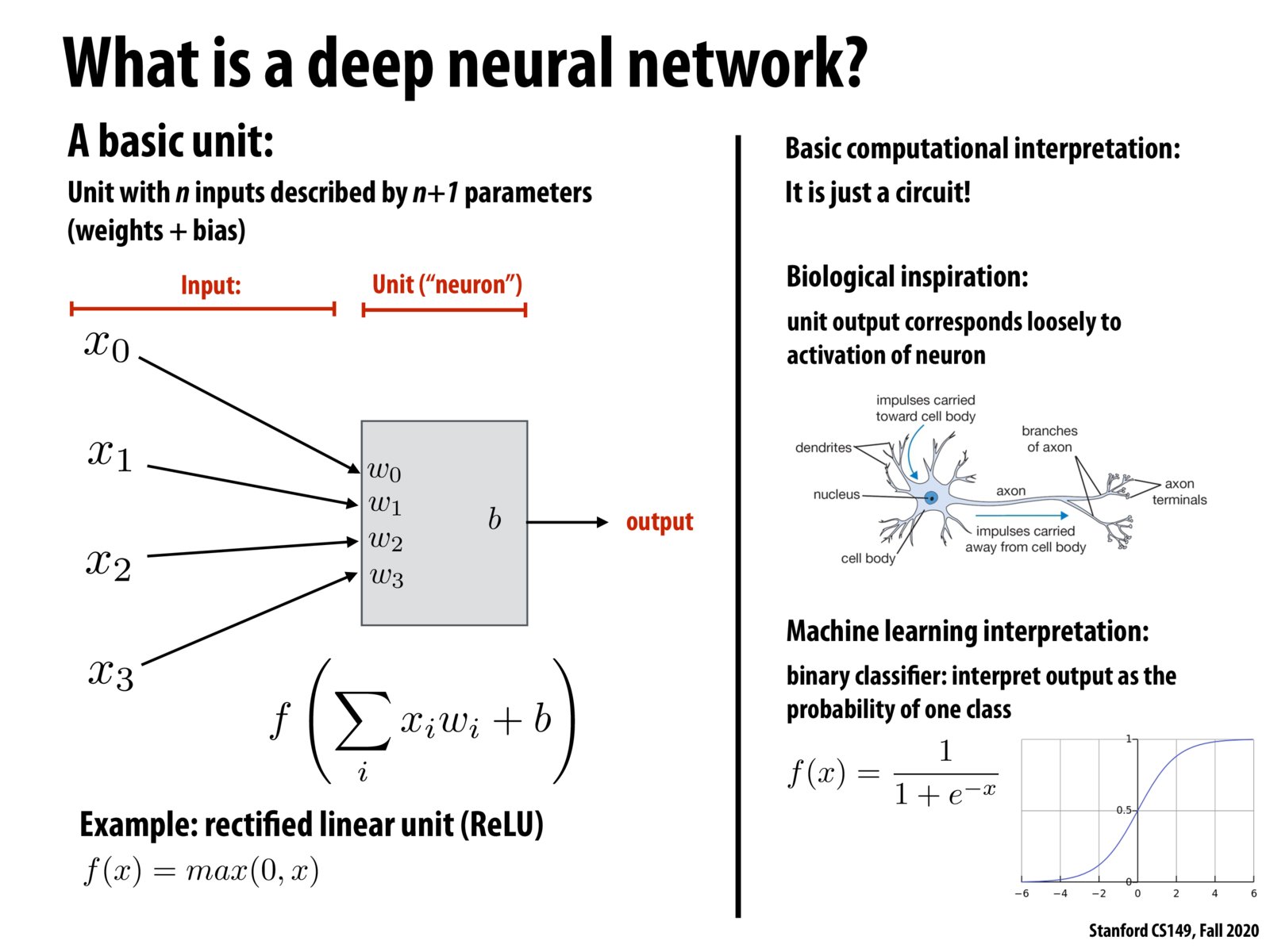

The basic building block of a neural network computes the dot product of the inputs (xi) and the weights (wi) and adds a bias term (b). The result is then fed into an activation function (f), which is a nonlinear function; ReLU is one example. By composing these basic building blocks, we can build a deep neural network (DNN).
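For anyone who prefers to see it in code, here is a minimal sketch of a single neuron, y = f(Σ wi·xi + b), with ReLU as f. It uses plain Python lists for illustration. The names `neuron` and `relu` and the example numbers are just made up for this sketch; real frameworks do the same arithmetic on whole tensors at once.

```python
from typing import Callable, Sequence


def relu(z: float) -> float:
    """ReLU: 0 for negative inputs, otherwise the input itself."""
    return max(0.0, z)


def neuron(
    x: Sequence[float],
    w: Sequence[float],
    b: float,
    f: Callable[[float], float] = relu,
) -> float:
    """One neuron: dot product of inputs and weights, plus bias, then activation."""
    if len(x) != len(w):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b  # weighted sum + bias
    return f(z)  # nonlinear activation


x = [1.0, 2.0, 3.0]
w = [0.5, -1.0, 0.25]
print(neuron(x, w, b=1.0))  # 0.5 - 2.0 + 0.75 + 1.0 = 0.25, ReLU keeps it
print(neuron(x, w, b=0.5))  # weighted sum is -0.25, ReLU clips it to 0.0
```

Without the nonlinear f, stacking many of these would collapse into a single linear function, which is why the activation matters.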

I wonder whether the existence of GPUs and knowledge of parallel computing played any role in the development of deep learning - for example, were any researchers motivated to construct neural networks in such a way that the computations could be easily parallelized?

Just a random question: I've always wondered what makes a network "deep". Is there a definition?

@orz I think what makes it deep is that the network sort of generates its own understanding of the problem as it refines the weights.

@felixw17, the idea of an artificial neural network, including the concept of having multiple hidden layers, was proposed independently of GPUs and parallel computing. However, the huge efficiency gains from GPUs and parallel systems let researchers run bigger and deeper networks that they could only fantasize about in the past, and this enabled tremendous advances in deep learning over the past 15-20 years. Many of today's innovations in deep learning wouldn't have been possible without these systems. For example, you could never run really deep networks with billions of parameters without GPUs or clusters of parallel machines, so many ML papers and many great architecture designs (ones that ultimately reached state-of-the-art accuracy on fundamental benchmarks like image recognition) would never have happened! If you take an ML class, you might hear Andrew Ng cite the rise of compute as one of the main reasons behind the success of deep learning today.

@orz, "deep" simply refers to a network having many layers (anything more than a couple?). Having many layers makes the network more expressive and enables it to learn more complex representations. Almost all of the cool AI results of the past decade or more have come from deep networks.
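To make "many layers" concrete, here is a rough sketch of a forward pass through a stack of fully connected layers, where depth is simply the number of layers in the list. Everything here is illustrative and assumed: the helper names, the toy weights, and applying ReLU after every layer (real networks often leave the last layer linear).

```python
from typing import List, Sequence, Tuple

# A layer is (W, b): one row of W and one entry of b per neuron.
Layer = Tuple[List[List[float]], List[float]]


def relu(z: float) -> float:
    return max(0.0, z)


def dense_layer(x: Sequence[float], W: List[List[float]], b: List[float]) -> List[float]:
    """Apply every neuron in the layer to the same input vector x."""
    return [relu(sum(w_i * x_i for w_i, x_i in zip(row, x)) + b_j) for row, b_j in zip(W, b)]


def forward(x: Sequence[float], layers: List[Layer]) -> List[float]:
    """Feed the output of each layer into the next one."""
    h = list(x)
    for W, b in layers:
        h = dense_layer(h, W, b)
    return h


# Hypothetical toy network: 2 inputs -> 2 -> 2 -> 1 output, i.e. 3 layers deep.
layers: List[Layer] = [
    ([[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0]),
    ([[1.0, 0.5], [-1.0, 2.0]], [0.5, 0.0]),
    ([[1.0, -1.0]], [3.0]),
]
print(len(layers), forward([1.0, 2.0], layers))  # 3 [0.5]
```

Each layer's neurons are independent of one another given the previous layer's output, which is one reason this kind of computation maps well onto GPUs.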


For all the other people who haven't taken an ML class!

activation function: a function that decides the output of a node based on its inputs (in the basic building block, based on the weighted sum plus bias)

ReLU definition: the most common activation function in deep learning models. It simply returns 0 if the input is negative and the input itself if the input is positive, i.e. ReLU(x) = max(0, x)!