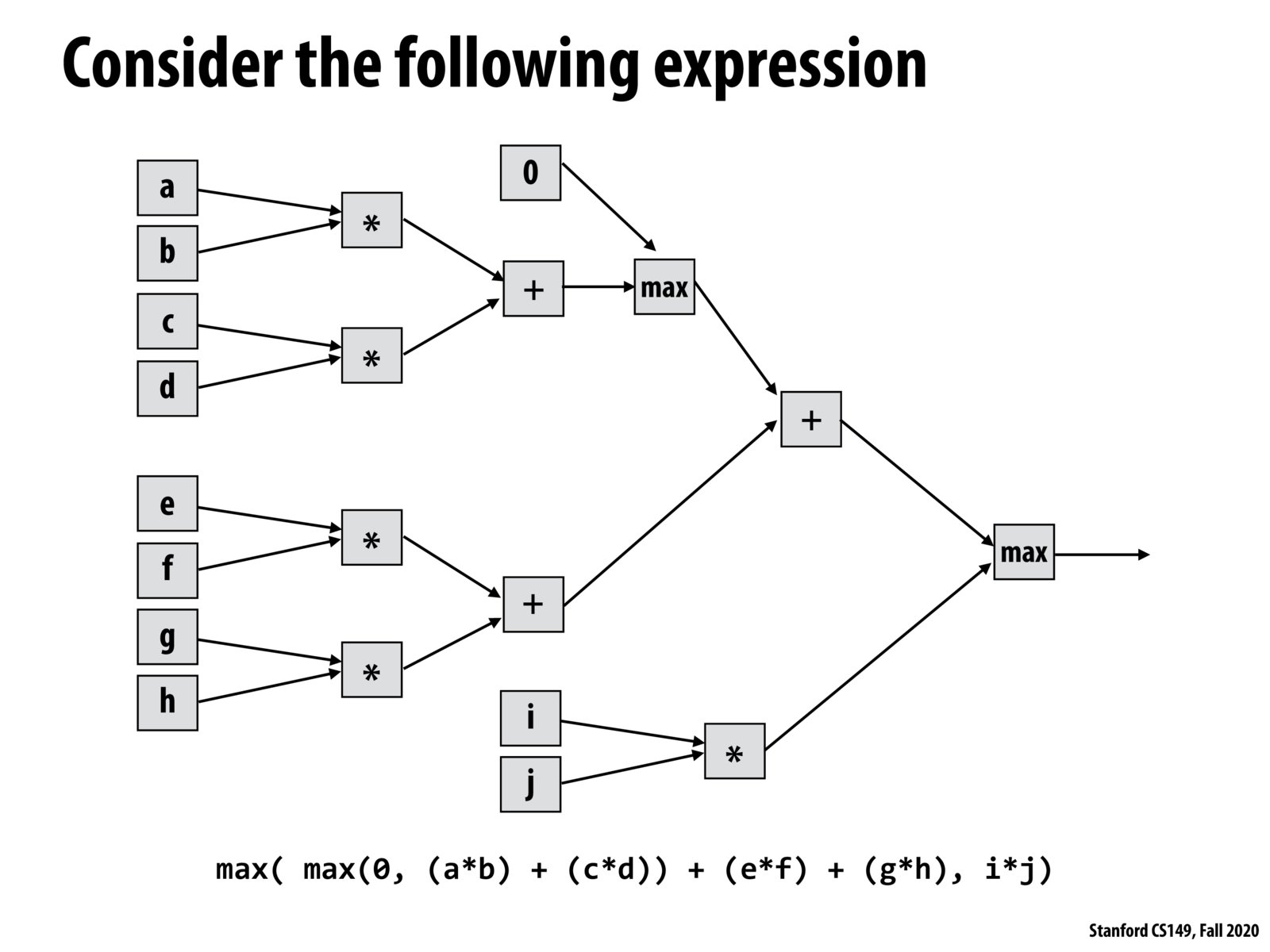

The computation graph is built according to the structure of the model. Each internal node represents an operation, while the leaves are the inputs to the model (the data, weights, and biases). It is often said that gradients "flow" through the graph, which lets the model make small adjustments to the weights and biases that improve its predictions. Computing the gradient of the final output (in training, the loss) with respect to every input at once seems like a difficult task, but the computation graph breaks the procedure down into self-contained steps at each node. Each node receives the gradient with respect to its own output, multiplies it by the local derivative of its operation (the chain rule), and passes the result to its inputs. Those inputs do the same in turn until the gradients reach the leaves of the graph. The update step can then be performed on the weights and biases. This is how deep learning frameworks like TensorFlow and PyTorch perform backpropagation efficiently.
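To make the routing concrete, here is a minimal sketch in plain Python. The `Node` class and `backward` function are hypothetical names made up for this illustration. They are not the internals of TensorFlow or PyTorch, and only addition and multiplication are supported.

```python
class Node:
    """A value in the computation graph, plus the gradient that reaches it."""

    def __init__(self, value, parents=(), local_grads=()):
        self.value = value
        self.parents = parents          # input nodes of the operation
        self.local_grads = local_grads  # d(self)/d(parent), one per parent
        self.grad = 0.0                 # d(output)/d(self), set by backward()

    def __add__(self, other):
        # Addition passes the incoming gradient unchanged to both inputs.
        return Node(self.value + other.value, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        # Multiplication scales the gradient by the value of the other input.
        return Node(self.value * other.value, (self, other),
                    (other.value, self.value))


def backward(output):
    """Route gradients from the output node down to the leaves."""
    order, seen = [], set()

    def visit(node):
        # Depth-first search that records nodes in topological order.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0  # d(output)/d(output)
    # Walk from the output towards the leaves, applying the chain rule.
    for node in reversed(order):
        for parent, local in zip(node.parents, node.local_grads):
            parent.grad += node.grad * local


# Leaves: one input, one weight, one bias.
x, w, b = Node(2.0), Node(3.0), Node(1.0)
y = w * x + b          # forward pass builds the graph
backward(y)            # backward pass fills in .grad on every node

print(y.value)         # 7.0
print(w.grad, x.grad, b.grad)  # 2.0 3.0 1.0
```

With the gradients stored on the leaves, an update step would be something like `w.value -= learning_rate * w.grad`, where `learning_rate` is a value you choose. In real training, `y` would first be fed into a loss, and `backward` would be called on the loss node.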

There is a very good description of this process here: https://cs231n.github.io/optimization-2/

I wonder if things could be made more efficient by turning the floating-point multiplications into fixed-point integer multiplications?


The computation graph is one of the fundamentals of deep learning computation, for both forward propagation and backward propagation. It also enables automatic gradient computation, which is essential for training through backpropagation.