@zecheng, it makes the quick turnaround of loading and then storing a variable faster. Per the previous slide, the main motivation for MESI is to reduce the overhead of a common operation pattern: load, modify data, store modified data. So, I think your intuition is correct: it's streamlining the operation pattern where a single core is making a quick change to some data.

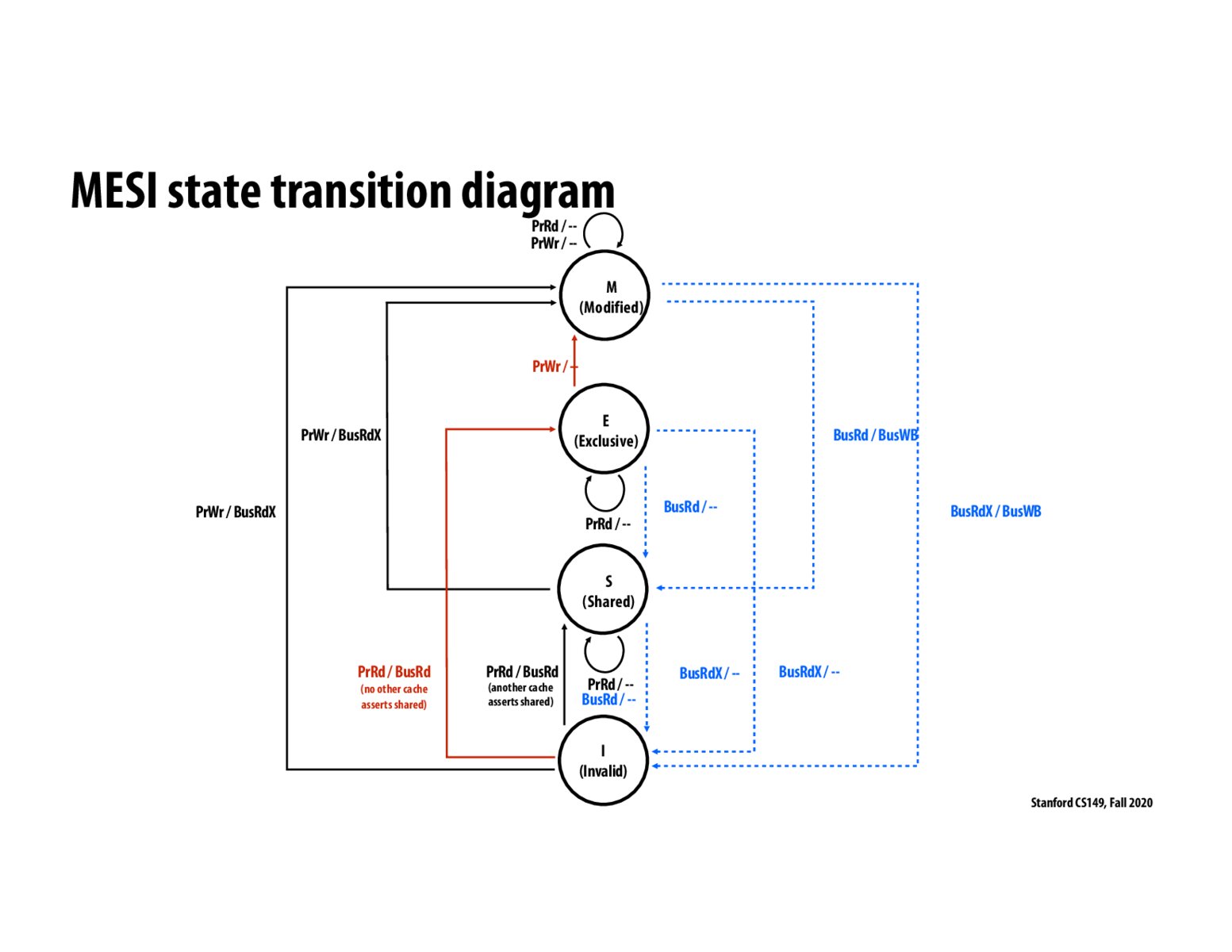

As the diagram shows, once in the M state, reads and writes are hits, and cause no state transitions or communication on the bus. This is convenient, since we wouldn't want repeated reads and writes on the same core to cause unnecessary communication overhead as well as traffic on the bus. The only reason to communicate further on the bus about the particular cache line would be another core trying to read/write it, which may or may not happen.

If two processors had been sharing a value, but one is now done, is there a way for it to let the other know it is done, so that the other can use the exclusive state to edit the value?

This diagram shows what happens in just one processor, correct?

@mkarra -- correct. You can think of this diagram as defining a state machine for one cache line in one processor's cache. Events that trigger changes in state are either LD/ST operations by the local processor (black and red lines), or coherence messages from other processors (blue lines).
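Here is a minimal Python sketch of that per-line state machine, assuming the standard textbook MESI transitions (the slide's diagram is the authority here). The names `on_local_op`, `on_snoop`, and `others_have_copy` are made up for illustration, and a real controller might send an upgrade message instead of `BusRdX` on a store to a shared line.

```python
from enum import Enum


class State(Enum):
    M = "Modified"
    E = "Exclusive"
    S = "Shared"
    I = "Invalid"


def on_local_op(state, op, others_have_copy=False):
    """Local LD/ST on one cache line. Returns (next_state, bus_message or None)."""
    if op not in ("LD", "ST"):
        raise ValueError(f"unknown operation: {op}")
    if state is State.I:
        if op == "LD":
            # Read miss: E if no other cache asserts "shared", otherwise S.
            return (State.S if others_have_copy else State.E, "BusRd")
        return (State.M, "BusRdX")  # write miss
    if op == "LD":
        return (state, None)  # read hit in M, E, or S: no bus traffic
    if state is State.S:
        return (State.M, "BusRdX")  # others must drop their copies first
    return (State.M, None)  # E -> M and M -> M are silent


def on_snoop(state, msg):
    """Coherence message from another cache. Returns (next_state, must_flush)."""
    if msg not in ("BusRd", "BusRdX"):
        raise ValueError(f"unknown bus message: {msg}")
    if state is State.I:
        return (State.I, False)
    must_flush = state is State.M  # only a dirty line has to be written back
    return (State.S if msg == "BusRd" else State.I, must_flush)
```

For example, `on_local_op(State.E, "ST")` gives `(State.M, None)`: the load-then-store pattern on a private line finishes without a second bus transaction, which is the case the E state is meant to speed up.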

How does a cache controller know that no other cache would assert shared on a line without doing a bus transaction? Does BusRd do this automatically, in case the line is in the modified state somewhere else? In that case, does this also happen in MSI?

@ishangaur. You are asking about the implementation of a shared bus, which is outside the scope of this course. However, here's a 30-second version: You can think of a shared bus as a collection of wires connecting all processors sharing the bus. For example, a 16-bit bus would have 16 lines for data. There are additional bus lines for bus requests, and for reporting snooping results. So imagine a single line connecting all processors that is the AND of a "snoop done" line from each processor. Processors set their "snoop done" line to true when they've completed the snoop, and when the overall AND of these results is true, all caches know that all others have completed their snoop.

Therefore, when a cache "shouts out" BusRdX on the bus, it needs to wait for the snoop done line to become true before continuing. Only at this point is it guaranteed that all other caches have performed the required actions, i.e., in this case, dropping the line.
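A toy Python model of that wired-AND "snoop done" line, just to make the waiting concrete. The function name and the per-cache latencies are hypothetical, and real bus hardware is considerably more involved than this.

```python
def cycles_until_snoop_done(snoop_latencies):
    """Count cycles until the shared "snoop done" line reads true.

    snoop_latencies[i] is the (hypothetical) number of cycles cache i
    needs before it raises its own "snoop done" line.
    """
    cycle = 0
    while True:
        # Each cache drives its own line; the shared line is the AND of all.
        done_lines = [cycle >= latency for latency in snoop_latencies]
        if all(done_lines):
            return cycle
        cycle += 1
```

With latencies `[1, 3, 2]` the requester waits 3 cycles: the shared line only goes true once the slowest cache has finished its snoop.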

So "Exclusive" just makes single-core caching usage easier and faster?