It was also mentioned later in the lecture that different bus arbitration policies can be used to manage access to the bus "fairly," so that a flood of messages from one processor doesn't starve other processors that also need to perform memory operations.
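To make the fairness point concrete, here is a minimal sketch of one common fair policy, round-robin arbitration, contrasted with a naive fixed-priority scheme. The class and function names are hypothetical, and this is a software toy that assumes each processor raises a simple "request" flag every cycle. Real arbiters are hardware circuits, and the lecture did not say which policy a given machine uses.

```python
from typing import Optional, Sequence


def fixed_priority_grant(requests: Sequence[bool]) -> Optional[int]:
    """Hypothetical naive arbiter: the lowest-numbered requester always wins."""
    for proc, wants_bus in enumerate(requests):
        if wants_bus:
            return proc
    return None


class RoundRobinArbiter:
    """Hypothetical round-robin arbiter (toy model, not a real hardware API).

    Priority rotates: the processor just after the most recent winner is
    checked first, so a processor that requests every cycle cannot starve others.
    """

    def __init__(self, num_procs: int) -> None:
        self.num_procs = num_procs
        self.last_grant = num_procs - 1  # processor 0 gets first priority initially

    def grant(self, requests: Sequence[bool]) -> Optional[int]:
        # Scan starting just after the last winner, wrapping around.
        for offset in range(1, self.num_procs + 1):
            candidate = (self.last_grant + offset) % self.num_procs
            if requests[candidate]:
                self.last_grant = candidate
                return candidate
        return None  # nobody requested the bus this cycle


# All three processors request the bus on every cycle.
always = [True, True, True]
fixed = [fixed_priority_grant(always) for _ in range(6)]
arbiter = RoundRobinArbiter(3)
fair = [arbiter.grant(always) for _ in range(6)]
print(fixed)  # [0, 0, 0, 0, 0, 0]  -> processors 1 and 2 starve
print(fair)   # [0, 1, 2, 0, 1, 2]  -> everyone gets a turn
```

Under fixed priority, processor 0 wins every cycle and the others never get the bus. Rotating priority bounds how long any requester waits (at most one full rotation here).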

^ That sounds pretty interesting. Are we going to learn more about cache management in this class, or should we go looking for more info about this on our own?

@nickbowman Does this locking of the bus happen on every read/write operation, even for different memory addresses? Or is the bus somehow able to distinguish between memory addresses that would map to different cache lines and lock only for conflicting memory addresses?


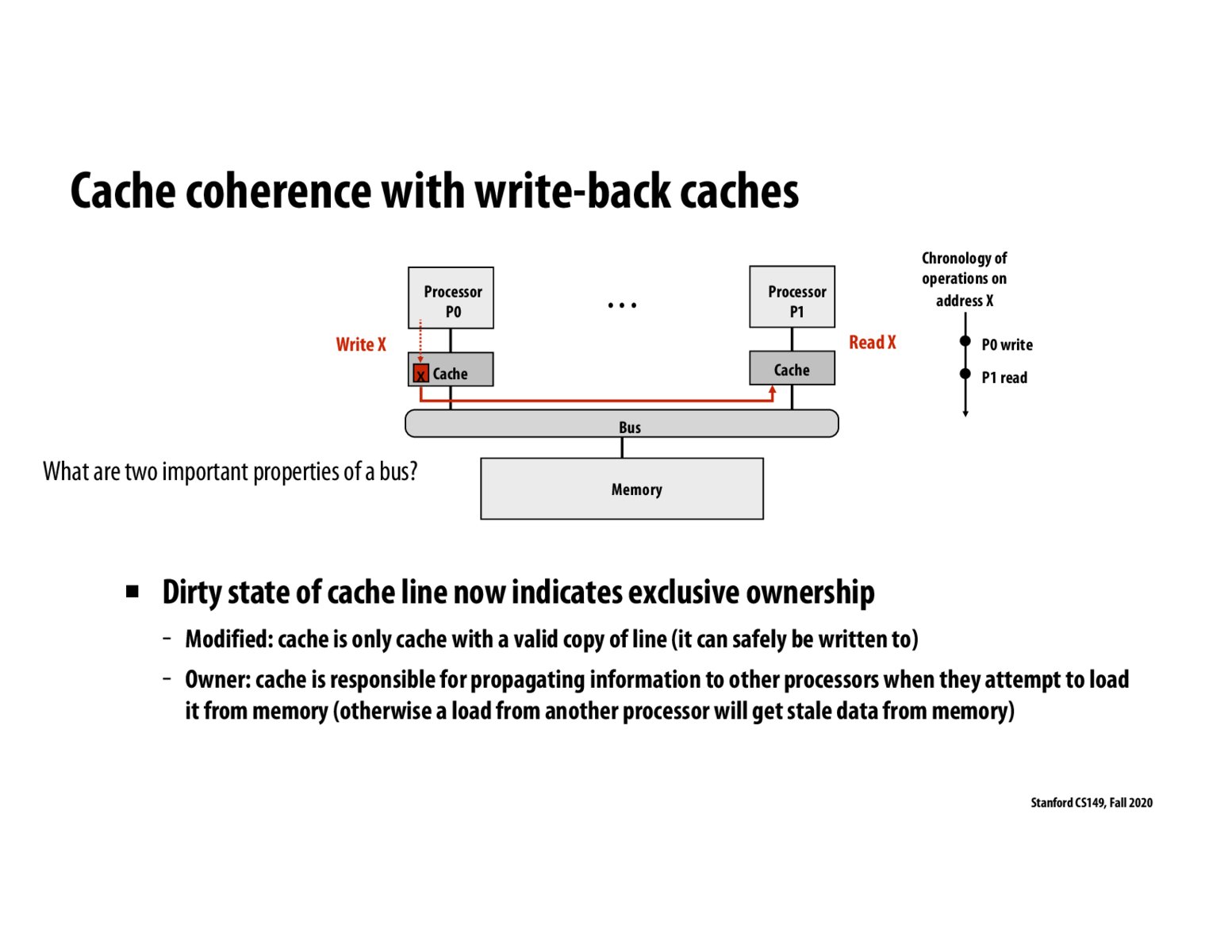

One of the important properties of a bus in this situation is that two processors must not be able to write at the same time (to uphold the single-writer, multiple-reader invariant). In this way, the bus serializes all transactions (reads and writes) across all processors. The bus arbiter effectively "locks" the bus and allows only one cache controller to send messages at a time, so every cache/processor observes the messages in the same order.
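A rough way to picture this serialization is a single lock standing in for the arbiter: whoever holds it broadcasts one transaction, and every snooping cache records that transaction before anyone else can send. The sketch below is a hypothetical software model (the `SharedBus` class, `broadcast` method, and transaction labels are illustrative assumptions, not a real interface), built on Python's `threading.Lock`. Note that it takes the lock for every transaction regardless of address, which is the simple atomic-bus picture; it doesn't model split-transaction or pipelined buses.

```python
import threading


class SharedBus:
    """Hypothetical toy model of an atomic shared bus.

    The lock plays the role of the arbiter: only one cache controller can
    broadcast at a time, so all transactions fall into one global order.
    """

    def __init__(self, num_caches: int) -> None:
        self._lock = threading.Lock()
        self.order = []                                 # global serialized order
        self.snooped = [[] for _ in range(num_caches)]  # what each cache observed

    def broadcast(self, sender: int, op: str, addr: int) -> None:
        with self._lock:  # "win arbitration": acquire exclusive use of the bus
            msg = (sender, op, addr)
            self.order.append(msg)
            for log in self.snooped:  # every cache snoops the same message
                log.append(msg)
        # Leaving the with-block releases the bus for the next requester.


def controller(bus: SharedBus, cache_id: int, n_ops: int) -> None:
    # Each hypothetical cache controller issues a mix of read / read-exclusive requests.
    for i in range(n_ops):
        op = "BusRdX" if i % 2 else "BusRd"
        bus.broadcast(cache_id, op, 0x40 * i)


bus = SharedBus(num_caches=4)
threads = [threading.Thread(target=controller, args=(bus, c, 100)) for c in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Interleaving across controllers varies run to run, but every cache
# always sees exactly the same sequence.
print(len(bus.order))                              # 400
print(all(log == bus.order for log in bus.snooped))  # True
```

The interleaving between controllers is nondeterministic, but because each broadcast happens entirely inside the lock, no cache can ever observe two transactions in a different order than another cache does.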