@ishanguar, I think that's exactly correct. This is a downside of the static rule that always lets the committing transaction take precedence. You could imagine adding some notion of fairness by overriding this heuristic once a transaction has already lost out (been aborted) more than N times, for some threshold N.
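As a rough sketch of that fairness override, here is a toy conflict-resolution policy. The `Txn` record, `resolve_conflict`, `on_commit`, and `starvation_threshold` are all hypothetical names for illustration, not part of any real TM runtime. By default the committer wins, but a transaction that has already been aborted more than the threshold number of times wins instead.

```python
from dataclasses import dataclass


@dataclass
class Txn:
    """Hypothetical transaction record holding only what the policy needs."""
    tid: int
    aborts: int = 0  # how many times this transaction has lost a conflict


def resolve_conflict(committer: Txn, other: Txn, starvation_threshold: int) -> Txn:
    """Return the winner of a conflict between a committing txn and another txn.

    Static rule: the committing transaction wins.
    Fairness override (assumed policy): if the other transaction has already
    been aborted more than starvation_threshold times, it wins instead.
    """
    if other.aborts > starvation_threshold:
        winner, loser = other, committer
    else:
        winner, loser = committer, other
    loser.aborts += 1  # the loser is aborted and will retry
    return winner


def on_commit(txn: Txn) -> None:
    """Reset the abort count once a transaction finally commits."""
    txn.aborts = 0
```

The threshold trades throughput for fairness: a low value protects long transactions from starvation sooner, at the cost of aborting more committers that would otherwise have succeeded.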

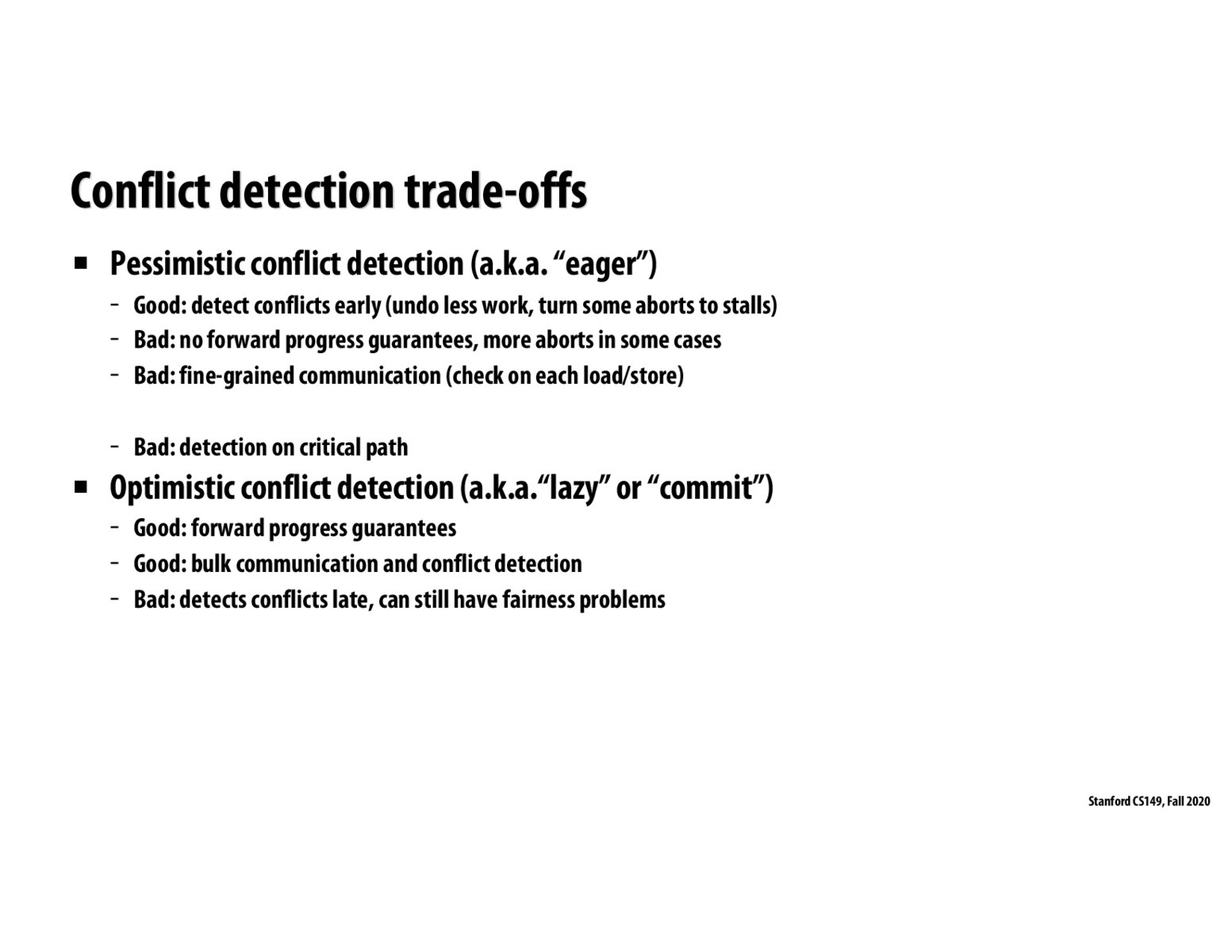

Building on @ishanguar's observation, I wonder whether compilers could choose the best conflict detection mechanism based on the size of the transaction. For instance, if a transaction touches a large portion of memory, or many threads are reading and writing the same set of addresses, the compiler could opt for pessimistic detection, since conflicts are more likely. Similarly, when transactions are small or run on few threads, it could use optimistic conflict detection.
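A minimal sketch of the heuristic described above, assuming the compiler (or runtime) has some estimate of the transaction's footprint and of how many threads run it. The function name `choose_detection` and both threshold defaults are hypothetical placeholders, not tuned values. Pessimistic detection here means checking for conflicts at each access; optimistic means checking only at commit time.

```python
from enum import Enum


class Detection(Enum):
    PESSIMISTIC = "check for conflicts on every load/store"
    OPTIMISTIC = "check for conflicts only at commit"


def choose_detection(footprint_words: int, threads: int,
                     footprint_limit: int = 64, thread_limit: int = 4) -> Detection:
    """Pick a conflict-detection strategy from (assumed) static estimates.

    Large footprints or many threads make conflicts likelier, so detect them
    early; otherwise defer the check to commit time.
    """
    if footprint_words > footprint_limit or threads > thread_limit:
        return Detection.PESSIMISTIC
    return Detection.OPTIMISTIC
```

In practice a compiler can rarely know the footprint or thread count exactly, so these inputs would have to come from static analysis or profiling rather than being known facts.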

I was curious whether one of the two is generally better in some standardized setting, and found an interesting paper: https://www.cs.rochester.edu/u/scott/papers/2006_DISC_conflict.pdf. It seems that, as mentioned above, some heuristics can help performance, and, since each strategy is fixed statically, the results vary with the type of workload used for testing. I wasn't able to fully understand the paper, but the results look interesting.


Can fairness problems arise in optimistic detection because of transaction size? That is, are transactions that touch many areas of memory or take a long time to complete simply more likely to conflict with other transactions that commit during their execution or conflict-checking phase?
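One way to see why the answer is likely yes is a toy probability model. Assume (purely for illustration) that the transaction's read/write footprint covers `footprint` out of `memory_size` equally likely addresses, and that `commits_during_run` other transactions commit while it runs, each writing one address chosen uniformly and independently. Under optimistic detection it aborts if any of those writes lands in its footprint, so P(abort) = 1 - (1 - footprint/memory_size)^commits_during_run. Both a larger footprint and a longer run (more concurrent commits) push this toward 1.

```python
def abort_probability(footprint: int, memory_size: int, commits_during_run: int) -> float:
    """Probability that at least one concurrent commit writes into our footprint.

    Toy model (assumption): each concurrent commit writes exactly one address,
    chosen uniformly and independently from memory_size addresses.
    """
    p_miss = 1 - footprint / memory_size  # a single commit misses our footprint
    return 1 - p_miss ** commits_during_run


small = abort_probability(footprint=10, memory_size=1000, commits_during_run=5)
large = abort_probability(footprint=100, memory_size=1000, commits_during_run=50)
```

Here the small, short transaction aborts with probability of roughly 4.9%, while the large, long one aborts over 99% of the time, and it faces the same odds again on every retry.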