As @nickbowman mentioned, network bandwidth these days is similar to that of local storage (SSD). Therefore, the bandwidth available for reading another node's memory over the network is roughly equal to the bandwidth of local storage. And because memory has the highest bandwidth of any of these components, the computation would be faster if all the data were stored in memory.

Even though bandwidth is about the same, wouldn't latency increase by a lot if we use data stored in memory on other machines as opposed to local disk? I suppose that in this scenario, running multiple threads in parallel would hide any latency incurred by needing to fetch data from other nodes in the cluster.

@tspint Yes, I think you have it right on! Due to having to travel across the network, latency to access data on other machines would definitely be higher than accessing locally, even if the bandwidth is the same. However, as you mentioned, we know a variety of parallelism techniques for hiding memory latency, so it would be easier to work around high latency than low bandwidth.
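
To make the latency-hiding point concrete, here is a rough back-of-the-envelope sketch. It is not from the lecture: the function name `fetch_time` and all the numbers are hypothetical placeholders, and the model is a simple steady-state estimate based on Little's law that ignores queuing and startup effects.

```python
def fetch_time(n_requests, latency_s, transfer_s, in_flight):
    """Rough steady-state estimate of the time to finish n_requests remote fetches.

    latency_s:  fixed round-trip delay per request (assumed value)
    transfer_s: time the link spends moving one payload (size / bandwidth)
    in_flight:  number of requests kept outstanding at once (e.g. one per thread)
    """
    # Little's law: with `in_flight` requests outstanding, at most
    # in_flight / (latency + transfer) requests can complete per second.
    latency_bound = in_flight / (latency_s + transfer_s)
    # The link itself can deliver at most one payload every transfer_s seconds.
    bandwidth_bound = 1.0 / transfer_s
    throughput = min(latency_bound, bandwidth_bound)
    return n_requests / throughput


if __name__ == "__main__":
    # Placeholder numbers: 100 us network latency, 10 us to transfer one block.
    for threads in (1, 2, 4, 16, 64):
        t = fetch_time(1000, latency_s=100e-6, transfer_s=10e-6, in_flight=threads)
        print(f"{threads:3d} requests in flight: {t * 1e3:.2f} ms")
```

Under these assumptions, adding more outstanding requests keeps shrinking the total time until the link is saturated; after that, only more bandwidth helps. That is the sense in which high latency is easier to work around than low bandwidth.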

I wonder if network bandwidth depends on the kind of storage you are accessing. For instance, would it be possible to read from another computer's memory over the network faster than you can read from that computer's disk? Or perhaps even faster than you can read from your own disk? It seems like you could never read faster from another computer's disk over the network than you could from your own disk, but it is not clear to me why that limitation would exist for memory as well!

If you compare the same storage type on different computers, reading from your own computer would of course be faster than reading from the other one: the time to read from memory/disk is the same on both computers, but for the remote one you then add the network latency to get the data to your computer. However, if you compare reading from your own disk vs. reading from another computer's memory, the latter could be faster! The data could already be residing in the other computer's memory, so that reading it takes (memory latency + network latency) < your own disk latency.
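
Here is a small sketch of that comparison, in case it helps. The `DataPath` class, the `chain` helper, and every number in it are my own illustrative assumptions, not specs from the slide; swap in the real figures for your hardware. It models a read as a fixed latency plus size divided by bandwidth, and a remote read as the hops chained together (latencies add, the slowest hop sets the bandwidth).

```python
from dataclasses import dataclass


@dataclass
class DataPath:
    name: str
    latency_s: float       # fixed cost before the first byte arrives
    bandwidth_bps: float   # sustained bytes per second

    def read_time(self, n_bytes):
        """Estimated seconds to read n_bytes: latency + size / bandwidth."""
        return self.latency_s + n_bytes / self.bandwidth_bps


def chain(name, *hops):
    """Combine hops in series: latencies add, the slowest hop limits bandwidth."""
    return DataPath(
        name,
        latency_s=sum(h.latency_s for h in hops),
        bandwidth_bps=min(h.bandwidth_bps for h in hops),
    )


# Placeholder figures only (assumptions, not measurements).
memory = DataPath("local memory", latency_s=100e-9, bandwidth_bps=20e9)
disk = DataPath("local disk", latency_s=5e-3, bandwidth_bps=100e6)
network = DataPath("network", latency_s=100e-6, bandwidth_bps=1e9)

remote_memory = chain("remote memory", memory, network)
remote_disk = chain("remote disk", disk, network)

if __name__ == "__main__":
    for path in (memory, remote_memory, disk, remote_disk):
        print(f"{path.name:14s} {path.read_time(4096) * 1e6:10.1f} us for 4 KiB")
```

With these placeholder values, the same storage type is always slower remotely than locally, but remote memory still beats the local disk, which matches the inequality above.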


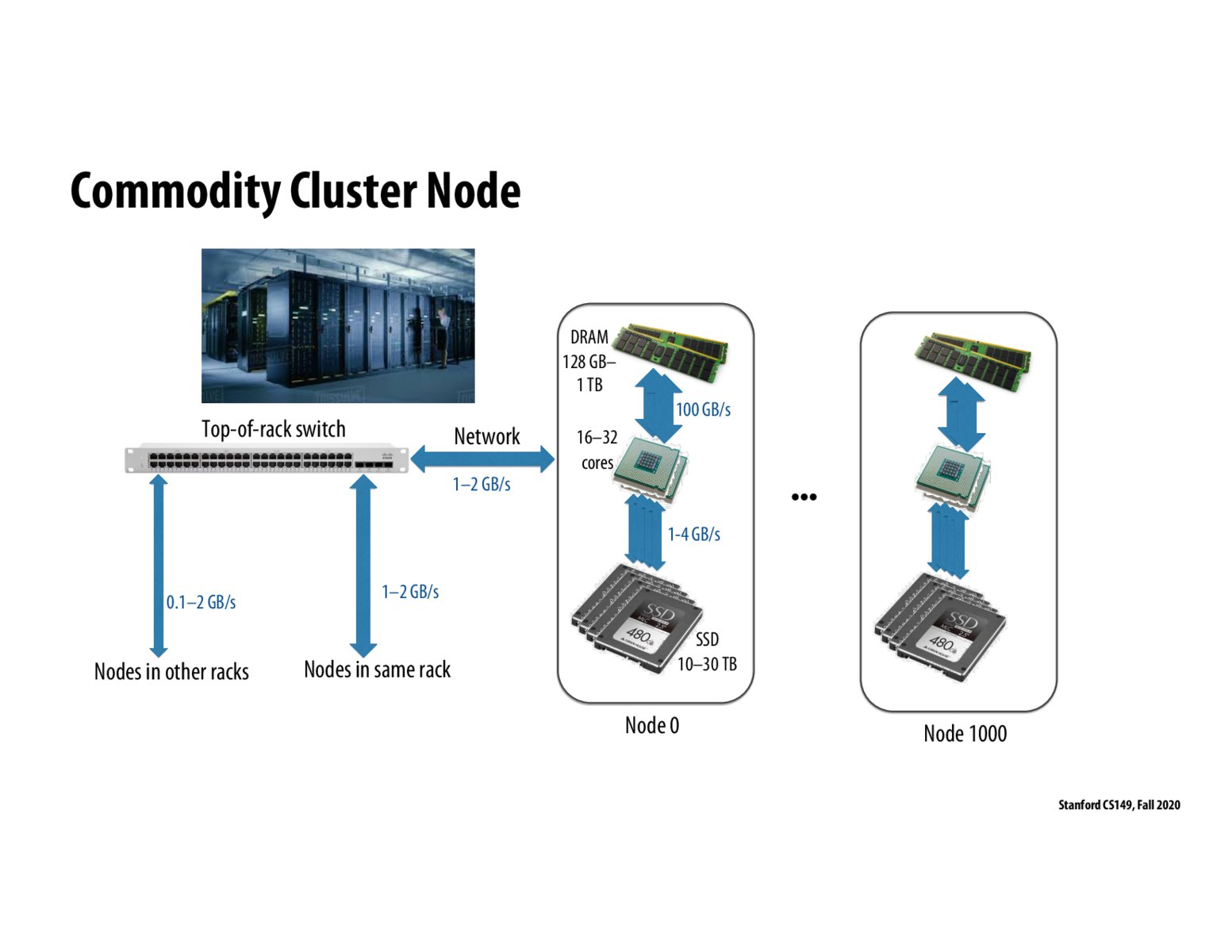

One interesting thing to note from this diagram and its associated specs is that the bandwidth to the local SSD is about the same as the bandwidth to other nodes in the same rack and elsewhere on the network. So if a dataset can be held in main memory spread across many machines, it might actually be faster to run calculations and data processing on data in main memory on other machines than on data stored on the SSD of your local machine.