@suninhouse Distributed computing (à la Stanford CS244b: http://www.scs.stanford.edu/20sp-cs244b/) covers many aspects of using many machines to solve problems. In addition to parallelism, scheduling, and performance, it includes topics such as security, privacy, and tolerating the failure of some of the machines.

In CS149 (with the exception of this lecture) we typically don't talk much about what happens if a user is trying to DDoS your system, or what happens if one of your processing nodes goes down. (For example, if one of the cores in an Intel CPU fails, you throw out the processor and get a new one.)

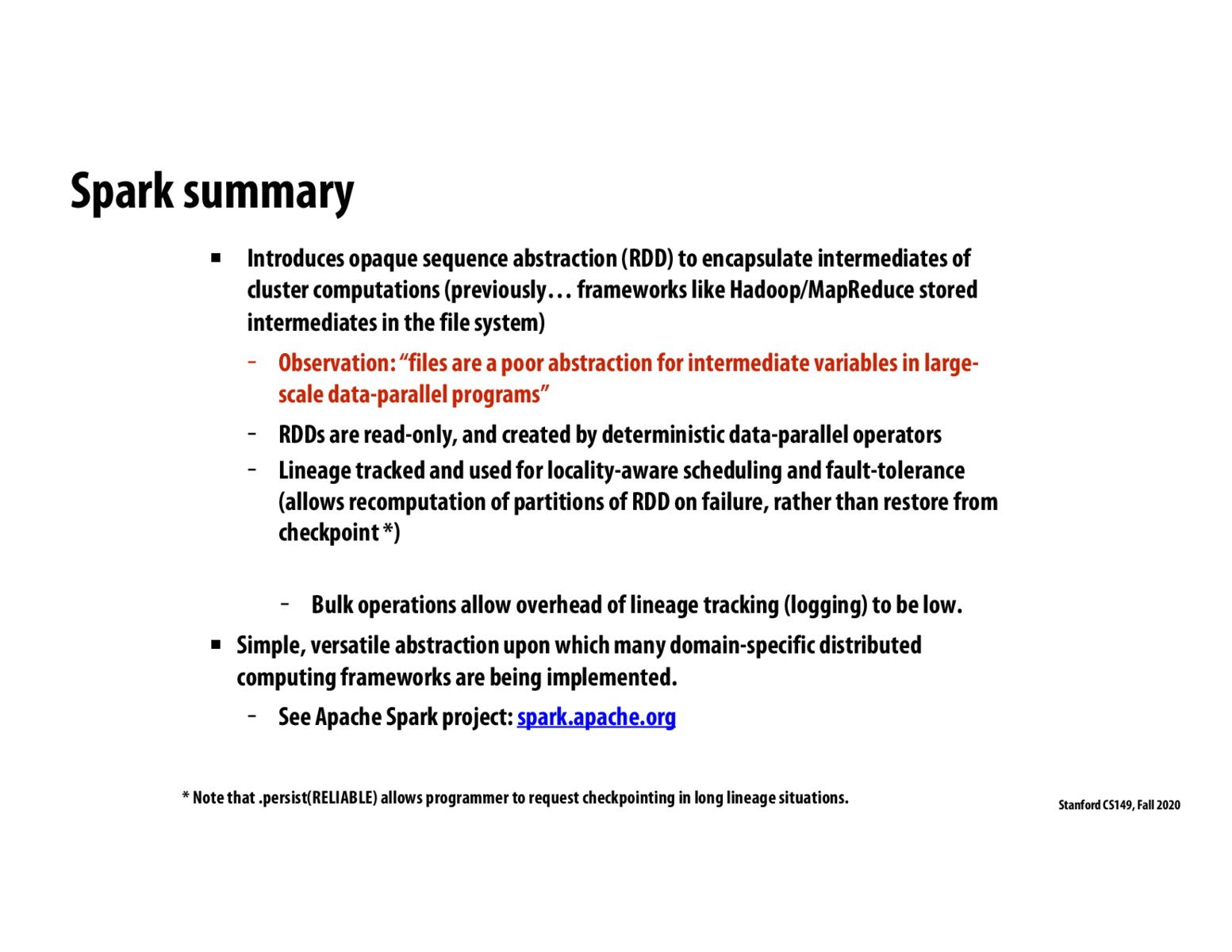

My understanding of Spark is that it is particularly good at handling intermediate data: RDDs are a more purpose-built container than ordinary files for the intermediate data of a distributed computation, largely because they make it easy to keep that data in memory. This leads me to a few questions about Spark's potential limitations. Say you want your intermediate data to persist for additional analysis later. Does Spark handle this well, given that RDDs would effectively be asked to serve the purpose of a traditional file? I also noticed that when discussing how Spark has been optimized, we have mostly talked about intermediate data. Why are the initial and result datasets less important to optimize around? Is there a built-in assumption that the initial dataset is not itself a form of intermediate data, i.e., that it is simply read once and pushed out to the workers?
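To make the in-memory vs. file distinction concrete, here is a toy model in Python of the idea as I understand it. It is not Spark's API: `ToyRDD`, `lose_partition`, and `save_as_json` are hypothetical names for illustration. The sketch assumes a dataset records how each partition is derived (its lineage), can keep computed partitions in memory, rebuilds a lost partition by replaying that lineage, and needs an explicit write to a file to outlive the program. In real Spark the analogous calls would be `cache()`/`persist()` and an output action such as `saveAsTextFile`.

```python
import json
import os
import tempfile
from typing import Callable, Dict, List, Optional


class ToyRDD:
    """Hypothetical, heavily simplified model of an RDD; not Spark's real API."""

    def __init__(self, compute: Callable[[int], List[int]], num_partitions: int):
        self._compute = compute                   # lineage: how to rebuild partition i
        self.num_partitions = num_partitions
        self._cache: Optional[Dict[int, List[int]]] = None  # in-memory copies, if cached
        self.recomputations = 0                   # times this RDD replayed its lineage

    @staticmethod
    def parallelize(data: List[int], num_partitions: int) -> "ToyRDD":
        size = -(-len(data) // num_partitions)    # ceiling division
        chunks = [data[i * size:(i + 1) * size] for i in range(num_partitions)]
        return ToyRDD(lambda i: list(chunks[i]), num_partitions)

    def map(self, f: Callable[[int], int]) -> "ToyRDD":
        # Lazy: only records how to derive each output partition from the parent.
        return ToyRDD(lambda i: [f(x) for x in self.partition(i)], self.num_partitions)

    def cache(self) -> "ToyRDD":
        self._cache = {}                          # keep computed partitions in memory
        return self

    def partition(self, i: int) -> List[int]:
        if self._cache is not None and i in self._cache:
            return self._cache[i]                 # served from memory
        self.recomputations += 1
        part = self._compute(i)                   # replay lineage
        if self._cache is not None:
            self._cache[i] = part
        return part

    def lose_partition(self, i: int) -> None:
        # Simulates a worker failure by dropping its in-memory copy.
        if self._cache is not None:
            self._cache.pop(i, None)

    def collect(self) -> List[int]:
        return [x for i in range(self.num_partitions) for x in self.partition(i)]

    def save_as_json(self, path: str) -> None:
        # Durable copy: survives after the program (and its memory) is gone.
        with open(path, "w") as fh:
            json.dump(self.collect(), fh)


squares = ToyRDD.parallelize(list(range(10)), num_partitions=2).map(lambda x: x * x).cache()
first = squares.collect()      # computes both partitions and caches them
second = squares.collect()     # served entirely from memory
squares.lose_partition(0)      # pretend the worker holding partition 0 died
third = squares.collect()      # partition 0 is rebuilt from lineage
print(squares.recomputations)  # 3: two initial computations plus one rebuild

path = os.path.join(tempfile.mkdtemp(), "squares.json")
squares.save_as_json(path)
with open(path) as fh:
    reloaded = json.load(fh)
```

The point the toy highlights is that the cached copy is an optimization that can be dropped and rebuilt, while only the explicitly written file is guaranteed to be around for "additional analysis later."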


This is a high-level question: what is the relationship between distributed computing and parallel computing? Is it fair to consider distributed computing as parallel computing that involves clusters of machines?