Intuitively this reordering will take more iterations until convergence. I guess there is an optimal balancing between the amount of parallelism and the convergence speed.

How did we figure out that this is another solution to the problem?

I also think @ruilin makes a good point, it seems like this would require more computations to figure out, but maybe the benefits of parallelism are so massive that they outweigh the downsides.

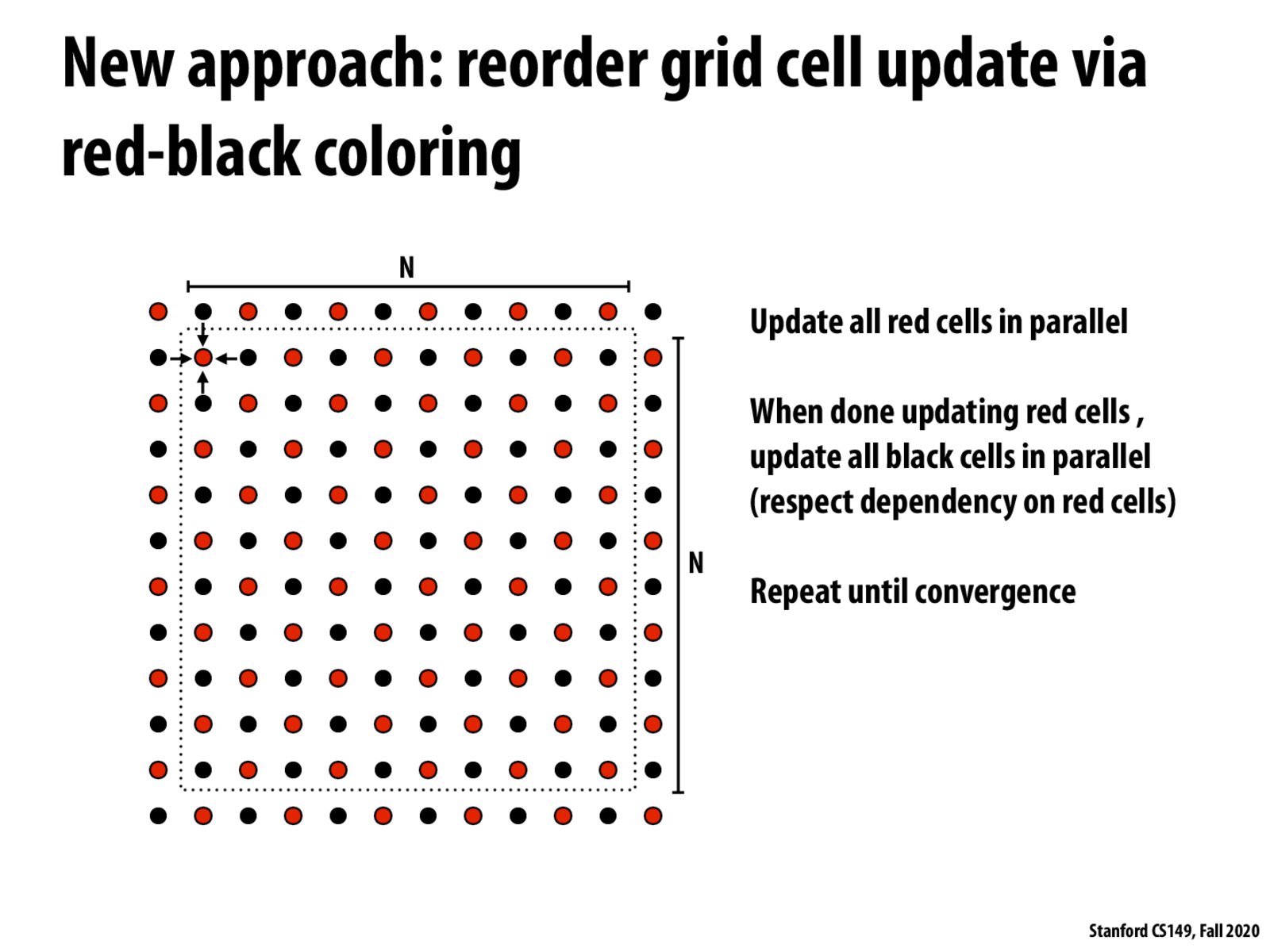

There are alternatives of ordering to allow parallel computing, for example, instead of partitioning the whole image into red and black dots, we could use something called “block red-black ordering.” In this method, nodes are divided into several blocks, and red-black ordering is applied to the blocks. This may achieve a high parallel speed-up rate due to fast convergence.

Reference: https://link.springer.com/article/10.1023/A:1021738303840

Going off of @ruilin's point, are there standard techniques or software used for figuring out how to optimally balance parallelism with convergence speed, or is ad-hoc analysis how this is done typically?

With this algorithm, I think the values of the red nodes are ignored? They all get overwritten as the average the neighboring black nodes, and then the black nodes are updated according to these new red nodes, i.e. each black node B is the 4/16-, 2/16-, or 1/16-weighted average of the 9 black nodes arranged in a diamond centered at B? If this is the case, it might be more efficient to calculate this operation directly on an array of the black nodes and a temp where they all get simultaneously updated, and we could switch between these two arrays in each iteration.

Hm...I don't think this is computing the same thing as the original sequential program. The sequential program overwrote entries of the matrix in a particular order, but this would be updating in a checkerboard pattern, so overwrites happen in a completely different order. I assume that the PDE algorithm isn't dependent on the order of overwrites so this is mathematically equivalent asymptotically, but it's not actually the same program (i.e. there are different results).

I agree with @sasukelover420's idea here, because the original exact algorithm is difficult to parallelize without using additional memory, (i.e. update in-place), we use this approximation algorithm instead to utilize the power of parallelization to speed up the algorithm, even though it takes more iterations for this algorithm to converge, it still finishes in a shorter time in most cases.

To add on to other points that intuitively, this does seem equivalent, it also seems to me that it wouldn’t be equivalent asymptotically, as the output of the program would be entirely determined by the color whose average is taken first. Maybe a way around this would be to double the dimensions of the grid such that each point is replaced by 4. Then we can assign the colors. This way half of each original point’s data is updated per iteration, preventing half the data from being ignored. A downside is of course that this quadruples the work to be done

Please log in to leave a comment.

Is there memory access overhead for this algorithm since you wouldn't be loading contiguous chunks?