Let's say we load 0x0 into cache and then some other thread goes and changes the actual value of 0x0. How do we make sure our cache is up-to-date?

@kaiang machines are required to follow cache coherence and memory consistency protocols to keep caches up-to-date after reads and loads. More info here: https://www.morganclaypool.com/doi/pdf/10.2200/S00346ED1V01Y201104CAC016

One question that is answered in class: when we write an address in memory, we first load it into cache, write in the cache. When we evict the cache, we will save it into memory, so that in total took 2 steps. That is called write allocate policy.

My question is: if it took 200 cycles to load from main memory to cache, does it also take 200 cycles to write to main memory from cache?

When fetching data from memory, do caches always pull exactly the size of the data they are fetching? I know that caches can prefetch extra data for efficiency, but what about the case where a piece of data is smaller than a cache line?

For example, say a cache needs to fetch a single character from a string from memory, but 4 characters fit in a cache line. Would a cache fetch that character as well as the next 3 characters to fill the cache line?

We assume least recently used eviction is good, but it seems there are many cases where this is far from optimal (eg a loop that uses an array just over the size of the cache). An alternative might be some kind of prediction scheme that tries to estimate future usage without just looking at most recent usage -- eg we can analyze a pattern of caches to predict program behavior (or update cache behavior based on programs). This seems quite tricky. Apparently there's a huge array of types of caching algorithms: https://en.wikipedia.org/wiki/Cache_replacement_policies.

How is the Least Recently Used Cache Line determined? Does this mean there has to be a separate place in memory where we keep track of the LRU line in each Cache?

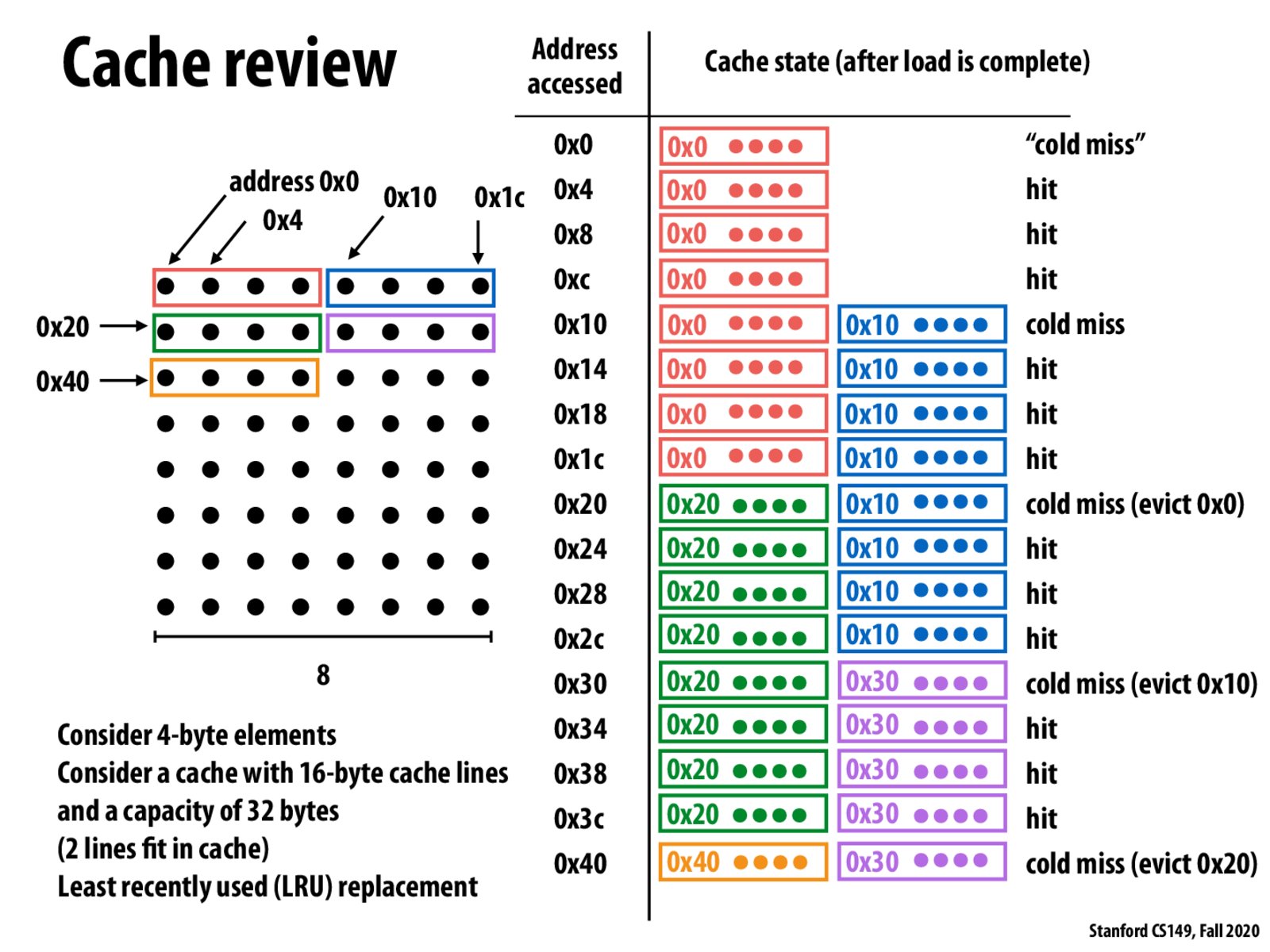

Just to make sure I understand, in this example, would caching help reduce the number of individual memory accesses by 3/4?

@cbfariasc Yes, I believe that is the case here. We are accessing the cache for every hit in the example and only need to access the main memory 1/4 of the time.

I agree with what @lexicologist said. Apart from that, sometimes we want to use the "volatile" keyword, in order to enforce threads to always read from memory. This is a common way to make the code thread safe.

Just wanted to respond to @jlara's question that kind of got lost. Yeah, cache lines are fixed sizes (for a given processor). You have to read in increments of this size. This effects of this "feature" can be see in programming assignment writeup problem 5 part 2. It's just like how if you wanted to read a single bit from memory, you actually end up needing to reading 7 of it's brethren because RAM operates on the granularity of bytes, not bits.

I was also curious about @andykhuu’s question and gave it a search. It seems like the way it’s determined/implemented is relatively understandable using a hashmap and a linked list to model the data structure. In short, new cache hits get moved to the front as well as newly loaded data, Unused data moves down the list and data at the end of the linked list gets evicted. But as @mvpatel2000 and my stack overflow links points out, there’s many implementation strategies. I’m not sure where keeping track of the cache algorithm happens though—maybe someone else can speak to that.

https://www.interviewcake.com/concept/java/lru-cache

https://stackoverflow.com/questions/23448528/how-is-an-lru-cache-implemented-in-a-cpu#:~:text=For%20a%20traditional%20cache%20with,be%20used%20to%20track%20LRU.&text=The%20final%20bit%20is%20set,an%20LRU%20hit%20had%20occurred.

@jt Can you clarify what you mean by using keyword volatile to make code thread safe? As far as I know, volatile in c++ is not an effective synchronization mechanism.

@kaiang Caches are implemented with various coherency protocols . For your particular case if the load from 0x0 followed by the the store to 0x0 occurs according to program order (maintains consistency) then everything should be fine. If load was meant to occur after the store then a cache protocol such as MSI (https://en.wikipedia.org/wiki/MSI_protocol) would mark the 0x0 cacheline as invalid thus requiring the processor to fetch the modified line from main memory.

One thing to note: if the cache is smaller than the data that a program is scanning through, then it would be as if there was no cache at all.

Please log in to leave a comment.

something that took me a while to see was that each line of the table on the right represents a separate cache state (rather than the entire table representing a single cache state).