@ChrisGabor This is a really valuable insight. I had never looked at memory this way; I always assumed that using local memory for intensive tasks was better than going over the network.

@ChrisGabor Is network bandwidth equal to the rate at which the end host can ingest the data, though? It seems like even with infinite network bandwidth, the data flow would still be bottlenecked by the receiver's ability to read that data into its own memory. Also, I think you'd have to assume the distributed data is already in the memory of the other machines. Otherwise, even when you request the data, each server would have to read it from its own disk as well.

I think @jchen's point that servers also have disks they have to read from (nobody's perfect, I guess) is an important one. At the end of the day, a program will run faster if its data is available on some sort of fast memory device. What @ChrisGabor is saying is true enough in practice because servers generally have large amounts of RAM (at least compared to our dinky little laptops). The speedup from going over the network comes from having more total RAM dedicated to a particular task, not from receiving data over the wire being inherently faster than reading from disk.
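One way to frame this exchange: a remote read is a pipeline (remote source, then network, then receiver ingest), and in steady state its throughput is capped by the slowest stage. Here is a minimal sketch of that model. The function name and every rate except the 10 Gbit Ethernet and SSD figures are hypothetical numbers chosen for illustration, not measurements.

```python
def effective_throughput(*stage_rates_mb_s):
    """Steady-state throughput of a chain of stages is limited by the slowest one."""
    return min(stage_rates_mb_s)

# Rates in MB/s. Only the network and SSD figures come from the thread;
# the RAM and ingest figures are assumed values for illustration.
network = 1250.0          # 10 Gbit Ethernet
remote_ssd = 500.0        # remote server must read the data from its SSD
remote_ram = 10000.0      # remote server already holds the data in RAM (assumed)
receiver_ingest = 5000.0  # receiver copying data into its own memory (assumed)

# Data on the remote server's disk: the remote SSD is the bottleneck.
print(effective_throughput(remote_ssd, network, receiver_ingest))
# Data already in the remote server's RAM: now the network is the bottleneck.
print(effective_throughput(remote_ram, network, receiver_ingest))
```

With these numbers the first case is limited to 500 MB/s and the second to 1250 MB/s. The network only "beats" the disk when the data is already sitting in someone's RAM. This simple model ignores latency and per-request overhead.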


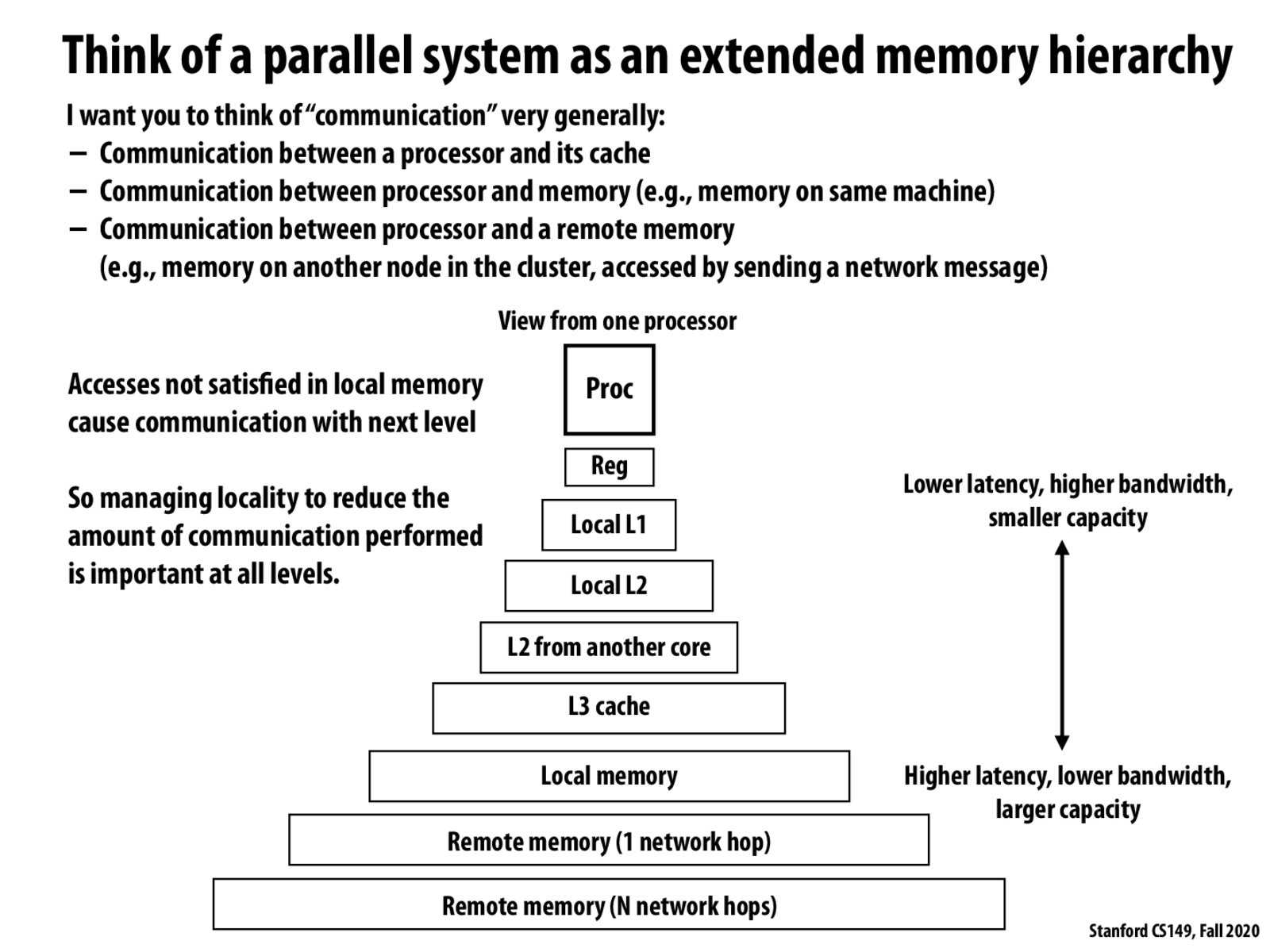

In terms of large-scale data applications, I find it interesting that accessing memory over the network is faster than accessing a disk. 10 Gbit Ethernet (1.25 GB/s) is faster than disk reads at 10 MB/s and SSDs at 500 MB/s. The implication seems to be that if you have an application that must use tens to hundreds of gigabytes of data (such as training a deep learning model), it might make sense to distribute the work over a network instead of streaming data from a disk.
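To put those rates in perspective, here is a quick back-of-the-envelope sketch of how long one pass over a dataset takes at each rate. It assumes decimal units (1 GB = 1000 MB, 8 bits per byte) and a hypothetical 100 GB dataset. It also assumes the source can actually sustain the quoted rate.

```python
# Rates quoted in the comment above, in MB/s.
RATES_MB_PER_S = {
    "disk (10 MB/s)": 10.0,
    "SSD (500 MB/s)": 500.0,
    "10 Gbit Ethernet (1.25 GB/s)": 1250.0,
}

def gbit_to_mb_per_s(gbit):
    # 1 Gbit = 1000 Mbit = 125 MB (decimal units, 8 bits per byte).
    return gbit * 1000 / 8

def seconds_to_read(dataset_gb, rate_mb_s):
    # Time for one sequential pass over the dataset at a constant rate.
    return dataset_gb * 1000 / rate_mb_s

assert gbit_to_mb_per_s(10) == 1250.0  # sanity check for the 1.25 GB/s figure

for name, rate in RATES_MB_PER_S.items():
    print(f"{name}: {seconds_to_read(100, rate):,.0f} s")
```

For a 100 GB dataset, this works out to 10,000 s (almost 3 hours) from the 10 MB/s disk, 200 s from the SSD, and 80 s over 10 Gbit Ethernet. Training usually makes many passes over the data, which is where the gap really adds up.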