@Nian, I can give it a go!

I think the first place to draw a distinction is between SIMD and SIMT. SIMD describes an execution model in which one instruction can be executed on multiple pieces of data (hence, 'Single Instruction Multiple Data'). In class we've seen and worked with SIMD programming via 'vectorization' of instructions, where a single instruction could act on a chunk of data in a container (maybe 8 lanes in a vector). This capability is possible for chips that have special ALU's capable of executing SIMD instructions (there are some good visuals from lecture 1 and the lecture 2 review, but idk where the review slides are :(). SIMT, however, allows for a single instruction to be played out among multiple threads in parallel (this is an example of simultaneous multithreading). This is only possible on a single core if the core has multiple contexts to support multiple hardware threads, and if the chip has the appropriate logic units, it would be able to identify the SAME instruction in each thread routine, and execute this instruction simultaneously using the multiple ALU's. It really is 'single instruction' on 'multiple data', but the important distinction here is that the instructions are all coming from independent instruction streams. SIMT really hits its stride in GPU's where you might want to split up a routine into multiple threads, but the work you do is fairly repetitive.

Let's step out for a second now. SPMD (Single Program, Multiple Data) is simply a paradigm that can be used to achieve parallelism, characterized by the splitting of work into smaller pieces and running the pieces in parallel from a single program -- importantly, the work is frequently split among multiple processors. Note that SPMD off the bat sounds broader than SIMD and SIMT -- SPMD allows for multiple threads to be running tasks, with their own instruction pointers, whereas in SIMD and SIMT, the kicker is that the exact same instruction is being executed multiple times -- SIMD/SIMT can't magically do different operations simultaneously. I think the confusion with how SPMD plays into everything is that these terms were conceived at different times by different people, and they have different scopes. SIMD and SIMT define execution models, where both programming and hardware must be specialized to parallelize, whereas SPMD is a much broader classification -- it's really just a program descriptor.

One last example to contextualize: ISPC is a specific programming language and runtime that allows users to (in Intel's own words) "write SPDM programs to run on the cpu." Yes, it's true that ISPC has the capability to convert loops into gangs of program instances that utilize SIMD instructions to parallelize your code, but ISPC programs are simply certain applications of SPMD programs. Hopefully that's a better example of how these paradigms / abstractions separate implementation from general behavior!

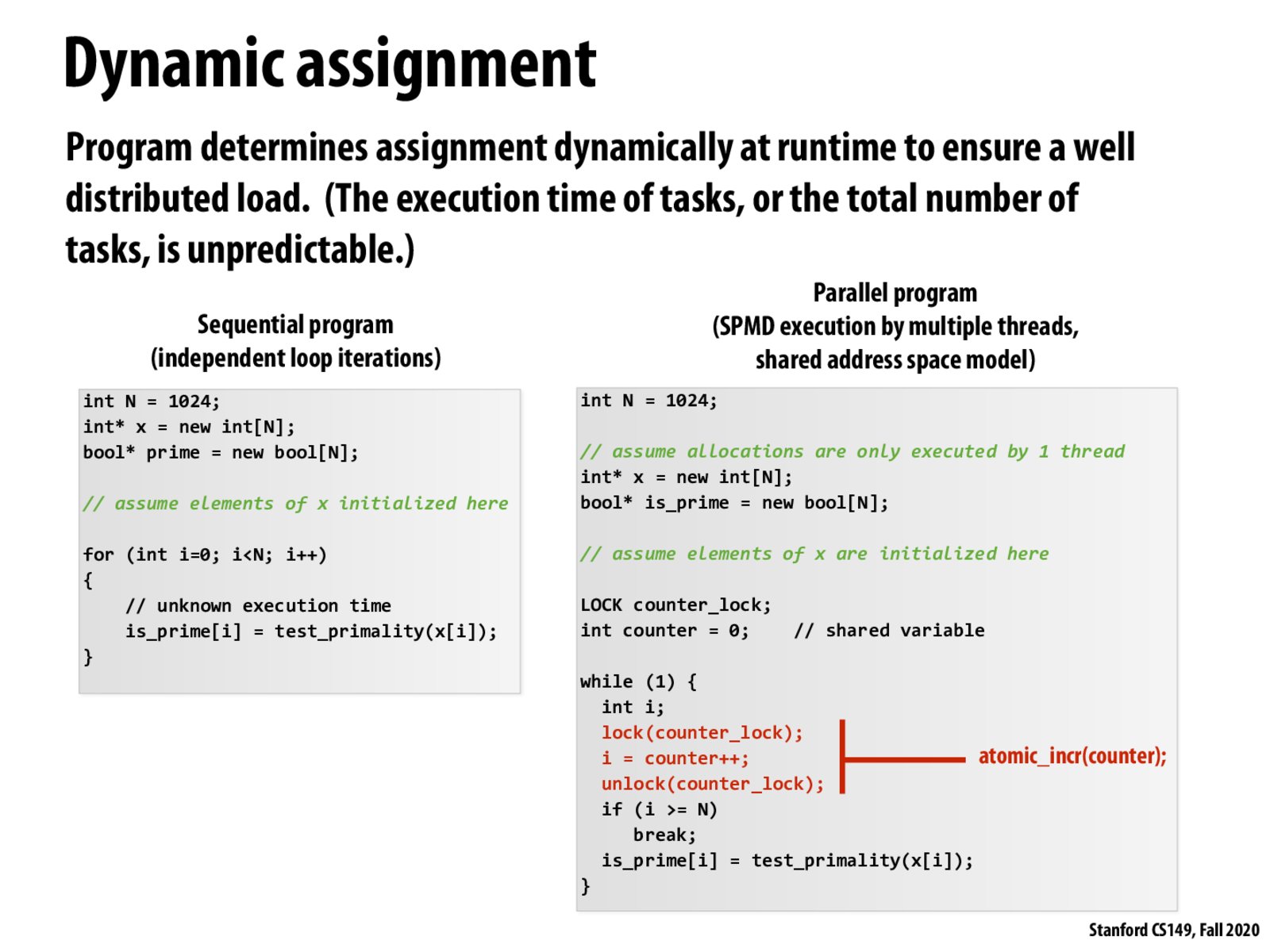

The downside to dynamic assignment is that it requires processing elements (whatever they may be) to communicate with each other, which can result in nontrivial slowdowns.

I am a little bit confused on the inherent disadvantages of dynamic assignment. I totally understand the task size discussion in the later slides that with a proper task size, we can reduce the sync cost. But static assignment also have the sync cost, for example, when they change the global variables they have to acquire the lock. Or are we talking about the sync cost with the work queue, which static assignment won't have?

@icebear101 I don't think static assignment will need a lock because every part is independent. A thread doesn't need to know about other threads' status in order to complete its work.

@haiyuem Actually for the grid solver problem, you do need information (e.g. boundary values) from other threads. Therefore time cost on acquiring locks/sync process could still exist on static assignment. I think @icebear101 the overhead may come from stealing back and forth from the work queue (in Silk) or an additional thread to distribute tasks to workers (in threadpool).

@kevtan made a good point. We saw with OpenMP on assignment 4 that dynamic assignment would actually hurt performance, especially if the amount work in the loop is small.

Please log in to leave a comment.

I am still confused about SPMD,SIMD,SIMT? Is there anyone that can explain the difference for me?