@bayfc I think this is a good example of the idea that writing efficient software comes down to two things: 1) the algorithms used for the tasks (think complexity analysis) and 2) the underlying hardware the tasks run on (think everything we talk about in this class). Even once a program has optimal asymptotic complexity, there is still plenty to refine. For example, you can make data accesses cache friendly, restructure the algorithm to achieve higher arithmetic intensity, or pin threads to cores.

I would think that any implementation of the SPMD model, whether it is an arbitrary multithreaded program or code compiled by ISPC, prioritizes these two points.

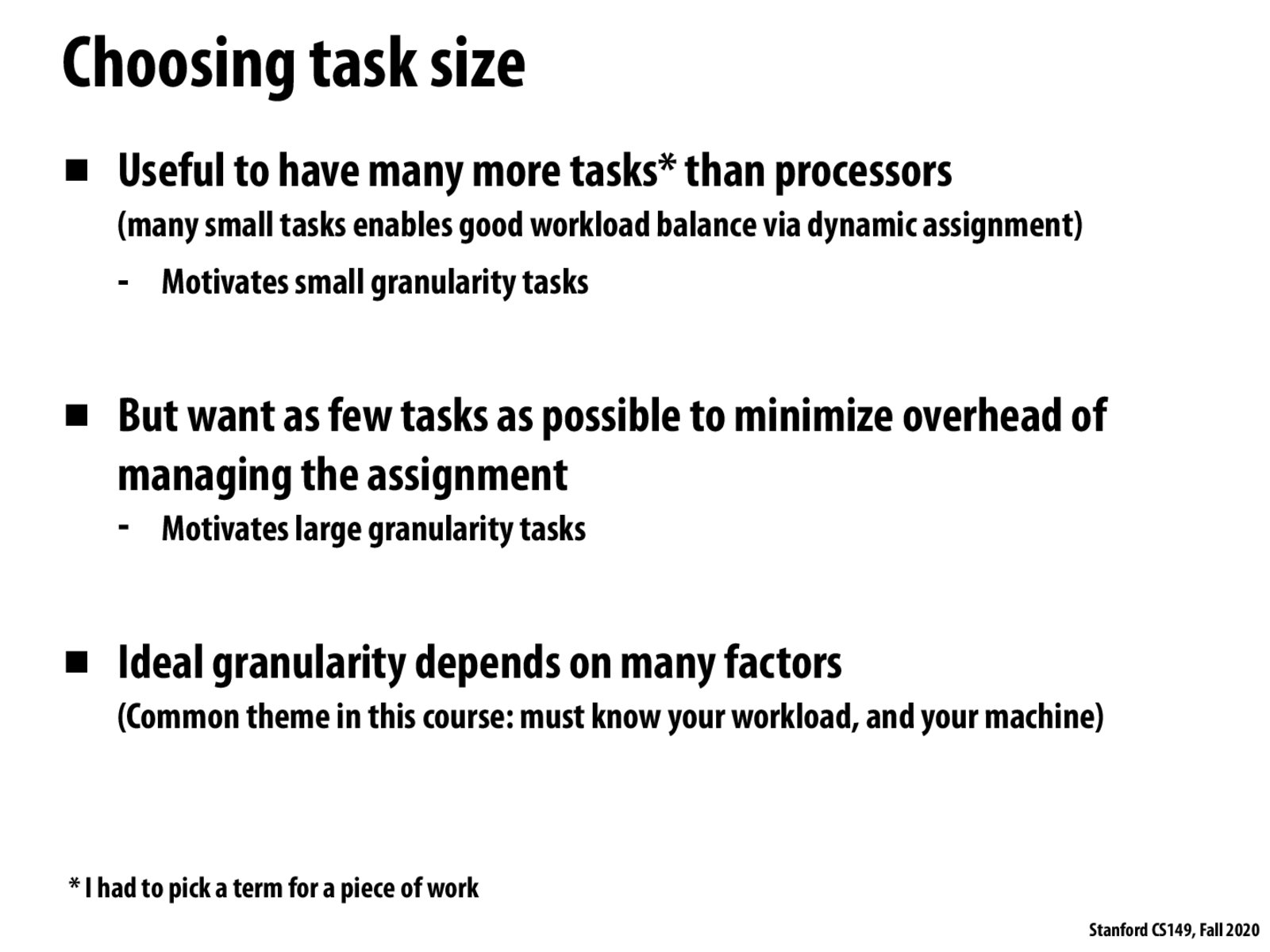

I think whether we can choose an optimal task size beforehand depends on the problem we are solving. Assignment one is a clear example: knowing the problem led us to assign work in an interleaved fashion instead of in contiguous blocks. I think that by knowing the problem you can guess a near-optimal task size, and then test empirically to refine it.
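To make the blocked vs. interleaved point concrete, here is a minimal sketch. The cost profile is made up (it is not the actual assignment workload), and the names `blocked`, `interleaved`, and `worker_loads` are just for illustration. It assumes the expensive items are clustered together, which is the case where contiguous blocks tend to hurt.

```python
def blocked(n_items, n_workers):
    """Give each worker one contiguous block of item indices."""
    size = (n_items + n_workers - 1) // n_workers  # ceiling division
    return [list(range(w * size, min((w + 1) * size, n_items)))
            for w in range(n_workers)]


def interleaved(n_items, n_workers):
    """Give worker w the items w, w + n_workers, w + 2 * n_workers, ..."""
    return [list(range(w, n_items, n_workers)) for w in range(n_workers)]


def worker_loads(cost, assignment):
    """Total cost each worker ends up with under a static assignment."""
    return [sum(cost[i] for i in items) for items in assignment]


# Hypothetical cost profile: items near the middle are the most expensive.
N_ITEMS, N_WORKERS = 16, 4
cost = [min(i, N_ITEMS - 1 - i) + 1 for i in range(N_ITEMS)]

blocked_loads = worker_loads(cost, blocked(N_ITEMS, N_WORKERS))
interleaved_loads = worker_loads(cost, interleaved(N_ITEMS, N_WORKERS))

# The slowest worker determines the finish time, so compare the maxima.
print("blocked    :", blocked_loads, "max =", max(blocked_loads))
print("interleaved:", interleaved_loads, "max =", max(interleaved_loads))
```

With this particular profile the two middle workers get most of the work under the blocked scheme, while interleaving spreads the expensive items evenly. A different cost profile could favor blocks, for example when locality matters more than balance.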

Are there any good methods for choosing an optimal task size other than empirically testing which is fastest? Testing works fine for some applications, but I would think that implementing certain parallel programming models requires predicting a good task size. For example, an implementation of the SPMD model has to divide the work of an arbitrary program automatically.
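One possible way to get a first guess without running the real program is to simulate the schedule from a rough cost model. The sketch below is only that: it assumes you already have an estimated per-item cost and a fixed per-task overhead (both hypothetical inputs), and it assumes workers pull consecutive chunks from a shared queue. The function names `simulated_makespan` and `best_chunk` are made up for this example.

```python
import heapq


def simulated_makespan(cost, n_workers, chunk, overhead):
    """Estimate the finish time when idle workers pull chunks from a queue.

    cost      -- estimated cost of each work item (an assumption, not measured)
    chunk     -- number of consecutive items per task
    overhead  -- assumed fixed cost of launching one task
    """
    free_at = [0.0] * n_workers  # min-heap of times at which workers go idle
    heapq.heapify(free_at)
    for start in range(0, len(cost), chunk):
        t = heapq.heappop(free_at)  # the earliest idle worker takes the task
        task_cost = overhead + sum(cost[start:start + chunk])
        heapq.heappush(free_at, t + task_cost)
    return max(free_at)


def best_chunk(cost, n_workers, overhead, candidates):
    """Pick the candidate chunk size with the smallest simulated finish time."""
    return min(candidates,
               key=lambda c: simulated_makespan(cost, n_workers, c, overhead))


# Hypothetical inputs: 16 items with skewed costs, 4 workers, overhead of 1.
est_cost = [float(min(i, 15 - i) + 1) for i in range(16)]
print(best_chunk(est_cost, n_workers=4, overhead=1.0,
                 candidates=[1, 2, 4, 8, 16]))
```

The simulation captures the usual tradeoff: small tasks balance load but pay the overhead more often, while large tasks do the opposite. The prediction is only as good as the cost estimates, so it would still make sense to confirm the chosen size empirically.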