I think GPUs initially were NOT capable of computations as general as they are today. The first people who used GPUs for non-graphics work had to use a "hack": they expressed their computations as graphics operations such as shader programs and textures. I imagine this hack did not exploit the full power of the GPU. It seems like Nvidia made an intentional decision (perhaps reflected in the many architecture changes since then) to support more general programming.

http://cs149.stanford.edu/fall20/lecture/gpuarch/slide_27

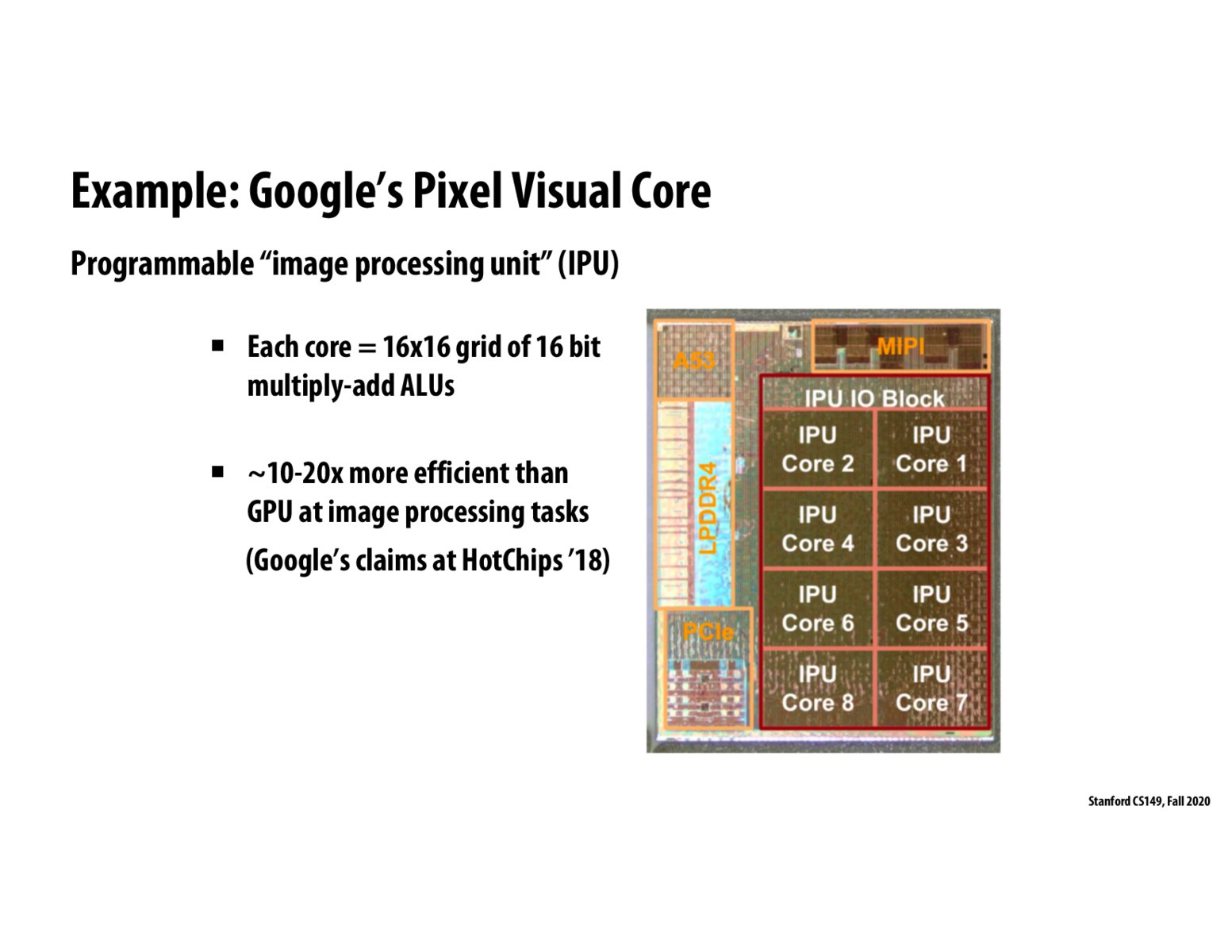

I think @fizzbuzz is making a good point, and it's one I'm also interested in. The GPU evolution we talked about in class seems to have been about generalizing the programming model and making GPUs easier to program. However, to improve energy efficiency, we may have to go back to domain-specific designs.

@fizzbuzz Current GPUs (at least NVIDIA GPUs) are designed for both graphics and compute workloads. They handle both with the same HW pipeline, but the pipeline behaves slightly differently for each. For example, a few HW units act as important resource managers when graphics work arrives, but simply pass compute work through to downstream HW. You can think of this as a switch between the two modes.

I think it makes sense for the same chip to support both graphics and compute applications, because both require huge amounts of computation, and in most cases the HW components can be reused. In addition, a growing number of graphics applications now include compute workloads, and NVIDIA is adding a feature that lets compute and graphics warps be in flight at the same time. Overall, it's a commercial decision, and I think it is likely better (cheaper and more efficient) for users to have a single card that handles both kinds of applications than one card for games and another for ML training.

By the way, for each GPU architecture generation, NVIDIA offers two versions of the chip: one optimized for compute and one optimized for graphics, each targeting a different audience. I think that partly solves the problem.


From what we've learned about the history of GPUs, it seems like their generality was almost an accident: they were intended for graphics processing but ended up being useful for many other things. Does this mean that GPUs, as we know them now, are too general and should be replaced wholesale with something more specialized for graphics?