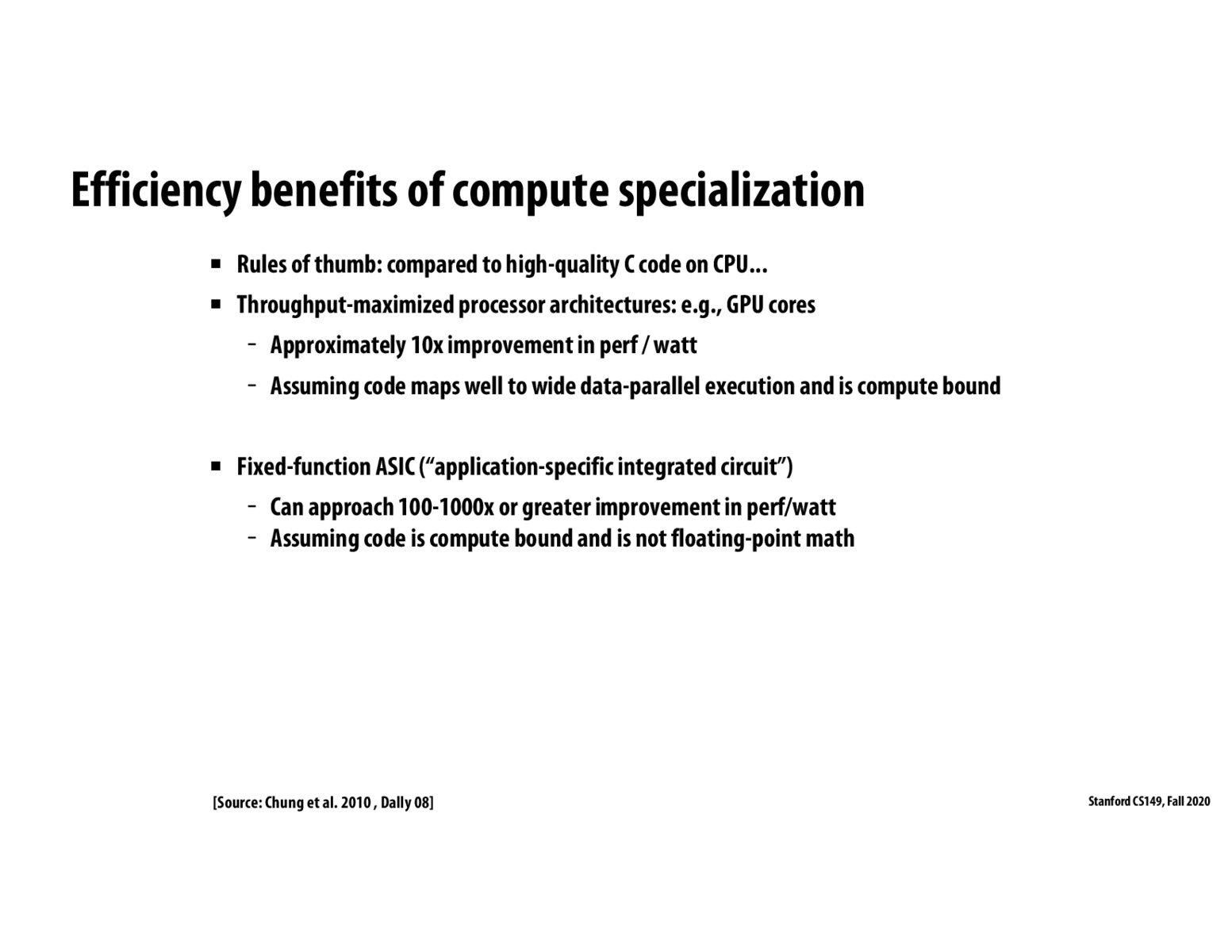

The main reason for these large differences in perf/watt is overhead. Because CPUs are meant to be general purpose, they have to carry the most overhead: logic to handle all of the different cases, plus the work of identifying (fetching and decoding) the current operation. GPUs, on the other hand, come with a host of assumptions about the type of operations being performed (for example, a single instruction is executed across an entire warp simultaneously). ASICs take this even further, since they impose more and more limits on the TYPE of computation that can be performed.

I'm a bit confused by the last bullet point: why can't the ASIC do floating-point math and still get significant energy improvements? I know that floating-point math is much more expensive than integer math (I implemented floating-point math in Verilog once), but I don't think this would significantly reduce the ASIC's advantage relative to the CPU.

@dishpanda I think it is about the relative amount of overhead. Doing integer math on a CPU may result in 5% of power going into the actual math operation and 95% going into instruction fetch/decode and other overhead. In this case, switching to an ASIC and removing most of that overhead gives you a huge efficiency improvement. On the other hand, doing floating-point math on a CPU may result in 50% of power going into the actual math operation and 50% going into instruction fetch/decode and other overhead. In this extreme case the CPU is not that inefficient to begin with, so the improvement from switching to an ASIC is smaller.
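
To put rough numbers on that argument, here is a minimal sketch. It assumes (my simplification, not something from the slide) that an ideal ASIC removes all of the overhead and spends the same energy on the math itself, so the best-case perf/watt gain is just 1 divided by the fraction of power doing useful work. The function name `max_efficiency_gain` is made up for illustration, and the 5% and 50% figures are the illustrative ones from the comment above, not measurements.

```python
def max_efficiency_gain(useful_fraction: float) -> float:
    """Best-case perf/watt gain if all non-math overhead is removed.

    useful_fraction: share of CPU power spent on the actual math operation.
    Assumes the math itself costs the same energy on the ASIC (a simplification).
    """
    if not 0.0 < useful_fraction <= 1.0:
        raise ValueError("useful_fraction must be in (0, 1]")
    return 1.0 / useful_fraction


# Illustrative percentages from the comment above, not measured data.
int_gain = max_efficiency_gain(0.05)  # integer math: 5% useful work
fp_gain = max_efficiency_gain(0.50)   # floating-point math: 50% useful work

print(f"integer: up to {int_gain:.0f}x")
print(f"floating point: up to {fp_gain:.0f}x")
```

Under these assumptions the integer case has up to a 20x ceiling while the floating-point case tops out at 2x, which is the gap the bullet point seems to be getting at.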

Should we characterize hardware features like Jazelle and some of the recent JavaScript execution capabilities in Apple's mobile processors as specialized hardware? It seems like a similar idea with similar improvements, but JavaScript feels like a general application space to me.