@mvpatel2000, the general trend in modern hardware design that we've talked about (specifically for individual logic units in things like GPUs and CPUs) seems to be to take common use cases and build dedicated hardware for them. So I would speculate that computers expected to make use of transactional memory will mostly implement it in hardware.

However, I wonder how this scales to different computing use cases. Based on my reading, it seems that most commercial programs do not use transactional memory at all. So where we are hardware constrained (mobile chips, etc.), maybe software transactional memory would make more sense, for example if we do not have the physical space on the chip to add hardware units for this apparently niche use case? And what about applications that perform a lot of I/O, where we can't 'undo' an operation, which would render transactions useless for that work?
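To make the I/O concern concrete, here is a minimal single-threaded sketch of an optimistic, retry-on-conflict transaction. It is not a real STM library: `VersionedCell`, `run_transaction`, and `conflicting_write` are hypothetical names, and the "other thread" is simulated by a hook that writes to the cell during the first attempt. The point is only that the memory update can be discarded and retried, while the side effect recorded in `io_log` (standing in for something like a network send) cannot be taken back.

```python
class VersionedCell:
    """Toy shared variable with a version counter (hypothetical, not a real STM API)."""

    def __init__(self, value):
        self.value = value
        self.version = 0

    def try_commit(self, read_version, new_value):
        # Commit only if nobody has written since this transaction read the cell.
        if self.version != read_version:
            return False
        self.value = new_value
        self.version += 1
        return True


def run_transaction(cell, body, interfere=None):
    """Re-run `body` until its result commits; return the number of attempts."""
    attempts = 0
    while True:
        attempts += 1
        read_version = cell.version
        new_value = body(cell.value)
        if interfere is not None and attempts == 1:
            # Assumption: simulate a conflicting write from another thread.
            interfere(cell)
        if cell.try_commit(read_version, new_value):
            return attempts


io_log = []  # stands in for an irreversible side effect, e.g. a network send


def body(current):
    io_log.append(f"sent {current}")  # happens on every attempt, even aborted ones
    return current + 1


def conflicting_write(cell):
    cell.value += 10
    cell.version += 1


cell = VersionedCell(0)
attempts = run_transaction(cell, body, interfere=conflicting_write)
print(attempts, cell.value, io_log)
```

In this sketch the first attempt aborts and its write to the cell is thrown away, but its entry in `io_log` stays. A real system would need to defer, forbid, or serialize such operations inside transactions.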

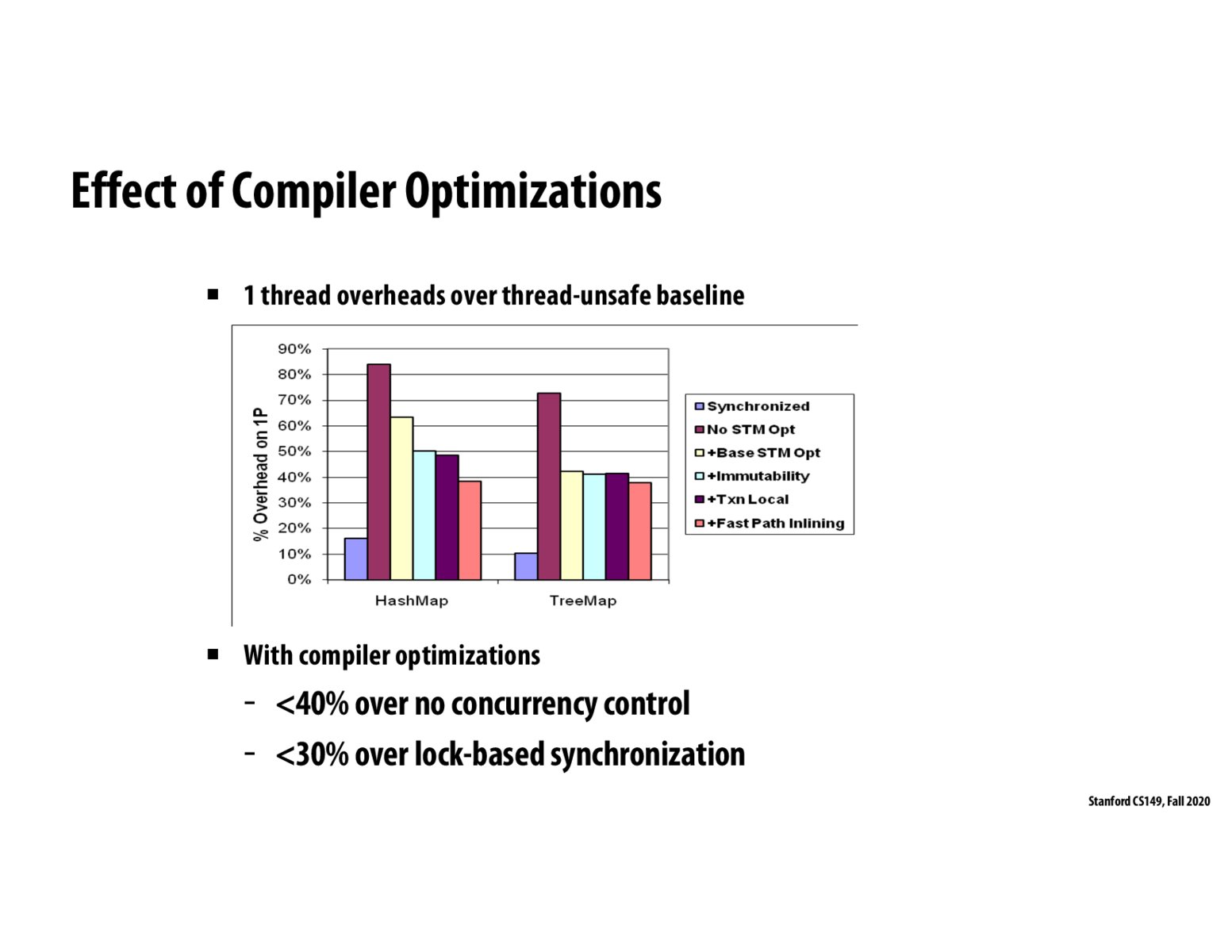

@mvpatel2000 Important note: the diagram is from single-threaded testing. I think TM will perform much better at larger thread counts: normal synchronization methods will have more overhead than the diagram shows, because threads "fight" for the same lock, as shown in http://cs149.stanford.edu/fall20/lecture/lockfree/slide_21.

It seems like the overhead of software transactional memory is huge. Is there a link to the paper or source of this data? I wonder whether there is still ongoing research on optimizing purely STM systems, or whether this line of research has mostly stopped because hardware-based systems perform better. Given the vast performance difference, pure STM systems don't seem feasible for much beyond educational purposes.