It's worth noting that fine-granularity conflict detection has a higher space overhead simply because it must track state for more things (for instance, a lock or version number for every field you want to track). That metadata takes up space and can significantly increase a program's memory requirements.
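To put rough numbers on this, here is a minimal sketch that counts the metadata bytes for a heap of objects under each granularity. It assumes, hypothetically, one fixed-size record (an 8-byte lock/version word) per tracked unit. Real systems differ in record size and may hash many locations onto a shared table of records.

```python
# Hypothetical record size: one 8-byte lock/version word per tracked unit.
RECORD_BYTES = 8

def record_overhead(num_objects: int, fields_per_object: int, granularity: str) -> int:
    """Return the bytes of conflict-detection metadata for the given granularity."""
    if granularity == "object":
        records = num_objects                      # one record per object
    elif granularity == "field":
        records = num_objects * fields_per_object  # one record per field
    else:
        raise ValueError(f"unknown granularity: {granularity}")
    return records * RECORD_BYTES

if __name__ == "__main__":
    # 1,000 objects with 4 fields each.
    print(record_overhead(1000, 4, "object"))  # 8000
    print(record_overhead(1000, 4, "field"))   # 32000
```

Under these assumptions, field granularity multiplies the metadata by the number of fields per object.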

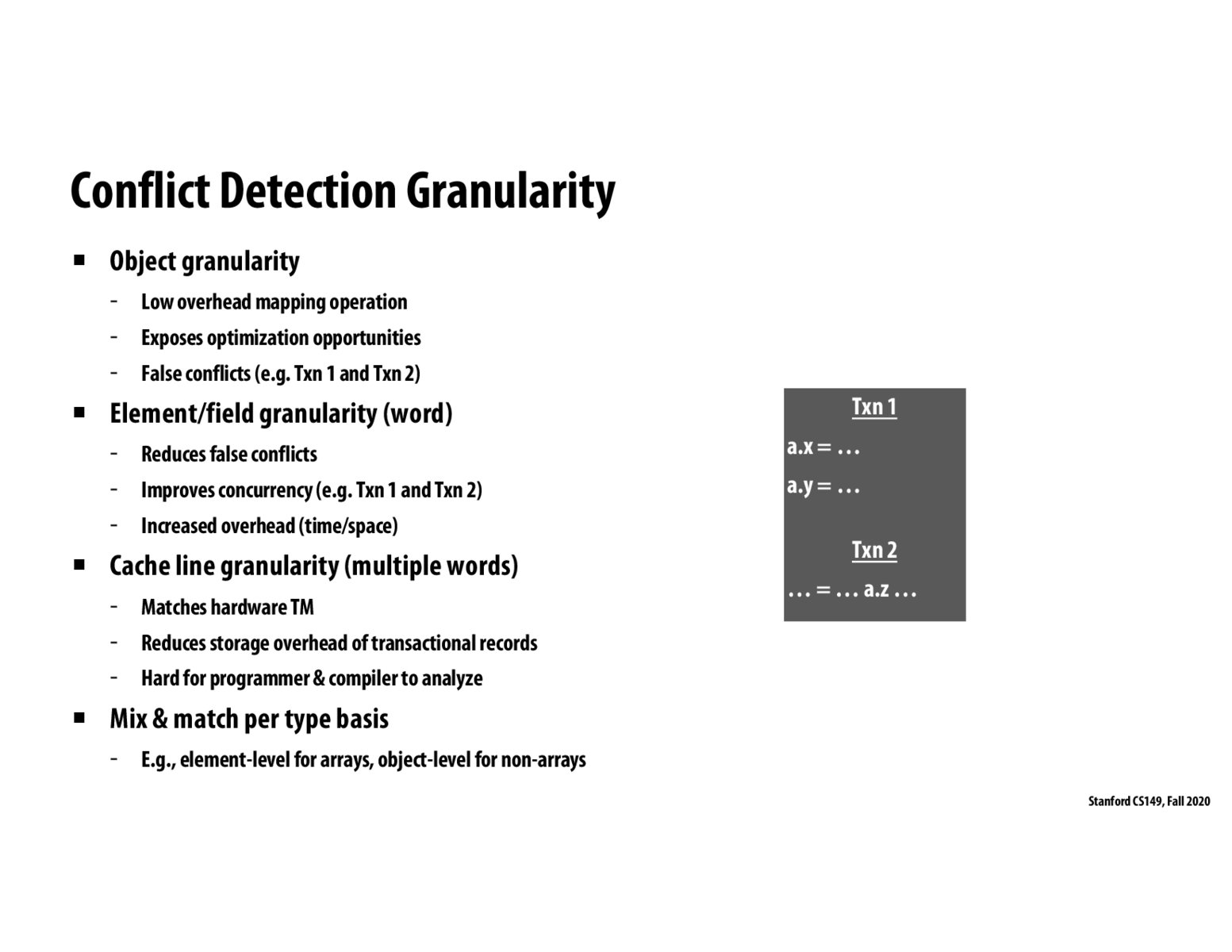

False conflict is similar to false sharing, which happens when processors in a shared-memory parallel system reference different data objects within the same coherence block, inducing unnecessary coherence operations. Also notice that the false conflicts caused by coarse-granularity conflict detection are a form of artifactual communication.
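The analogy can be made concrete with a minimal sketch, assuming a 64-byte coherence block and byte addresses. Two distinct variables whose addresses fall in the same block are tracked as one unit, just as an object-level record makes an access to one field collide with an access to another.

```python
BLOCK_BYTES = 64  # assumed coherence-block (cache-line) size

def block_of(addr: int) -> int:
    """Index of the coherence block containing byte address addr."""
    return addr // BLOCK_BYTES

def falsely_shared(addr_a: int, addr_b: int) -> bool:
    """True if two different addresses land in the same coherence block."""
    return addr_a != addr_b and block_of(addr_a) == block_of(addr_b)

if __name__ == "__main__":
    x_addr, y_addr = 0x1000, 0x1008  # two 8-byte variables, adjacent in memory
    z_addr = 0x1040                  # starts the next 64-byte block
    print(falsely_shared(x_addr, y_addr))  # True
    print(falsely_shared(x_addr, z_addr))  # False
```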

To sum up, the granularity of conflict detection involves tradeoffs. Finer granularity (element/field granularity) prevents false conflicts between different fields or elements of the same object and so improves concurrency, but it costs more space and time. Coarse granularity (object granularity) has lower overhead, but false conflicts happen more often. One can mix and match on a per-type basis to choose the most suitable granularity for each type.
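One way to picture per-type mix and match is a policy table that decides, for each type, which key a memory access is tracked under. The sketch below is hypothetical: the type names, the `POLICY` table, and the default of object granularity for unlisted types are illustrative assumptions, not taken from any particular TM system.

```python
from typing import Hashable

# Hypothetical per-type policy: which unit each type is tracked at.
POLICY = {
    "Point": "object",   # small, rarely contended: one record per object
    "Array": "element",  # large, often updated in parallel: one record per element
}

def tracking_key(type_name: str, obj_id: int, member: Hashable) -> tuple:
    """Key under which an access to obj_id.member is conflict-checked."""
    # Types missing from POLICY fall back to object granularity (an assumption).
    if POLICY.get(type_name, "object") == "object":
        return (obj_id,)          # all members share the object's record
    return (obj_id, member)       # each field/element has its own record

if __name__ == "__main__":
    # Two accesses to different elements of the same array: distinct keys.
    print(tracking_key("Array", 7, 0) == tracking_key("Array", 7, 1))      # False
    # Two accesses to different fields of the same Point: same key.
    print(tracking_key("Point", 3, "x") == tracking_key("Point", 3, "y"))  # True
```

Whether such a table is filled in by the system or by the programmer is exactly the open question raised below.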

In the mix and match protocols, does the programmer or the hardware usually decide the level of granularity?

In other words, does the system say: "all arrays will always be element granular, other objects will be object granular".

Or does the programmer say: "hmm this is a small array that I'm not usually accessing at the same point in the program, I will make this array object granular to avoid incurring the overhead. But for this other array, I want it to be element granular"?

To recap, the main benefit of field-level granularity is that it reduces the chance of false conflicts, which are more common under object-level granularity. Consider two threads whose transactions modify different, disjoint fields of the same object: with transaction records at object granularity the two transactions conflict, whereas with transaction records at field granularity they do not. Note that choosing between the two granularities also involves a tradeoff, since field granularity adds overhead (more transaction records exist).
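The two-thread example can be sketched by mapping each write to the transaction record that guards it and checking whether both transactions need the same record. This is a minimal model under stated assumptions: `record_for` is a hypothetical naming scheme, a transaction must own a record exclusively to write the locations it guards, and reads and versioning are ignored.

```python
def record_for(obj: str, field: str, granularity: str) -> str:
    """Name of the transaction record guarding obj.field (hypothetical scheme)."""
    return obj if granularity == "object" else f"{obj}.{field}"

def write_write_conflict(writes1, writes2, granularity: str) -> bool:
    """True if two transactions' write sets need a common record."""
    recs1 = {record_for(o, f, granularity) for o, f in writes1}
    recs2 = {record_for(o, f, granularity) for o, f in writes2}
    return bool(recs1 & recs2)

if __name__ == "__main__":
    t1 = [("a", "x")]  # thread 1 writes a.x
    t2 = [("a", "y")]  # thread 2 writes a.y (a disjoint field)
    print(write_write_conflict(t1, t2, "object"))  # True: both need a's record
    print(write_write_conflict(t1, t2, "field"))   # False: separate records
```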

If the programmer can be certain that object granularity will not cause false conflicts (for example, because different fields of an object are never accessed concurrently by design), then object granularity is strictly better than field granularity, since it gives the same conflict behavior with less overhead. Carefully examining the program's requirements can sometimes let you avoid a supposed drawback altogether.

What does it mean by "exposes optimization opportunities" in object granularity?


We see here that with object-level granularity there will be a false conflict, because Txn1 writes a.x and a.y while Txn2 reads a.z. Field-level granularity would avoid this conflict.
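The Txn1/Txn2 case can be checked with read and write sets: two transactions conflict if one writes a tracked unit that the other reads or writes. A minimal sketch, where the helper names `unit` and `conflicts` are hypothetical and granularity is reduced to whether the field name is kept in the tracking key:

```python
def unit(obj: str, field: str, field_granularity: bool) -> tuple:
    """Tracked unit for obj.field: the individual field, or the whole object."""
    return (obj, field) if field_granularity else (obj,)

def conflicts(txn_a: dict, txn_b: dict, field_granularity: bool) -> bool:
    """Conflict if either transaction writes a unit the other reads or writes."""
    def units(accesses):
        return {unit(o, f, field_granularity) for o, f in accesses}
    wa, ra = units(txn_a["writes"]), units(txn_a["reads"])
    wb, rb = units(txn_b["writes"]), units(txn_b["reads"])
    return bool(wa & (rb | wb)) or bool(wb & ra)

if __name__ == "__main__":
    txn1 = {"writes": [("a", "x"), ("a", "y")], "reads": []}
    txn2 = {"writes": [], "reads": [("a", "z")]}
    print(conflicts(txn1, txn2, field_granularity=False))  # True (false conflict)
    print(conflicts(txn1, txn2, field_granularity=True))   # False
```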