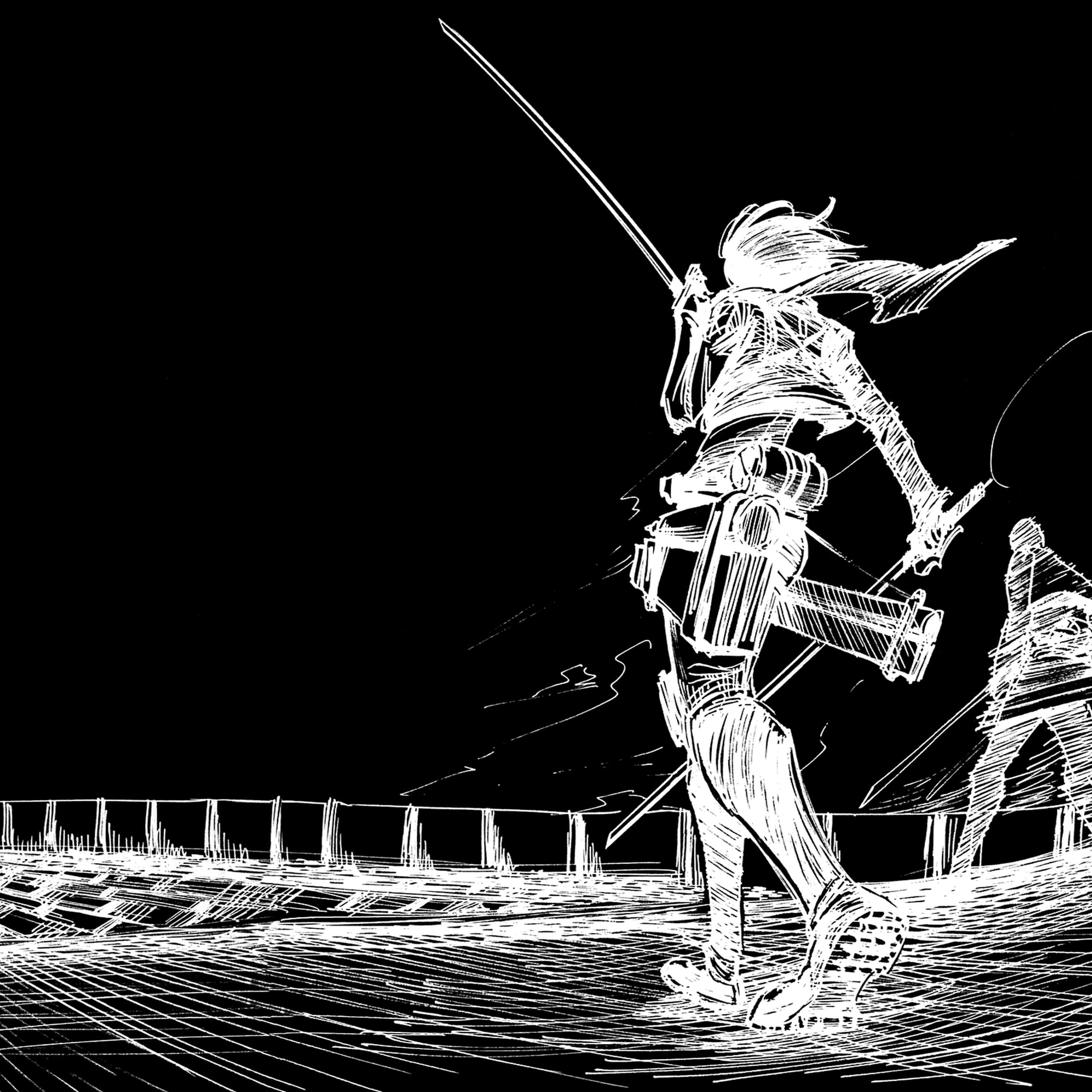

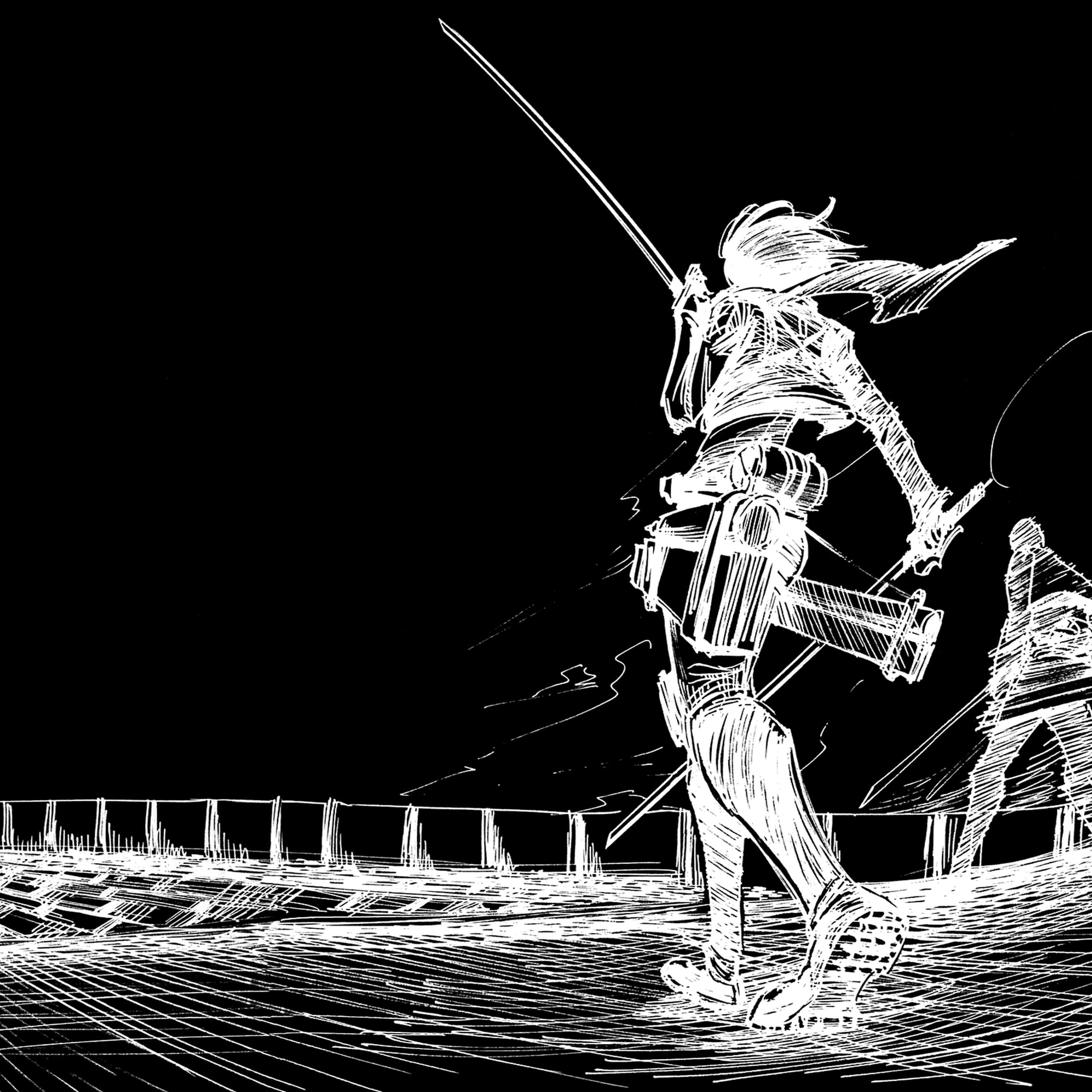

The main takeaway here is that we should never define a thread block with more CUDA threads than the number of hardware execution contexts can support. As shown here, that could lead to deadlock, because CUDA does not preempt threads. In practice, CUDA enforces this: if you try to launch a kernel whose thread block is larger than the hardware supports, the launch fails with an error instead of running.

We cannot preempt threads to avoid deadlock in CUDA because there could be over 160k threads, and the overhead of implementing preemption (saving and restoring each thread's state) for that many threads would be very high.

This convolve kernel cannot make progress because there are more threads than available execution contexts, and there are dependencies between threads within one thread block, so we deadlock on `__syncthreads()`. However, if we use the simpler version of convolve that doesn't require `__syncthreads()` and doesn't use `__shared__` memory, as shown on this slide, then I am inclined to think there is no problem. Does CUDA still report an error for this block size in that case, I wonder?
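To make the intuition concrete, here is a toy model (a sketch, not how the GPU's scheduler is actually implemented) of one thread block running on a fixed number of execution contexts with no preemption. The assumptions are: a thread keeps its context until it exits, "phase 1" and "phase 2" stand for the code before and after `__syncthreads()`, and `run_block` is a hypothetical name chosen for this example.

```python
from collections import deque


def run_block(num_threads: int, num_contexts: int, uses_barrier: bool) -> str:
    """Toy, non-preemptive model of one thread block on one core.

    Each thread runs phase 1, optionally waits at a block-wide barrier
    (standing in for __syncthreads()), runs phase 2, then exits and frees
    its execution context. Returns "completed" or "deadlock".
    """
    not_started = deque(range(num_threads))  # threads without a context yet
    resident: dict[int, str] = {}  # thread id -> "phase1" | "barrier" | "phase2"
    arrived = 0  # threads that have reached the barrier
    finished = 0

    while finished < num_threads:
        # Hand free execution contexts to threads that have not started.
        while not_started and len(resident) < num_contexts:
            resident[not_started.popleft()] = "phase1"

        # The barrier opens only once EVERY thread in the block has arrived.
        barrier_open = arrived == num_threads
        progressed = False
        for tid, state in list(resident.items()):
            if state == "phase1":
                if uses_barrier:
                    resident[tid] = "barrier"
                    arrived += 1
                else:
                    resident[tid] = "phase2"
                progressed = True
            elif state == "barrier" and barrier_open:
                resident[tid] = "phase2"
                progressed = True
            elif state == "phase2":
                del resident[tid]  # thread exits, freeing its context
                finished += 1
                progressed = True

        # Resident threads are all stuck at the barrier and cannot be
        # swapped out, so the remaining threads can never get a context.
        if not progressed:
            return "deadlock"
    return "completed"


print(run_block(num_threads=256, num_contexts=128, uses_barrier=True))   # deadlock
print(run_block(num_threads=256, num_contexts=128, uses_barrier=False))  # completed
print(run_block(num_threads=128, num_contexts=128, uses_barrier=True))   # completed
```

In this model, the barrier-free version always finishes even with more threads than contexts, because each thread eventually exits and frees its context for a thread that has not started yet. Whether real CUDA accepts such a launch is a separate question, since the runtime checks block size against hardware limits before running anything.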

And I don't think Kayvon went over the fine print at the bottom right. Can someone clarify what this part means? "CUDA semantics: threads in a block ARE running concurrently. If a thread in a block is runnable, it will eventually be run!"

So in this case, if, say, this SM has 8 warp contexts, then mapping 256 CUDA threads onto it would be fine. If we have many SMs, would the CUDA compiler map this thread block to a single SM (8 warps) or split it across two SMs (4 warps each)?
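The arithmetic behind "256 threads = 8 warps" can be sketched as follows. This assumes 32 threads per warp (the warp size on NVIDIA GPUs) and treats the 8 warp contexts per SM as the hypothetical figure from the comment. The check also assumes, as CUDA does, that a whole block is placed on a single SM. `warps_needed` and `fits_on_one_sm` are illustrative names, not CUDA APIs.

```python
WARP_SIZE = 32  # threads per warp on NVIDIA GPUs


def warps_needed(threads_per_block: int) -> int:
    """Warps a block occupies; a partially filled warp still takes a whole warp."""
    return -(-threads_per_block // WARP_SIZE)  # ceiling division


def fits_on_one_sm(threads_per_block: int, warp_contexts_per_sm: int) -> bool:
    """True if the whole block can be resident on one SM at once."""
    return warps_needed(threads_per_block) <= warp_contexts_per_sm


print(warps_needed(256))       # 8
print(fits_on_one_sm(256, 8))  # True
print(fits_on_one_sm(257, 8))  # False: 257 threads need 9 warps
```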


Here we should not create more threads than there are hardware contexts. Otherwise, deadlock will happen: the 128 threads allocated to hardware contexts get stuck at `__syncthreads()`, while the other 129 threads never get the opportunity to run on the chip.