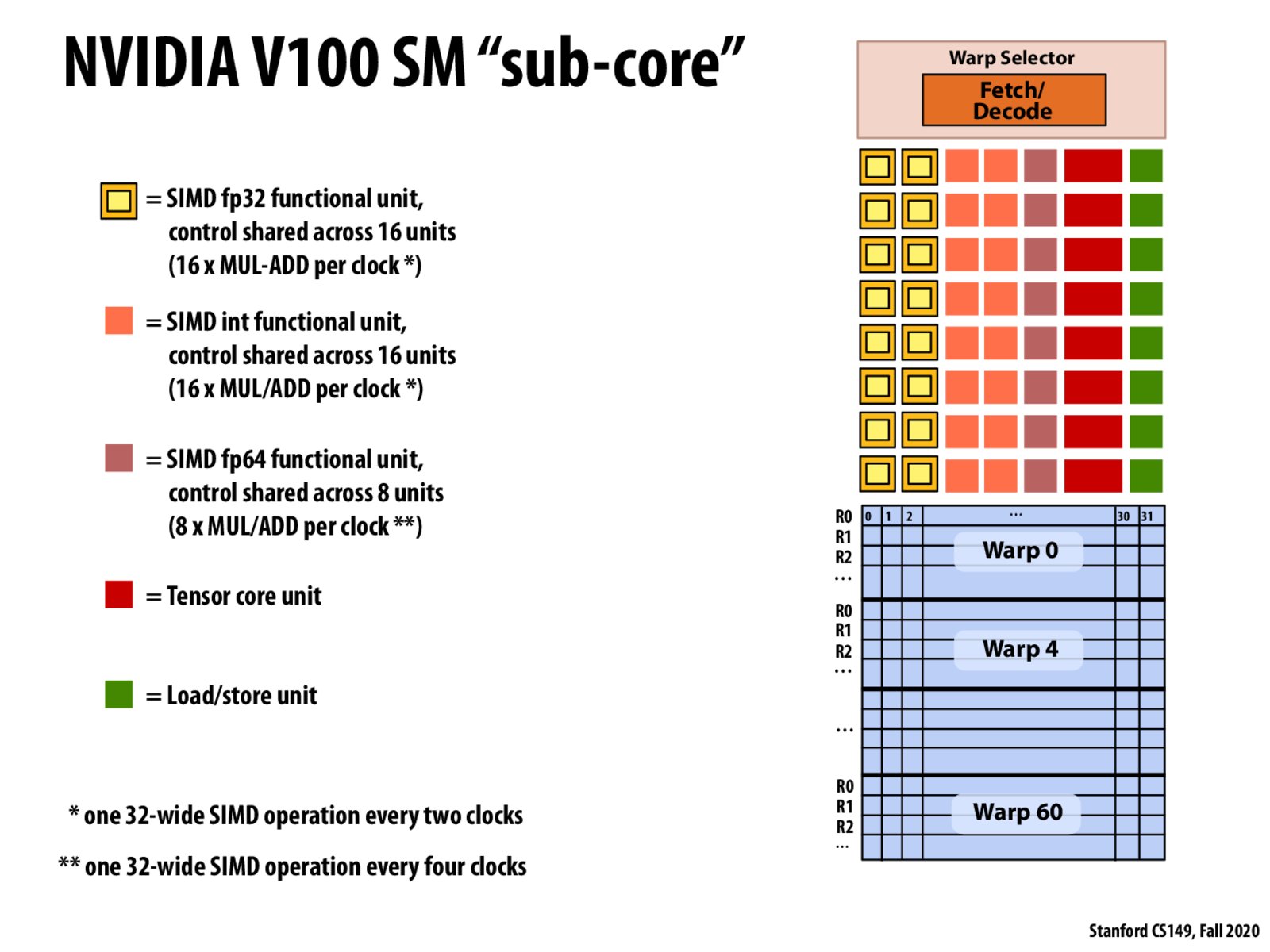

The core pipelines the execution of the 32-wide SIMD instruction by interleaving int and float operations, first issue 16bits of a float operation then 16 bits of an int operation and then repeat to complete thats why it need 2 clocks to complete the 32-wide SIMD operation

To clarify, is each warp able to run a different set of instructions?

I think each warp runs the same set since there is only one Fetch/Decode

Why are warps 32-wide when there are only 16 ALUs? This design decision is hard for me to understand - I feel like it would make more sense for warps to be 16-wide instead.

I think the reason why there is one 32-wide SIMD operation every two clocks is that the 16 ALUs are performing 16 floating-point instructions twice. In the double-precision floating point it takes four clocks because there are only 8 ALUs for double-precision floating-point instructions. Not sure about the design reason, although the decreased ratio of fetch/math operations might allow for the overlapping int/float computations that was shown in the lecture 8, 13:44 in the recording (can't find the slide for that though).

Since we have a many different functional units on a single sub-core, if our program does not utilize all the different data types for different units does that mean that these units will stay dormant? Also, in the total computational capabilities of the chip, does this include all the compute provided by having all these redundant units, or does it just assume a single set of units will be used at a time?

@swknoz, I think your second question is really interesting, because it's kind of like asking about SIMD divergence but instead of diverging on an if/else condition now we are diverging on some threads in a warp processing different types of data: f32/ints/f64.

In mind I think that since we aren't splitting up the computation in terms of one f32 ALU doing an if and the other doing an else, and instead we have one f32 ALU doing something and another int ALU doing something completely different that means that in fact they can run simultaneously. So this would increase the total computational capabilities.

Why do we have the concept of fp32/fp64/int functional units in GPU but only scalar ALUs in CPUs? Also, I often hear about half precision (fp16) on GPUs. Is it supported by fp16 functional units in newer GPUs?

@x2020 CPU also have a separate floating processing unit (FPU). This is why OS generally doesn't use floating point because it will need to store the FPU register, which creates a lot of overhead.

Please log in to leave a comment.

A warp runs 32-wide SIMD operations on a GPU. If our instruction varies a lot for a 32-wide vector and causes imbalanced workload, then there will be huge divergence in each SIMD operation. This is called warp divergence. Usually many branches of the program (if/else, while) will cause warp divergence.