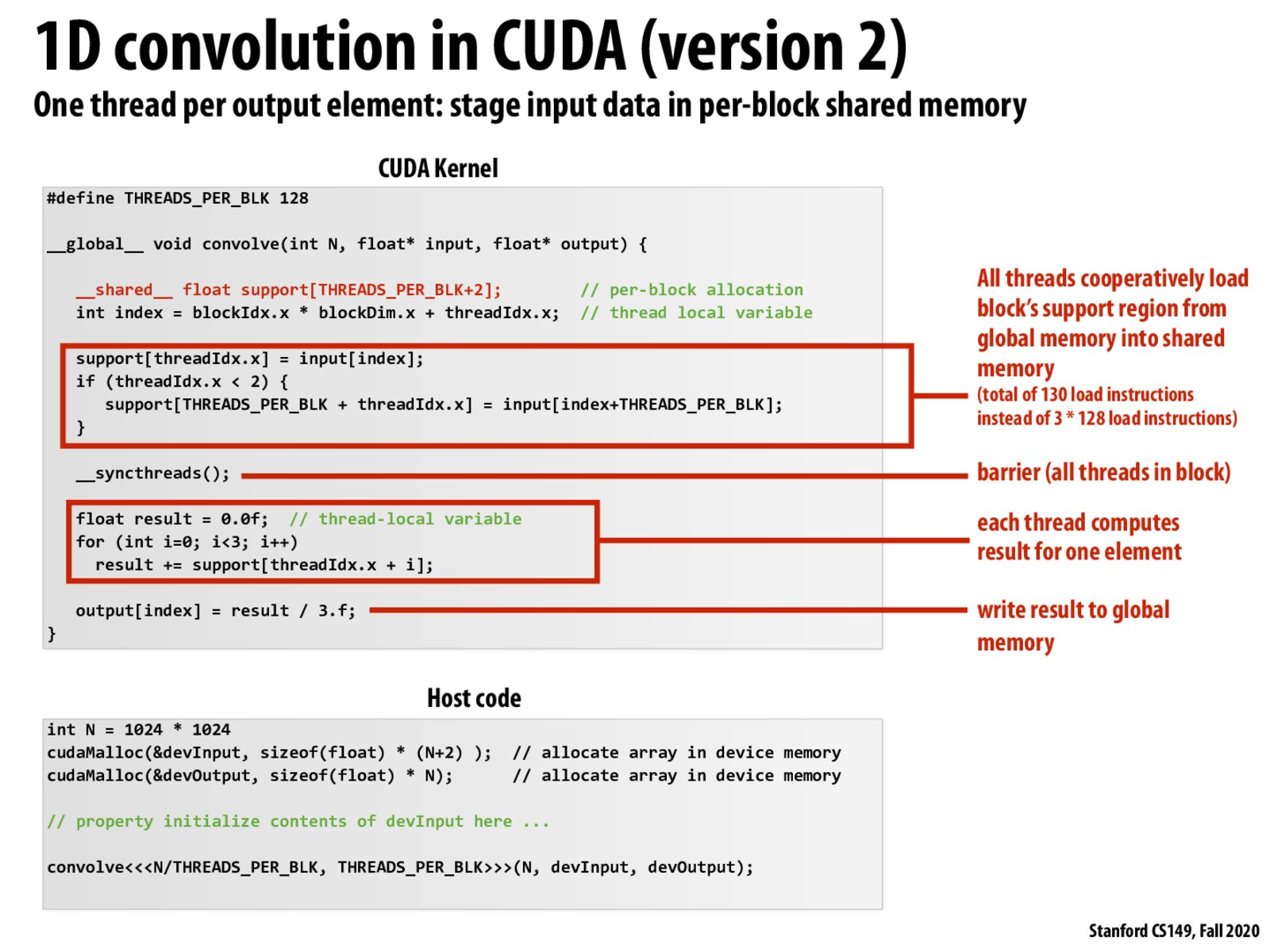

From my understanding, as the same as what @pslui88 said, this version can improve the implement speed. Because this saves the load result on the shared memory, we can complete the following task with only one load for each input. For example, as in the slide, if we use the private memory, we need to load three inputs for each calculation. However, if we use version 2, we only need to load each for all calculation.

Prior to seeing this slide, mental model was that all threads run kernels whenever scheduled and so there is no sort of synchronization. Seeing the "__syncthreads" I think I am wrong: all threads in a block start running the kernel at the same time (allowing them to sync in the middle of execution if necessary), and the next block will not start until all threads have finished. Can someone correct me if I'm wrong?

One key emphasis of this slide is that the shared "support" variable exists in its own address space on the GPU chip rather than on host memory. The memory access for these shared elements is very fast, and each CUDA thread can be more efficient using the shared memory.

On an unrelated note, what is the significance of the last "convolve" line's syntax? Why are three angled brackets necessary?

@jlara From the CUDA C++ Guide: "A kernel is defined using the global declaration specifier and the number of CUDA threads that execute that kernel for a given kernel call is specified using a new <<<...>>> execution configuration syntax." It seems like that line is essentially what kicks off this process.

@dishpanda I think the "syncthread" is to wait for the memory copy from global memory to shared memory to finish before starting the computation.

It is worth noting how the threads cooperatively load from global memory into shared block memory, and notice how the workload of loads are roughly balanced.

Please log in to leave a comment.

This slide shows a more efficient way to accomplish the same thing as the slide before. Whereas before, every CUDA thread loaded from and stored to the device's global memory, this slide takes advantage of the more local per-block shared memory (this is the support array in the slide). Each thread loads in part of the input array from global memory into support. After all the threads do their part and reach the barrier, they each compute their individual results using their own portions of support, then finally write the result back to global memory.