@kevtan Great question! I'm also curious about the answer. I guess there are different kinds of shaders, and some of them might be useful for processing vertices. For example, a "light" shader might be used to throw a vertex out of the pipeline if there's no light on it, so the downstream pipeline won't have to render an invisible vertex.

@kevtan, this is because real-time rendering uses two kinds of shaders, the vertex shader and the fragment (pixel) shader. The vertex shader processes the vertices (e.g., applies transforms), and the fragment shader computes the pixel colors.
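To make the split concrete, here is a minimal sketch in plain Python rather than a real shading language. The names `vertex_shader`, `fragment_shader`, and `mat_vec` are made up for illustration, and the lighting model (a single scalar intensity) is an assumption, not something from the slides.

```python
# Illustrative stand-ins for the two programmable stages (hypothetical names).

def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def vertex_shader(position, transform):
    """Runs once per vertex: move an object-space position into clip space."""
    x, y, z = position
    return mat_vec(transform, [x, y, z, 1.0])

def fragment_shader(base_color, light_intensity):
    """Runs once per fragment: compute an RGB color, clamped to 1.0."""
    return tuple(min(1.0, c * light_intensity) for c in base_color)

# A transform that translates by +2 along x (assumed for the example).
translate_x = [
    [1.0, 0.0, 0.0, 2.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

clip_pos = vertex_shader((1.0, 0.0, 0.0), translate_x)
color = fragment_shader((1.0, 0.5, 0.25), 0.5)
```

The vertex function only deals with positions, while the fragment function only deals with colors, which is the division of labor described above.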

@haiyuem is onto the point: if the vertex shader determines that a vertex is not visible, then there's no need to render it in the fragment (pixel) shader. Another example: if the vertex shader finds that the vertex lies outside the clipping planes, there is also no need to render it.
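A rough sketch of what such a clipping test could look like, assuming OpenGL-style clip coordinates where a point is inside the view volume when each of x, y, z lies in [-w, w]. The function name `inside_clip_volume` is hypothetical, and checking one vertex at a time is a simplification, since in practice clipping is applied to whole primitives.

```python
def inside_clip_volume(clip_pos):
    """Return True if a clip-space point (x, y, z, w) lies inside the view volume.

    Assumes the OpenGL convention: -w <= x, y, z <= w with w > 0.
    """
    x, y, z, w = clip_pos
    return w > 0 and all(-w <= c <= w for c in (x, y, z))

visible = inside_clip_volume([0.5, -0.5, 0.0, 1.0])  # within the volume
culled = inside_clip_volume([3.0, 0.0, 0.0, 1.0])    # x exceeds w
```

Note that nothing in this test refers to a material; it only looks at the position.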

@haiyuem @wanze Thanks for the responses! My question is: shouldn't the vertex shader be shared between all materials? For instance, whether or not a vertex is occluded doesn't depend on the material, does it? Whether a vertex is outside the clipping planes also shouldn't depend on the material, right? So why is this part of the pipeline made customizable?

@kevtan If a vertex is not even visible on the screen, you won't care about what material it is.

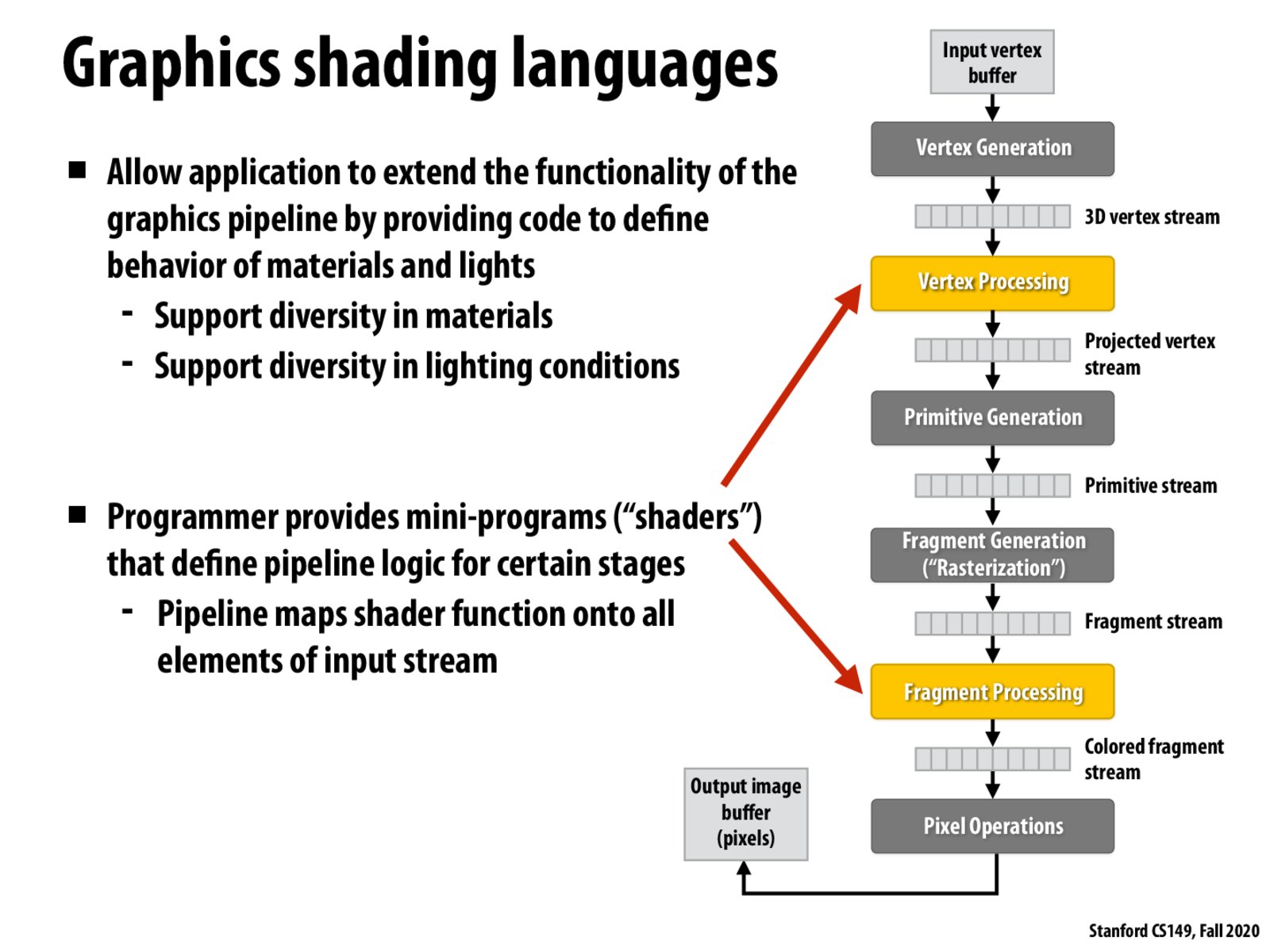

I understand why there's an arrow going into the "Fragment Processing" stage of the pipeline, because that's the stage where the fragment colors are determined, but I don't understand why there's an arrow going into the "Vertex Processing" stage. As far as I know (from slide 14), this stage is in charge of projecting the 3D geometry onto a 2D image plane. This seems to be something that should be the same for all graphics workloads. Changing the material shouldn't change the projective geometry, right?