Now that NVIDIA also uses AI to predict pixel values when rendering images, I'm wondering how their graphics pipeline changes. How does the pipeline work if some pixels are rendered with the traditional graphics pipeline while other pixels or scenes are produced by a (presumably) parallel AI algorithm?

@nickbowman I'm not sure if the pipeline is what enables the parallelism. I think the parallelism is intrinsic to the problem itself. The pipeline just seems to be a description of how the overall problem is broken down into smaller subproblems, which, as you mentioned, are all highly parallelizable.

@kasia4 Are you referring to Deep Learning Super Sampling (DLSS)? I don't think NVIDIA has done away with traditional rendering. I believe DLSS is just another stage of the pipeline that runs after the traditional rendering stages.

"After rendering the game at a lower resolution, DLSS infers information from its knowledge base of super-resolution image training, to generate an image that still looks like it was running at a higher resolution." (source)

@kasia4 I agree with kevtan that NVIDIA still uses the traditional graphics pipeline. There might be additional layers of predictions/optimizations using DL.
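
To make the "extra stage after traditional rendering" idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `render_low_res`, `upscale_placeholder`, and `render_frame` are hypothetical names, and the upscaler is plain nearest-neighbor repetition standing in for the trained network. It is not how NVIDIA implements DLSS, only the shape of "render small, then infer a larger image."

```python
def render_low_res(width, height):
    """Stand-in for the traditional pipeline: produces a simple gradient image."""
    return [[(x + y) % 256 for x in range(width)] for y in range(height)]


def upscale_placeholder(image, factor):
    """Placeholder for a learned upscaler (assumption: nearest-neighbor repetition).

    A DLSS-style stage would run a trained neural network here instead.
    """
    out = []
    for row in image:
        # Repeat each pixel horizontally, then repeat the widened row vertically.
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def render_frame(out_width, out_height, factor=2):
    # Stage 1: traditional rendering at a reduced resolution.
    low = render_low_res(out_width // factor, out_height // factor)
    # Stage 2: upscaling stage appended after the traditional stages.
    return upscale_placeholder(low, factor)


frame = render_frame(8, 4)
print(len(frame[0]), "x", len(frame))
```

The point of the sketch is only the ordering: the traditional stages still run, and the upscaling step consumes their output.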


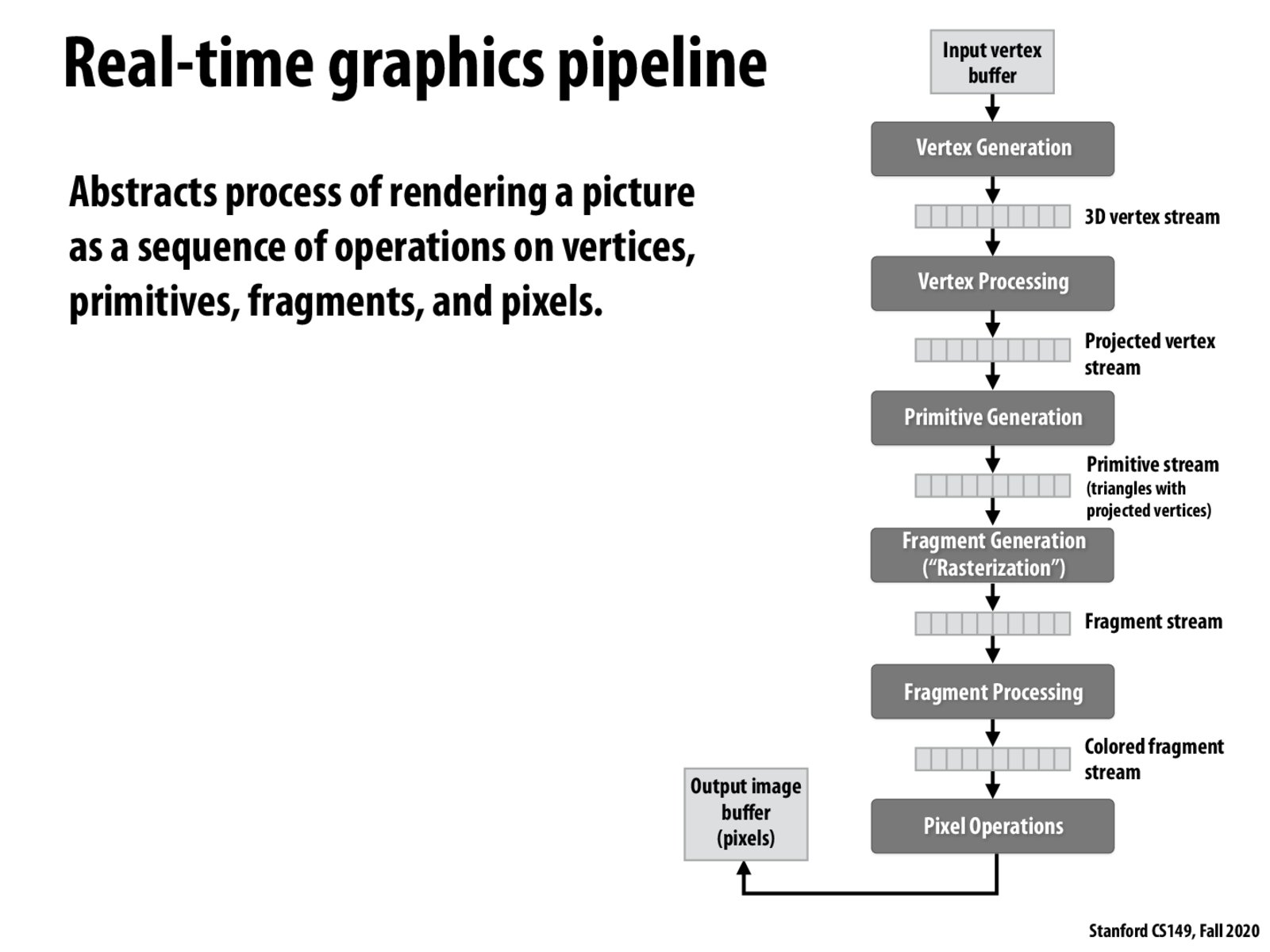

From my understanding of what was discussed in lecture, the benefit of framing graphics operations in terms of this pipeline is that each stage applies a function to millions of elements (vertices, triangles, pixels, etc.) that are for the most part independent of one another. That independence is what enables massive parallelism at each stage.
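
As a rough illustration of "apply one function to many independent elements," here is a toy sketch in Python. The stage functions `transform_vertex` and `shade_fragment` and the helper `run_stage` are made up for this example, and rasterization is skipped entirely. A real GPU does this in hardware across thousands of lanes, not with a thread pool.

```python
from concurrent.futures import ThreadPoolExecutor


def transform_vertex(v, scale=2.0, offset=(1.0, 0.0)):
    """Hypothetical per-vertex stage: scale, then translate, a 2D point."""
    x, y = v
    return (x * scale + offset[0], y * scale + offset[1])


def shade_fragment(p):
    """Hypothetical per-fragment stage: brightness computed from position only."""
    x, y = p
    return min(255, int(abs(x) + abs(y)) * 10)


def run_stage(fn, elements, workers=4):
    # Each element is processed independently of the others, so the work can
    # be split across workers in any order without changing the result.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fn, elements))


vertices = [(float(i), float(i % 3)) for i in range(12)]
transformed = run_stage(transform_vertex, vertices)
shaded = run_stage(shade_fragment, transformed)

# The parallel result matches a plain sequential map over the same elements.
assert transformed == [transform_vertex(v) for v in vertices]
assert shaded == [shade_fragment(p) for p in transformed]
```

Because no element reads another element's result within a stage, the number of workers only affects speed, never the output.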