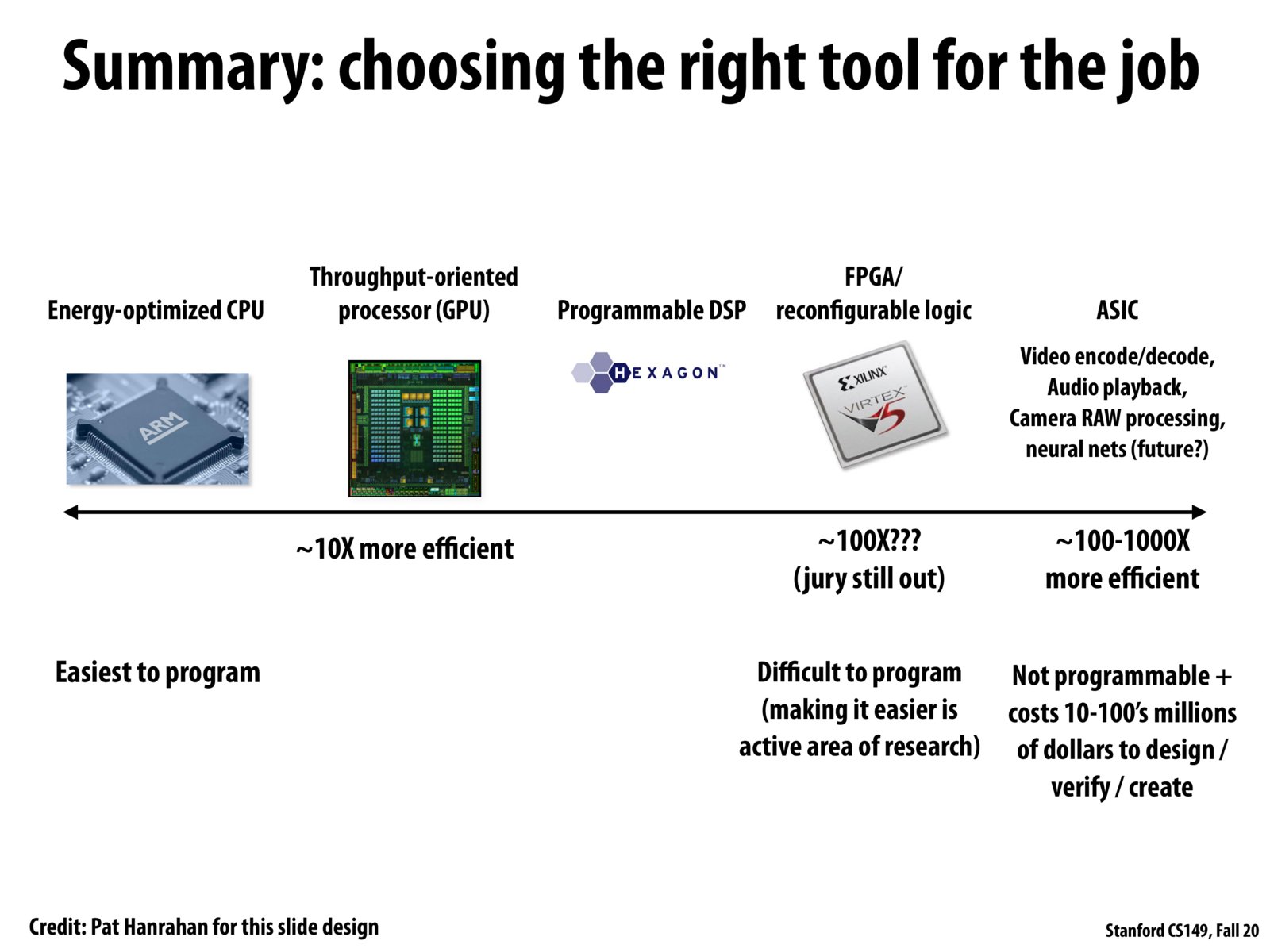

The evolution toward more domain-specific processing units is really cool to me, and seeing new programming languages come out that can generate FPGA / ASIC designs from something easier than Verilog is pretty sick.

In a very real way, we're somewhat moving backward... We started out by building very specific hardware for very specific purposes (the Bombe, ENIAC, etc.), then moved to highly generalized hardware that can be programmed for specific purposes, and now it seems like we're moving toward more specific hardware that can be reprogrammed slightly but lacks the large-scale generalizability of modern CPUs.

There is some interesting ongoing research on using FPGAs with large neural networks and computer vision models. The idea is, given a data set, not only to train a network that makes accurate predictions on the data but also to train and evaluate it quickly on a customised FPGA board. The Tomato framework does this and produces Verilog that configures an FPGA to perform the desired classification very quickly. Additionally, because space on FPGAs is limited, it is often challenging to fit large networks onto such boards, so bitwidth optimisation is also applied at different levels to avoid spending memory on precision the model does not need.
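To make the bitwidth idea concrete, here is a minimal sketch of symmetric uniform quantization in Python. It is not how Tomato does it; the function names and the example weights are made up for illustration, and a real flow would pick bit widths per layer or per tensor.

```python
def quantize(weights, bits):
    """Map floats to signed `bits`-bit integers (symmetric, uniform)."""
    qmax = 2 ** (bits - 1) - 1                 # e.g. 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the integer codes."""
    return [v * scale for v in q]


# Hypothetical weights, only for illustration.
weights = [0.5, -1.0, 0.25, 0.75, -0.1]
q8, scale8 = quantize(weights, bits=8)
q4, scale4 = quantize(weights, bits=4)

# Worst-case rounding error grows as the bit width shrinks.
err8 = max(abs(w - r) for w, r in zip(weights, dequantize(q8, scale8)))
err4 = max(abs(w - r) for w, r in zip(weights, dequantize(q4, scale4)))
```

Going from 32-bit floats to 8-bit integers cuts weight storage by 4x, and the rounding error per weight stays within half a quantization step (`scale / 2`).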

To reduce storage overhead and inference runtime, people often use smaller bit widths for weight and activation tensors (8 bits, for example). Some papers (XNOR, BNN) are more aggressive: they go down to as few as 1 to 3 bits and do the computation with logic rather than DSPs on the FPGA. This makes inference much faster on FPGAs but suffers from accuracy loss on large datasets.
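For the most aggressive case, 1-bit (binary) weights and activations, the multiply-accumulate turns into an XNOR followed by a popcount, which is why it maps onto plain logic instead of DSP blocks. A rough Python sketch of that trick, with helper names and example vectors that are my own and not taken from the papers:

```python
def pack_signs(values):
    """Pack +1/-1 values into an int; bit i is set when values[i] == +1."""
    word = 0
    for i, v in enumerate(values):
        if v > 0:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, n):
    """Dot product of two n-element +1/-1 vectors stored as packed bits."""
    mask = (1 << n) - 1
    agree = ~(a_bits ^ b_bits) & mask      # XNOR: 1 wherever the signs match
    matches = bin(agree).count("1")        # popcount
    # Each match contributes +1 and each mismatch -1.
    return 2 * matches - n


a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]
reference = sum(x * y for x, y in zip(a, b))
result = binary_dot(pack_signs(a), pack_signs(b), len(a))
```

Here `result` equals the ordinary dot product `reference`, but it is computed with only bitwise operations and a bit count.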

My previous experience programming FPGAs using C in OpenCL exposed several drawbacks:

1. Programmability. It takes 1 minute to write the code, 15 minutes to run the CPU simulation, and 12 hours to synthesize the circuit and place and route it. Even after all those hours of waiting, sometimes the circuit cannot be generated because of resource limitations or conditions like excessive fanout.
2. Portability. The code has to be tuned for a specific FPGA board to make full use of its resources.
3. Low clock frequency. The critical path (the longest path in the circuit) determines the highest clock frequency the FPGA can run at, and that frequency is very low when the circuit is compiled from C, compared to a GPU.
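The link between the critical path and the clock frequency can be sketched in a few lines of Python. This is a simplified model under my own assumptions: the gate names and delays are invented, and it ignores routing delay, setup time, and clock skew, which real timing analysis includes.

```python
from functools import lru_cache


def critical_path_ns(delays_ns, fanin):
    """Longest accumulated delay through a combinational DAG, in ns."""
    @lru_cache(maxsize=None)
    def arrival(node):
        # A node's output is ready once its slowest input has arrived
        # and its own delay has elapsed.
        inputs = fanin.get(node, [])
        return delays_ns[node] + max((arrival(i) for i in inputs), default=0.0)

    return max(arrival(node) for node in delays_ns)


# Hypothetical circuit: a and b feed c, and c feeds d.
delays_ns = {"a": 1.0, "b": 2.0, "c": 1.5, "d": 0.5}
fanin = {"c": ["a", "b"], "d": ["c"]}

t_crit = critical_path_ns(delays_ns, fanin)   # b -> c -> d
fmax_mhz = 1000.0 / t_crit                    # period in ns -> frequency in MHz
```

The clock period cannot be shorter than `t_crit`, so one long chain of logic generated from a C loop body caps the frequency of the whole design.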

FPGAs work well when the application is so specialized that a CPU or GPU cannot easily implement it, like the BNNs mentioned above, and when the application can tolerate the lower clock frequency. More compiler research is needed to address the 3 problems I had.

Is a Programmable DSP a CPU with some specialized silicon to aid in FFTs and signal processing?

I wonder what makes FPGA compilers so slow. Surely it's not for lack of research effort?

I've recently seen a few applications being ported to FPGAs, or at least programmed with an "FPGA-first" mentality. However, it seems like they take a lot of effort. Personally, I find writing Verilog very challenging, but I wonder if that is just because we are typically taught computer science on general-purpose processors. If we had started out programming FPGAs, perhaps we would not consider it a difficult or time-consuming task?

I'm also curious about what exactly makes compiling for an FPGA a slow process. At a high level, it makes sense that the process would be slower because it involves "configuring the hardware" according to what the code says, but what does this mean exactly? How does the process work? And I'm sure a lot of research is going into expediting this process; what are the obstacles researchers face?

@tspint @donquixote the slow process is specific to digital circuit design on FPGAs, and the slowest part is called place and route. An FPGA consists of lots of lookup tables, or logic units; you can think of them as NAND gates that can implement any logic. After you describe the logic in C or a hardware description language, the circuit design tool needs to map that logic onto the logic units on the actual board by turning switches on or off.
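A lookup table is easy to picture in code: a k-input LUT is just 2^k stored bits, and "configuring" it means filling in that table. A toy Python model (the `LUT` class is my own illustration, not any vendor's primitive):

```python
class LUT:
    """A k-input lookup table: 2**k configuration bits, indexed by the inputs."""

    def __init__(self, k, func):
        self.k = k
        # "Programming" the LUT: evaluate the desired function once for
        # every input combination and store the resulting bit.
        self.table = [
            func(*[(idx >> i) & 1 for i in range(k)]) & 1
            for idx in range(2 ** k)
        ]

    def __call__(self, *inputs):
        # At run time the inputs only select which stored bit to output.
        idx = sum(bit << i for i, bit in enumerate(inputs))
        return self.table[idx]


# The same hardware structure becomes different logic by changing the table.
xor3 = LUT(3, lambda a, b, c: a ^ b ^ c)
majority = LUT(3, lambda a, b, c: (a & b) | (a & c) | (b & c))
nand2 = LUT(2, lambda a, b: 1 - (a & b))
```

Place and route then has to decide which physical LUT on the chip holds each of these tables and how the wires between them are switched.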

As I mentioned, the critical path in the circuit determines the highest clock frequency. You want to put logic that depends on each other as close together as possible, and this optimization is an NP-hard problem. So the tool spends hours running heuristic algorithms to find a good circuit placement, and occasionally it fails and tells you the logic cannot be placed. In my opinion, the programming experience could be much better if FPGA companies offered a faster, less thorough optimization mode that trades circuit performance for compile time, so users could prototype.
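To give a feel for the kind of heuristic involved, here is a toy placement loop using simulated annealing in Python. I am not claiming this is what any particular vendor tool runs; the grid size, cost function (total Manhattan wirelength), and cooling schedule are all assumptions chosen to keep the sketch short.

```python
import math
import random


def wirelength(placement, nets):
    """Total Manhattan distance over all two-pin nets."""
    total = 0
    for a, b in nets:
        (ax, ay), (bx, by) = placement[a], placement[b]
        total += abs(ax - bx) + abs(ay - by)
    return total


def anneal_placement(cells, nets, width, height, steps=5000, seed=0):
    """Place one cell per grid slot, swapping pairs to shorten the wiring."""
    rng = random.Random(seed)
    slots = [(x, y) for x in range(width) for y in range(height)]
    rng.shuffle(slots)
    placement = dict(zip(cells, slots))      # random starting placement
    cost = initial_cost = wirelength(placement, nets)
    best, best_cost = dict(placement), cost
    temperature = 5.0
    for _ in range(steps):
        a, b = rng.sample(cells, 2)
        placement[a], placement[b] = placement[b], placement[a]
        new_cost = wirelength(placement, nets)
        delta = new_cost - cost
        # Always accept improvements; accept some bad moves while "hot"
        # so the search can escape local minima.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = dict(placement), cost
        else:
            placement[a], placement[b] = placement[b], placement[a]  # undo
        temperature = max(0.01, temperature * 0.999)
    return best, best_cost, initial_cost


# Hypothetical design: 16 cells connected in a chain, placed on a 4x4 grid.
cells = list(range(16))
nets = [(i, i + 1) for i in range(15)]
best, best_cost, initial_cost = anneal_placement(cells, nets, 4, 4)
```

Even this toy version evaluates thousands of candidate swaps for 16 cells. A real design has hundreds of thousands of logic units plus routing and timing constraints, which is where the hours go.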

You might wonder why such a process is not seen on CPUs, GPUs, or ASICs. That's because engineers have already spent lots of hours on circuit placement and validation before we get to use them.

@tspint Verilog is drastically different from programming languages because it describes how circuits are built. I find debugging a circuit much, much harder than debugging software, especially when there is a race condition and you cannot simply put a lock around your circuit signal.

Stanford is working on a DSL (well... it seems to be a Scala / Java library, but as mentioned in lecture, the line between a library and a DSL is blurred) that tries to make FPGA development more like traditional programming for a CPU. https://spatial-lang.org


An FPGA is called field-programmable because it is designed so that a customer can configure it after manufacturing.

Sparkfun has some hands-on tutorials on programming FPGA: https://learn.sparkfun.com/tutorials/programming-an-fpga/all