So, are OpenMP and CUDA DSLs or libraries?

@Nian OpenMP is an API; I don't believe its scope is narrow enough to serve one particular domain optimally. Moreover, it gives developers a lot of low-level control, which isn't a desired characteristic of a DSL. I'd loosely call CUDA a DSL in the sense that it is a programming language tied to the GPU domain. However, I think it falls a little short in its high-level offerings: as we saw in A3, you really needed to know your GPU to squeeze out optimal performance. DSLs are ideally declarative, but CUDA doesn't try to hide much of the implementation from the developer.

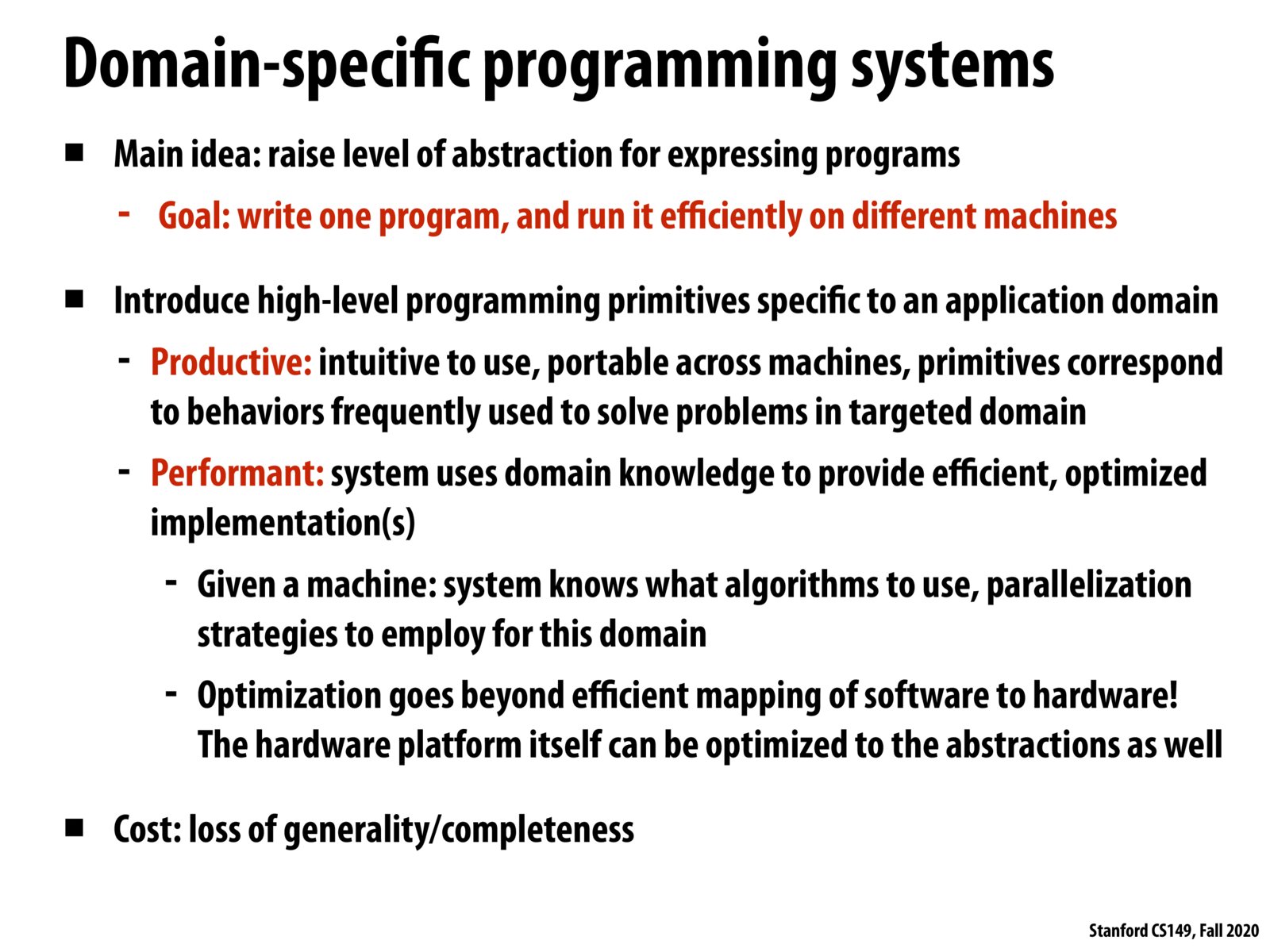

The goal of a DSL seems to be to provide a very powerful abstraction for a specific type of problem that both enables additional optimizations to be performed on code automatically and makes code portable across heterogeneous machines. This of course comes at the cost of generality. I am curious, though: while this abstraction may be very useful to the programmer, isn't it extremely challenging for the DSL creator to implement? I would imagine the DSL compiler author needs deep knowledge of a very wide range of hardware architectures to ensure the generated code runs efficiently on all of them.

Can DSLs take advantage of special hardware? For example, if a chip has dedicated hardware for certain kinds of processing, will the DSL be able to use it in a general way? Or is it just up to the compiler to do fancy things based on the target chip/architecture? I'm thinking that as the hardware moves along the spectrum from CPU to ASIC, the code might need to lean on the hardware more and more.

As mentioned above, I understand the main goal of DSLs to be maximizing productivity and performance at the expense of generality. A relevant example is the embedded DSL PyTorch, which is exceptionally performant at what it does (ML/matrix operations) while also giving developers who have the mathematical background their ML/AI work requires an abstraction for rapid prototyping and testing. The loss of generality shows in the fact that PyTorch itself does not offer functions for general-purpose use (its functions mainly deal with matrix operations).

Responding to the first poster, DSLs can be embedded in host languages (like Python or C++), which makes learning new syntax less of an issue. It's also not clear to me whether something like ISPC should be classified as a language or a library.
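To make the "embedded in a host language" point concrete, here is a minimal sketch in Python, assuming a toy vector-expression DSL. Operator overloading lets ordinary `+` and `*` build an expression tree instead of computing values, so the DSL needs no new parser, and a small domain-specific pass can then rewrite the tree before it runs (here, fusing `a * b + c` into one node). All names (`Expr`, `Var`, `FusedMulAdd`, `optimize`, `evaluate`) are hypothetical; this is not how PyTorch or any real DSL is implemented.

```python
from __future__ import annotations

from dataclasses import dataclass


class Expr:
    """Base class: operator overloading turns ordinary Python syntax into DSL nodes."""

    def __add__(self, other: Expr) -> Expr:
        return Add(self, other)

    def __mul__(self, other: Expr) -> Expr:
        return Mul(self, other)


@dataclass
class Var(Expr):
    name: str


@dataclass
class Add(Expr):
    lhs: Expr
    rhs: Expr


@dataclass
class Mul(Expr):
    lhs: Expr
    rhs: Expr


@dataclass
class FusedMulAdd(Expr):
    a: Expr
    b: Expr
    c: Expr


def optimize(e: Expr) -> Expr:
    """Domain-specific rewrite: turn (a * b) + c into one fused node."""
    if isinstance(e, Add):
        lhs, rhs = optimize(e.lhs), optimize(e.rhs)
        if isinstance(lhs, Mul):
            return FusedMulAdd(lhs.lhs, lhs.rhs, rhs)
        return Add(lhs, rhs)
    if isinstance(e, Mul):
        return Mul(optimize(e.lhs), optimize(e.rhs))
    return e


def evaluate(e: Expr, env: dict[str, list[float]]) -> list[float]:
    """Interpret the tree element-wise over Python lists (a stand-in for a real backend)."""
    if isinstance(e, Var):
        return env[e.name]
    if isinstance(e, Add):
        return [x + y for x, y in zip(evaluate(e.lhs, env), evaluate(e.rhs, env))]
    if isinstance(e, Mul):
        return [x * y for x, y in zip(evaluate(e.lhs, env), evaluate(e.rhs, env))]
    if isinstance(e, FusedMulAdd):
        a, b, c = (evaluate(x, env) for x in (e.a, e.b, e.c))
        # Single loop: no temporary list holding a * b is built.
        return [x * y + z for x, y, z in zip(a, b, c)]
    raise TypeError(f"unknown node: {e!r}")


a, b, c = Var("a"), Var("b"), Var("c")
program = a * b + c  # builds Add(Mul(a, b), c); nothing is computed yet
fused = optimize(program)
env = {"a": [1.0, 2.0], "b": [3.0, 4.0], "c": [10.0, 20.0]}
print(type(fused).__name__)  # FusedMulAdd
print(evaluate(fused, env))  # [13.0, 28.0]
```

The design choice worth noting is that the user writes plain host-language code, while the DSL owns the program representation; that ownership is what lets it apply optimizations a general-purpose library call could not see.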

I'm confused by the phrase "run it efficiently on different machines". That isn't the kind of generality a DSL gives up, right? The generality a DSL gives up is the ability to solve all kinds of problems in the same language. But for some specific problems, it can guarantee a solution that works on heterogeneous architectures.

@icebear101 I think being able to run on different machines is the productivity part, and running efficiently is the performance part. I agree with you that generality refers to the ability to solve all kinds of problems.

DSLs give up generality for the sake of productivity and performance. To me, productivity doesn't seem to be the biggest "win" from DSLs (because the programmer has to learn a new system/framework), but performance can be greatly improved thanks to domain-specific optimizations at the software and hardware levels. Later in the lecture we look at Halide, an image-processing DSL whose compiler generates optimized code automatically, as long as the programmer can describe at a high level how the program should be parallelized and the application belongs to the image-processing domain.
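The "high-level idea of how to parallelize" maps to Halide's separation of the *algorithm* (what each output pixel is) from the *schedule* (the order and grouping in which pixels are computed). Below is a minimal sketch of that idea in plain Python, assuming a 3-tap horizontal box blur on a small integer image. The names `blur_x`, `schedule_row_major`, and `schedule_tiled` are hypothetical and are not Halide's API; real Halide schedules also cover vectorization, parallelism, and compute placement, and are compiled to native code rather than interpreted.

```python
from typing import Callable, List

Image = List[List[int]]
PointFn = Callable[[Image, int, int], int]


def blur_x(img: Image, x: int, y: int) -> int:
    """Algorithm: the value of one output pixel. No loop order is specified here."""
    w = len(img[0])
    left = img[y][max(x - 1, 0)]  # clamp reads at the left/right borders
    right = img[y][min(x + 1, w - 1)]
    return (left + img[y][x] + right) // 3


def schedule_row_major(f: PointFn, img: Image) -> Image:
    """Schedule 1: visit pixels row by row."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] = f(img, x, y)
    return out


def schedule_tiled(f: PointFn, img: Image, tile: int = 2) -> Image:
    """Schedule 2: visit pixels tile by tile (a locality-oriented loop order)."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for ty in range(0, h, tile):
        for tx in range(0, w, tile):
            for y in range(ty, min(ty + tile, h)):
                for x in range(tx, min(tx + tile, w)):
                    out[y][x] = f(img, x, y)
    return out


img = [[0, 3, 6, 9], [12, 15, 18, 21], [3, 3, 3, 3]]
print(schedule_row_major(blur_x, img))  # [[1, 3, 6, 8], [13, 15, 18, 20], [3, 3, 3, 3]]
print(schedule_tiled(blur_x, img) == schedule_row_major(blur_x, img))  # True
```

Because the algorithm is written once, trying a different schedule cannot change the result, only how the work is ordered; in a real DSL that freedom is what lets the compiler (or programmer) tune for a particular machine.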