I can see how AI is pushing the boundaries of computing. It seems like every component of the computer (memory, bandwidth, cache size, processing capability, etc.) is being pushed to its limits by these large-scale machine learning algorithms.

Does hardware tend to become obsolete quickly? A common joke in the computer-building community is that your top-of-the-line computer will be obsolete within five years due to advances in CPUs, GPUs, etc. (e.g., NVIDIA recently released the RTX 3070, a $500 graphics card that performs about the same as the 2080 Ti, a $1k+ high-end graphics card from 2018). I'm curious whether this is an issue companies face, or whether supercomputers are built to last a considerable amount of time without becoming obsolete (compared with systems built by rival companies).

In this class we focused a lot on fitting things into memory/cache and lowering the communication cost between machines. I wonder if there are any settings where using faster storage, such as flash, is still significantly important?


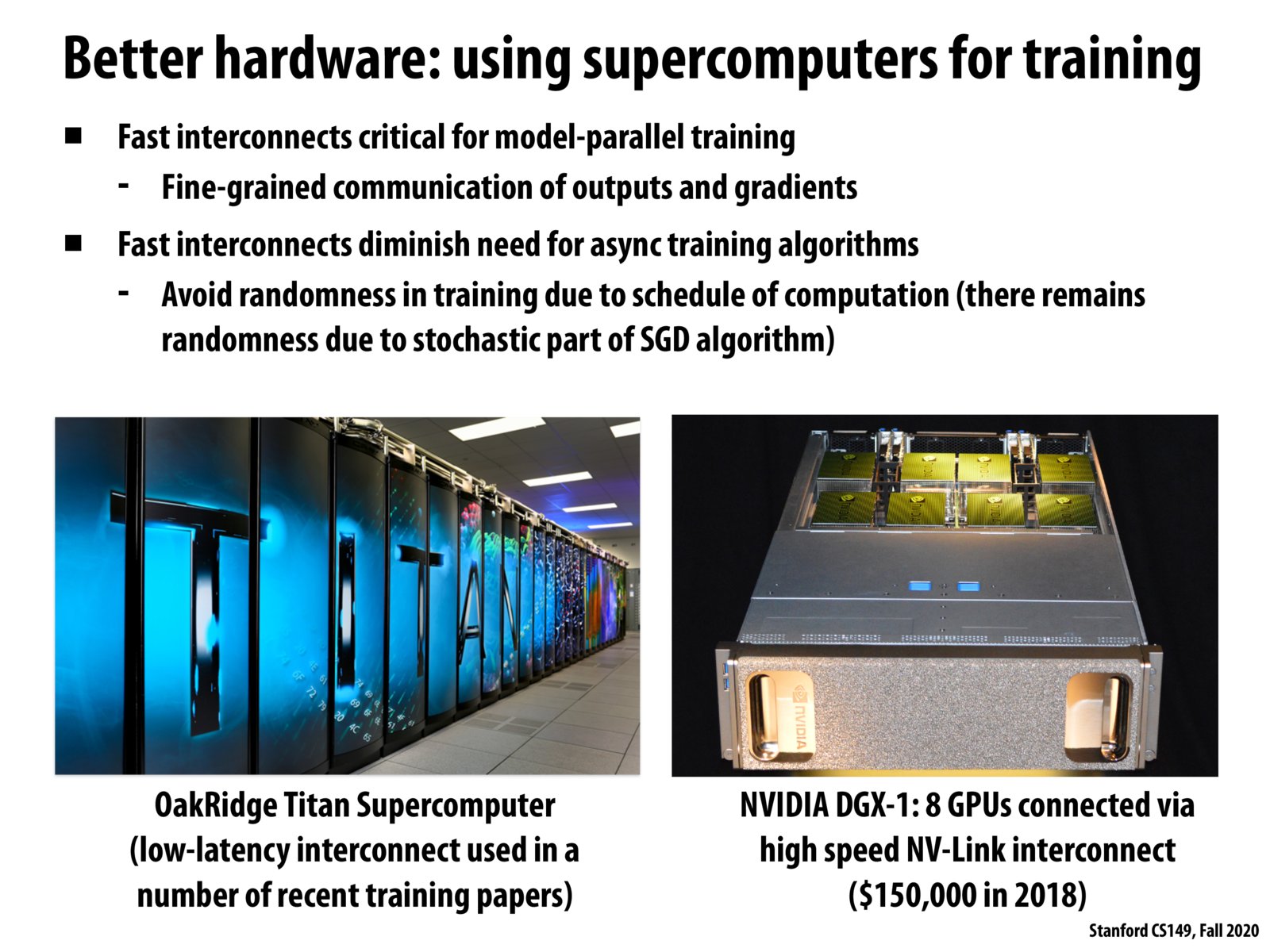

To summarize Kayvon: it's expensive to communicate between multiple machines. To address this, hardware architects build better networks using custom fast interconnects such as NVIDIA's NVLink. The trade-off is the monetary cost of these faster interconnects.
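One way to see why a faster interconnect helps is the simple latency-plus-bandwidth cost model: sending a message costs a fixed startup latency plus its size divided by link bandwidth. The sketch below is only illustrative. The link names, latency and bandwidth values, and the 1 GB message size are hypothetical placeholders, not measured NVLink or network specs.

```python
# A minimal sketch of the latency + bandwidth ("alpha-beta") communication cost model.
# All link parameters below are hypothetical placeholders, not vendor specs.

def transfer_time_s(num_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Time to send one message: fixed startup latency plus size / bandwidth."""
    return latency_s + num_bytes / bandwidth_bytes_per_s

# Hypothetical links: a commodity network vs. a faster (and pricier) custom interconnect.
links = {
    "commodity_network": {"latency_s": 5e-6, "bandwidth_bytes_per_s": 10e9},
    "fast_interconnect": {"latency_s": 1e-6, "bandwidth_bytes_per_s": 100e9},
}

message_bytes = 1e9  # assumed: 1 GB exchanged between machines per step

for name, params in links.items():
    t = transfer_time_s(message_bytes, **params)
    print(f"{name}: {t * 1e3:.2f} ms per transfer")
```

For large messages the bandwidth term dominates, so a link with 10x the bandwidth cuts transfer time by roughly 10x; whether that is worth the extra hardware cost depends on how much of the total runtime is spent communicating.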