@mkarra I think the problem with updating weights on the entire dataset each time is more with memory usage - usually it's impossible to load the entire dataset into the memory. Regarding the speed, I personally think SGD takes even longer time than GD.

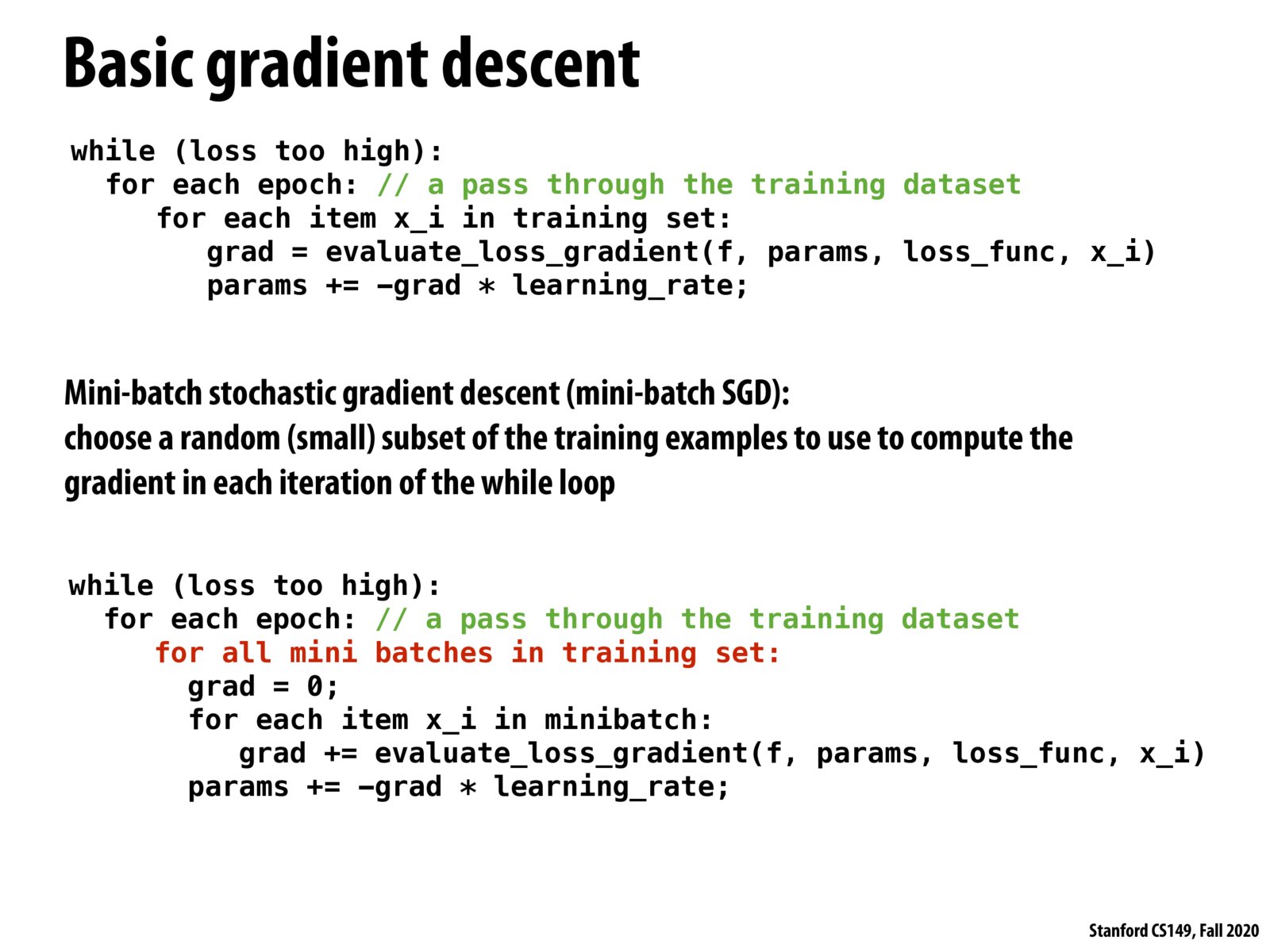

I agree with @jt that the mini-batch SGD is probably much better in terms of memory usage because it breaks up the training set into mini batches and the memory system can discard each mini batch as it finishes using it. Also, as we see in later slides, the mini-batch setup can be parallelized by batch and executed asynchronously. I think that both examples on this slide are SGD where the first one is SGD over one example at a time while the second is mini-batch SGD. GD iterates over all samples in the calculation of the gradient itself, and there is one single update per epoch.

Just am add-up, if update on the whole dataset every time, the training loss will decrease monotonously.

It is somewhat interesting that things like this may have similar inefficiencies to say, when we did mandelbrot with ISPC in a previous assignment. Although some elements may have converged and no longer contribute per loss, repeat work might need to continue until the error is satisfactory, and calculations kind of end up being wasted

Please log in to leave a comment.

Looping through all the data and updating every weight every time is very slow, so we use stochastic gradient descent to randomly select a batch of the training data that we use to update weights.