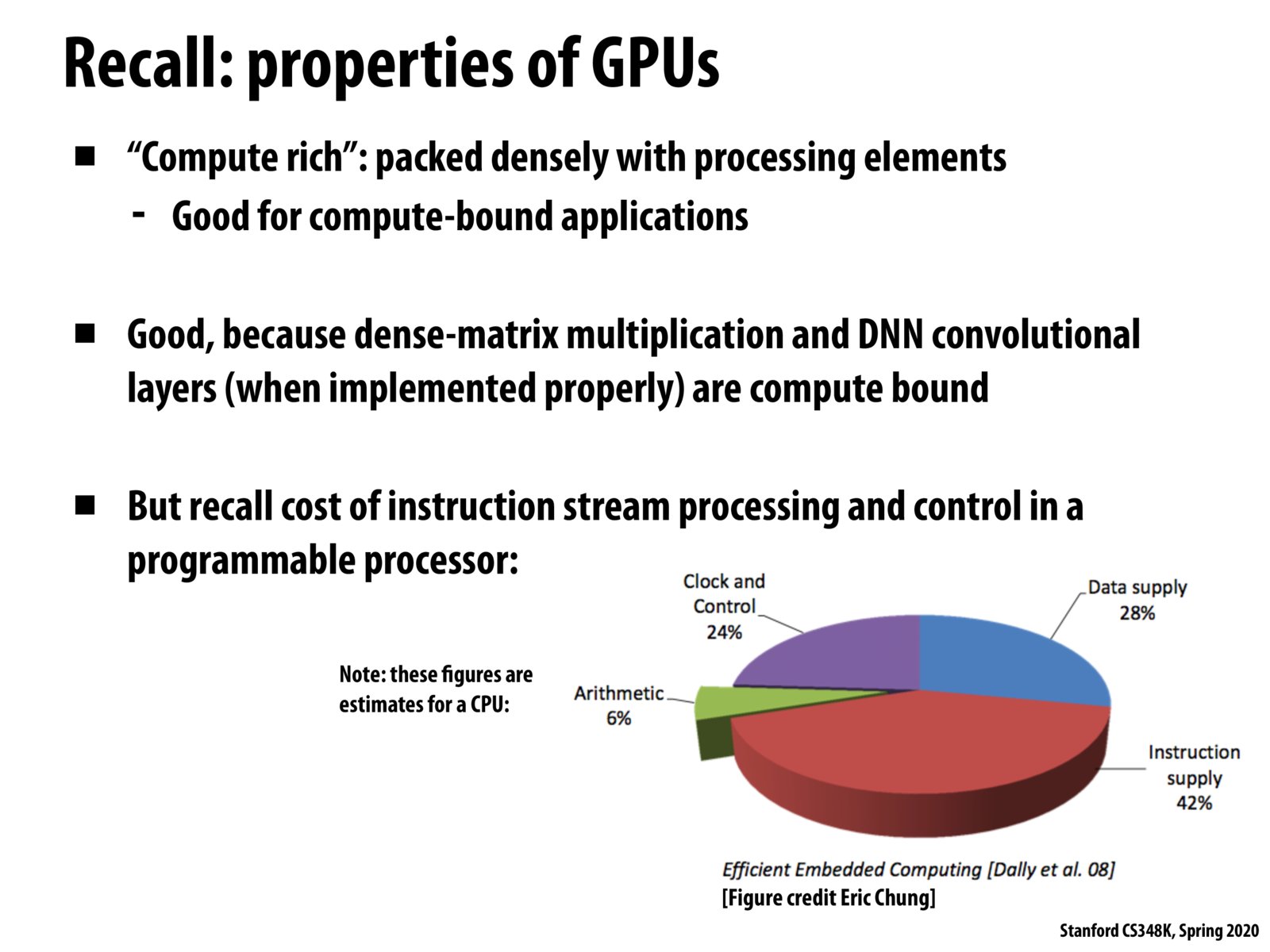

As with many trending and "hot" fields in computer science today, the effectiveness of DNNs is driving a growing demand for more efficient implementations of the operations (matrix operations) that underlie them. Although there is general consensus that GPUs are an extremely useful tool for speeding up DNN operations (due to their high capacity for parallelization), more focus has been placed on specialized hardware (hardware accelerators) designed to maximize DNN compute throughput, which on a GPU would be bogged down by the overhead needed to support flexibility for other tasks.

I have always wondered: what exactly are we looking at when we use (h)top or nvidia-smi to see the CPU/GPU utilization percentage? For example, with interleaved multithreading on Intel CPUs it's clearly impossible for all threads to be running at 100%, but sometimes this is what (h)top will show. What is the right way of interpreting these numbers, i.e., what counts as "utilization" and what doesn't?

@anon33 I am not sure about the specifics of how htop interacts with interleaved multithreading, but I believe the distinction you mentioned is that waiting on a memory access does not count as utilizing the thread, whereas performing some kind of arithmetic operation does. If that is right, you can quickly see whether the current workload is CPU-, memory-, or disk-bound by looking at which resource has the highest utilization.


To summarize what we learned in lecture, GPUs can be good for DNNs because the conv layer calculations in a DNN essentially come down to matrix multiplications. This is repeated, independent computation that can be parallelized across many cores, and within each core it also maps well onto SIMD execution, which the GPU supports. Arithmetic intensity is high, so the work is less likely to be limited by memory bandwidth. However, the argument against using a GPU has to do with the concepts we have been learning in lecture recently: we can just use something that is specifically tailored towards DNNs instead. This is kind of similar to the idea of DSLs, except that the specificity is baked into the hardware, not just the software. For example, Google's TPU can be used to run DNNs more efficiently.
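
To make the "arithmetic intensity is high" point a bit more concrete, here is a rough back-of-the-envelope sketch. The function names are made up for illustration, and it assumes an idealized model in which every matrix element is read from or written to memory exactly once and elements are 4-byte floats. Real kernels will not hit these numbers exactly.

```python
def matmul_arithmetic_intensity(m, k, n, bytes_per_element=4):
    """FLOPs per byte for C = A @ B, with A of shape (m, k) and B of shape (k, n).

    Assumption: A and B are each read once and C is written once (ideal reuse).
    """
    flops = 2 * m * k * n  # one multiply and one add per inner-product term
    bytes_moved = bytes_per_element * (m * k + k * n + m * n)
    return flops / bytes_moved


def vector_add_arithmetic_intensity(n, bytes_per_element=4):
    """FLOPs per byte for c = a + b on length-n vectors (for comparison)."""
    flops = n  # one add per element
    bytes_moved = bytes_per_element * 3 * n  # read a, read b, write c
    return flops / bytes_moved


if __name__ == "__main__":
    print(matmul_arithmetic_intensity(1024, 1024, 1024))
    print(vector_add_arithmetic_intensity(1024))
```

Under these assumptions a 1024 x 1024 x 1024 matrix multiply does about 171 FLOPs per byte moved, while an element-wise vector add does about 0.08. That gap is why matrix-multiply-heavy workloads like DNNs can keep a GPU's (or an accelerator's) arithmetic units busy rather than waiting on memory.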