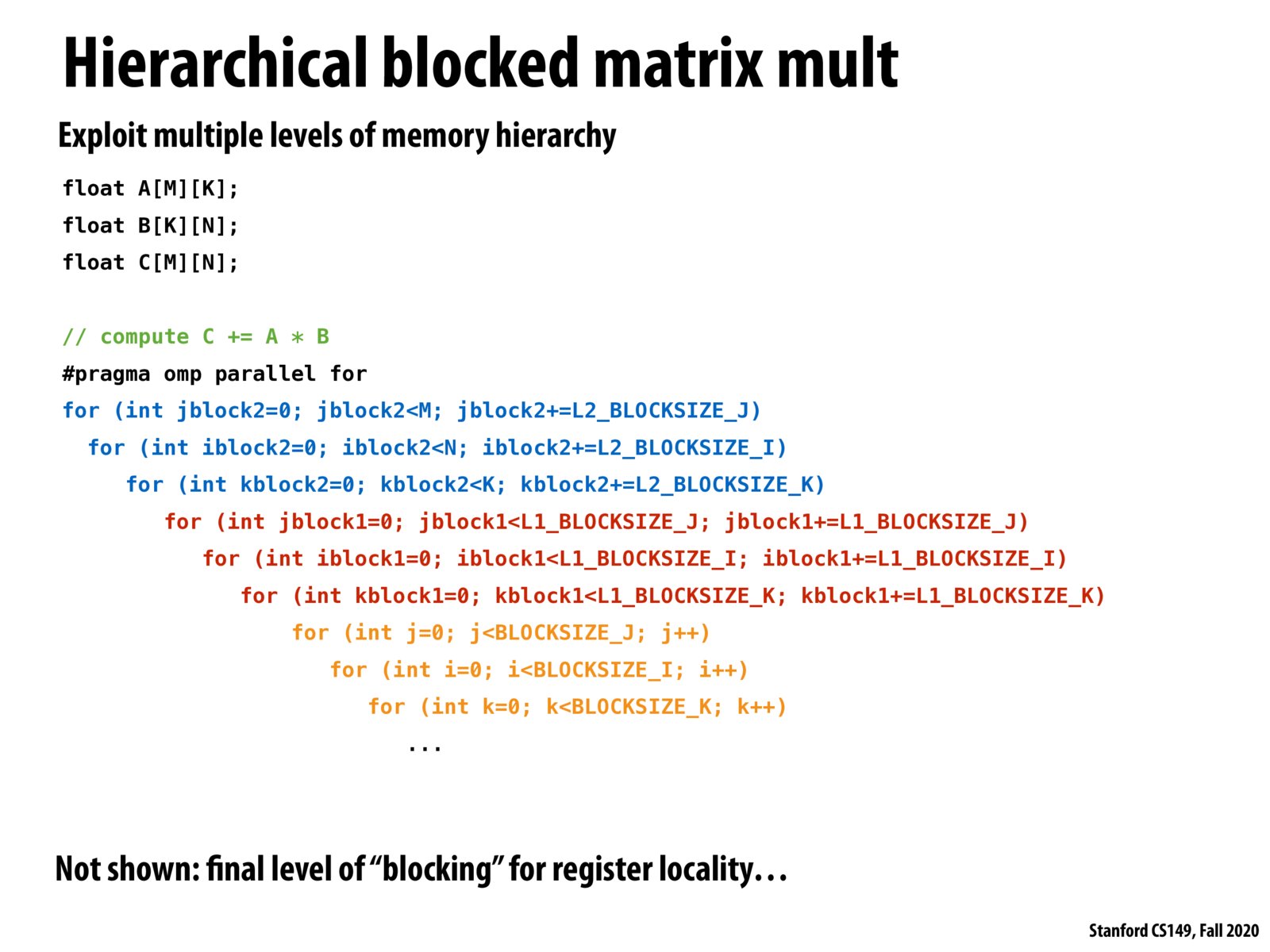

Is this assuming there is no L3 cache (or maybe just now shown?). Is there any intuition behind the relative importance of blocking for different levels of cache? I think doing it for the last-level cache is quite important, as we should ensure blocks fit in each L3 cache line to avoid going out to memory which is very expensive. But what about L2 vs. L1? If we don't want to exploit all levels of the memory hierarchy, would it be better to prioritize certain levels?

@tspint yeah this slide is just an example that you can do it with multiple levels of blocking. I think in office hours Kayvon mentioned that you'll often see L3 and L1 prioritized, since L3 is important to avoid going out to main memory as much as possible, and having too many blocking levels can be confusing and have diminishing returns.

Please log in to leave a comment.

The intuition behind this is that we can load a larger block into the L2 cache (e.g. 128x128) and then split that into smaller blocks (e.g. 16x16) to store in the L1 cache. Then, rather than going back to main memory once we finish computing the small block, we only have to go to the L2 cache to get the next 16x16 block until we finish computing the entire 128x128 block, at which point we load the next 128x128 block from main memory into L2.